
Web Application Penetration Testing

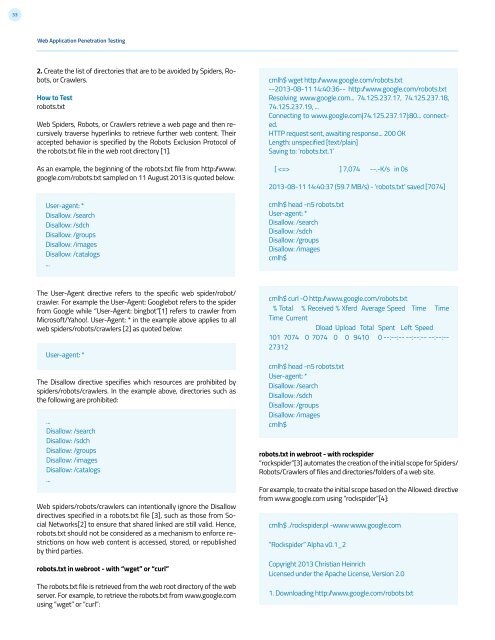

2. Create the list of directories that are to be avoided by Spiders, Robots, or Crawlers.
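As a rough illustration of this objective, the sketch below collects the Disallow entries that apply to a given user agent from robots.txt text that has already been retrieved. The function name `disallowed_paths` and its group-handling rules are assumptions made for illustration, not part of any tool named in this guide, and the sample text contains only the rules quoted later in this section.

```python
def disallowed_paths(robots_txt, agent="*"):
    """Return the Disallow values listed for `agent`, in file order.

    Hypothetical helper: it treats consecutive User-agent lines as one
    group and starts a new group at the first User-agent line that
    follows an Allow/Disallow rule.
    """
    paths = []
    group_agents = []   # user agents named by the current group
    in_rules = False    # True once the current group has at least one rule
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:                      # a new group begins here
                group_agents = []
                in_rules = False
            group_agents.append(value.lower())
        elif field in ("allow", "disallow"):
            in_rules = True
            if (field == "disallow" and value
                    and agent.lower() in group_agents
                    and value not in paths):
                paths.append(value)
    return paths


# Sample input: only the rules quoted in this section, not the full file.
SAMPLE = """\
User-agent: *
Disallow: /search
Disallow: /sdch
Disallow: /groups
Disallow: /images
Disallow: /catalogs
"""

for path in disallowed_paths(SAMPLE):
    print(path)
```

An empty `Disallow:` value is skipped because it prohibits nothing; a tester may still want to record it separately.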

How to Test

robots.txt

Web Spiders, Robots, or Crawlers retrieve a web page and then recursively traverse hyperlinks to retrieve further web content. The behavior they are expected to follow is specified by the Robots Exclusion Protocol in the robots.txt file in the web root directory [1].

As an example, the beginning of the robots.txt file from http://www.google.com/robots.txt, sampled on 11 August 2013, is quoted below:

User-agent: *

Disallow: /search

Disallow: /sdch

Disallow: /groups

Disallow: /images

Disallow: /catalogs

...

cmlh$ wget http://www.google.com/robots.txt

--2013-08-11 14:40:36-- http://www.google.com/robots.txt

Resolving www.google.com... 74.125.237.17, 74.125.237.18, 74.125.237.19, ...

Connecting to www.google.com|74.125.237.17|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: unspecified [text/plain]

Saving to: ‘robots.txt.1’

[ ] 7,074 --.-K/s in 0s

2013-08-11 14:40:37 (59.7 MB/s) - ‘robots.txt’ saved [7074]

cmlh$ head -n5 robots.txt

User-agent: *

Disallow: /search

Disallow: /sdch

Disallow: /groups

Disallow: /images

cmlh$

The User-Agent directive refers to the specific web spider/robot/crawler. For example, User-Agent: Googlebot refers to the spider from Google, while “User-Agent: bingbot”[1] refers to the crawler from Microsoft/Yahoo!. User-Agent: * in the example above applies to all web spiders/robots/crawlers [2], as quoted below:

User-agent: *

The Disallow directive specifies which resources spiders/robots/crawlers are prohibited from retrieving. In the example above, directories such as the following are prohibited:

...

Disallow: /search

Disallow: /sdch

Disallow: /groups

Disallow: /images

Disallow: /catalogs

...
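To see how a well-behaved crawler might interpret these two directives, the following minimal sketch feeds the quoted rules to Python's standard `urllib.robotparser` module and asks whether a few paths may be fetched. The helper name `build_parser` and the path `/about` are hypothetical, and the input contains only the rules quoted above, so the results say nothing about the remainder of the real file.

```python
from urllib.robotparser import RobotFileParser

# Only the rules quoted in the text; the real file continues past the "...".
EXCERPT = """\
User-agent: *
Disallow: /search
Disallow: /sdch
Disallow: /groups
Disallow: /images
Disallow: /catalogs
"""


def build_parser(robots_txt):
    """Parse robots.txt text that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(EXCERPT)

# "User-agent: *" applies to every crawler, so the name passed here
# ("Googlebot") falls back to that group. "/about" is a made-up path.
for path in ("/search", "/images/logo.png", "/about"):
    verdict = "allowed" if parser.can_fetch("Googlebot", path) else "disallowed"
    print(path, verdict)
```

The standard-library parser matches Disallow values as path prefixes, which is why a path below `/images` is treated the same as `/images` itself.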

Web spiders/robots/crawlers can intentionally ignore the Disallow directives specified in a robots.txt file [3]; those operated by Social Networks[2], for example, do so to ensure that shared links are still valid. Hence, robots.txt should not be considered a mechanism to enforce restrictions on how web content is accessed, stored, or republished by third parties.

robots.txt in webroot - with “wget” or “curl”

The robots.txt file is retrieved from the web root directory of the web server. For example, to retrieve the robots.txt from www.google.com using “wget” or “curl”:

cmlh$ curl -O http://www.google.com/robots.txt

  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current

                                 Dload  Upload   Total   Spent    Left  Speed

101  7074    0  7074    0     0   9410      0 --:--:-- --:--:-- --:--:-- 27312

cmlh$ head -n5 robots.txt

User-agent: *

Disallow: /search

Disallow: /sdch

Disallow: /groups

Disallow: /images

cmlh$
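The same retrieval can be scripted. The sketch below derives the robots.txt location in the web root from any URL on the site and downloads it with Python's standard library; the function names `robots_url` and `fetch_robots` and the 10-second timeout are assumptions chosen for illustration.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url):
    """Return the robots.txt URL in the web root of the site serving page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port); drop path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(page_url, timeout=10):
    """Download robots.txt for the site and return it as text.

    Raises urllib.error.URLError (or its subclass HTTPError) when the
    request fails, for example when the file does not exist.
    """
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `fetch_robots("http://www.google.com/")` would request the same file as the commands above; the response will differ from the 2013 sample quoted in this section.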

robots.txt in webroot - with rockspider

“rockspider”[3] automates the creation of the initial scope of files and directories/folders of a web site for Spiders/Robots/Crawlers.

For example, to create the initial scope based on the Allow: directive from www.google.com using “rockspider”[4]:

cmlh$ ./rockspider.pl -www www.google.com

“Rockspider” Alpha v0.1_2

Copyright 2013 Christian Heinrich

Licensed under the Apache License, Version 2.0

1. Downloading http://www.google.com/robots.txt