Stochastic Programming - Index of


200 STOCHASTIC PROGRAMMING

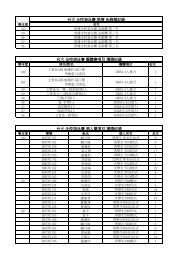

The minimal value for the pair UL:UU is therefore 16.643.

If we were to pick the pair with the largest minimum of $\alpha$ and $\beta$, we would pick the pair UL:UU, along which $\xi_2$ varies. In doing so, we are trying to find the part of the function that is most nonlinear. Looking at Figure 21, we see that as $\xi_2$ increases (with $\xi_1 = 20$), the optimal solution moves from F to E and then to D, where it stays once $\xi_2$ exceeds the y coordinate of D. It is perhaps not surprising that this is the most serious nonlinearity in $\varphi$.

If we instead look for the random variable with the highest average nonlinearity, by summing the errors over those pairs along which the given random variable varies, we find that for $\tilde{\xi}_1$ the sum is $9.5 + 15.143 = 24.643$, and for $\tilde{\xi}_2$ it is $8 + 16.643$, which also equals $24.643$. In other words, this measure does not favor either variable.
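The two selection rules above (largest single pair error versus summed error per variable) can be compared mechanically. The following is a minimal Python sketch, not part of the original example: it groups the four pair errors quoted in the text by the random variable that varies along each pair and applies both rules. The names `pair_errors`, `largest_single_error` and `summed_errors` are hypothetical, chosen for illustration. Which of the two $\tilde{\xi}_1$ pairs carries 9.5 and which carries 15.143 is not stated here, and the sketch does not need it.

```python
import math

# Pair errors (the minimum of alpha and beta), grouped by the random variable
# that varies along each pair of neighboring extreme points. In the pair
# labels the first letter refers to xi_1 and the second to xi_2.
pair_errors = {
    "xi1": [9.5, 15.143],  # pairs LL:UL and LU:UU (assignment not needed here)
    "xi2": [8.0, 16.643],  # pairs LL:LU and UL:UU; 16.643 belongs to UL:UU
}


def largest_single_error(errors):
    """Variable that varies along the pair with the largest error."""
    return max(errors, key=lambda v: max(errors[v]))


def summed_errors(errors):
    """Total error over the pairs along which each variable varies."""
    return {v: sum(vals) for v, vals in errors.items()}


totals = summed_errors(pair_errors)
print(largest_single_error(pair_errors))             # xi2
print({v: round(t, 3) for v, t in totals.items()})   # {'xi1': 24.643, 'xi2': 24.643}
print(math.isclose(totals["xi1"], totals["xi2"]))    # True, so no winner
```

The totals are compared with `math.isclose` rather than `==` because floating-point evaluation of 9.5 + 15.143 and 8 + 16.643 need not give bit-identical results.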

The next approach we suggested was to look at the dual variables as in<br />

(5.1). The right-hand side structure is very simple in our example, so it is<br />

easy to find the connections. We define two random variables: ˜π 1 for the row<br />

constraining x, and˜π 2 for the row constraining y. With the simple kind <strong>of</strong><br />

uniform distributions we have assumed, each <strong>of</strong> the four values for ˜π 1 and ˜π 2<br />

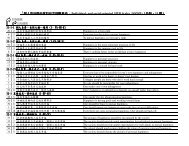

will have probability 0.25. Using Table 1, we see that the possible values for<br />

˜π 1 are 0, 1 and 3 (with 0 appearing twice), while for ˜π 2 they are 0, 2 and 3.5<br />

(also with 0 appearing twice). There are different ideas we can follow.<br />

1. We can find out how the dual variables vary between the extreme points. The largest individual change is that $\tilde{\pi}_2$ falls from 3.5 to 0 as we go from UL to UU. This again suggests that $\tilde{\xi}_2$ is a candidate for partitioning.

2. We can calculate $E\tilde{\pi} = (1, \tfrac{11}{8})$ and the individual variances, which are 1.5 and 2.17. If we choose based on variance, we pick $\tilde{\xi}_2$.

3. We also argued earlier that the size of the support is of some importance. One way of accommodating that is to multiply all outcomes by the length of the support. (That way, all dual variables are, in a sense, a measure of change per total support.) This should make the dual variables comparable. The calculations are left to the reader. We now end up with $\tilde{\pi}_1$ having the largest variance. (And if we now look at the biggest change in dual variable over pairs of neighboring extreme points, $\tilde{\xi}_1$ will be the one to partition.)
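The arithmetic behind items 2 and 3 can be checked with a short Python sketch using exact fractions. It is illustrative only: the helper names are hypothetical, and the support lengths (20 for $\tilde{\xi}_1$, 10 for $\tilde{\xi}_2$) are an assumption, inferred from the look-ahead split points $\xi_1 = 10$ and $\xi_2 = 5$ being midpoints of supports that start at 0. They are not stated in this excerpt.

```python
from fractions import Fraction

# Dual-variable outcomes at the four extreme points, keyed by the random
# variable each dual belongs to (values as quoted from Table 1). Each extreme
# point has probability 1/4, so plain averages suffice.
pi = {
    "xi1": [Fraction(0), Fraction(0), Fraction(1), Fraction(3)],
    "xi2": [Fraction(0), Fraction(0), Fraction(2), Fraction(7, 2)],
}

# ASSUMPTION (not stated in this excerpt): supports [0, 20] and [0, 10].
support_length = {"xi1": 20, "xi2": 10}


def mean(values):
    """Expectation under equal weights 1/len(values)."""
    return sum(values) / len(values)


def variance(values):
    """Population variance under equal weights."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)


def pick_largest(stats):
    """Name of the variable with the largest statistic."""
    return max(stats, key=stats.get)


raw_var = {v: variance(vals) for v, vals in pi.items()}
# Item 3: multiply every outcome by the (assumed) length of the support.
scaled_var = {
    v: variance([x * support_length[v] for x in vals]) for v, vals in pi.items()
}

print(mean(pi["xi1"]), mean(pi["xi2"]))             # 1 11/8
print(raw_var["xi1"], float(raw_var["xi2"]))        # 3/2 2.171875
print(pick_largest(raw_var))                        # xi2
print(scaled_var["xi1"], float(scaled_var["xi2"]))  # 600 217.1875
print(pick_largest(scaled_var))                     # xi1
```

Under these assumed lengths the scaled variances are 600 and 217.1875, so the ranking flips from $\tilde{\pi}_2$ to $\tilde{\pi}_1$, in line with item 3. Exact fractions avoid rounding $\tfrac{11}{8}$ and the variance $\tfrac{139}{64} \approx 2.17$.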

No conclusions about what makes a good heuristic should be drawn from these numbers. We have presented them to illustrate the computations and to indicate how one can argue about partitioning. Before we conclude, let us consider the “look-ahead” strategy (5.2). In this case there are two possibilities: either we split at $\xi_1 = 10$ or we split at $\xi_2 = 5$. If we check what we need to compute in this case, we will find that some calculations are required in addition to those in Table 1, and