Stochastic Programming - Index of



Remember, however, that this is not the true value of $Q(x^k)$; it is just an estimate. In other words, we have now observed two major differences from the exact L-shaped method (page 171). First, we operate on a sample rather than on all outcomes, and, secondly, what we calculate is an estimate of a lower bound on $Q(x^k)$ rather than $Q(x^k)$ itself. Hence, since we have a lower bound, what we are doing is more similar to what we did when we used the L-shaped decomposition method within approximation schemes (see page 204). However, the reason for the lower bound is somewhat different. In the bounding version of L-shaped, the lower bound was based on conditional expectations, whereas here it is based on inexact optimization. On the other hand, we have earlier pointed out that the Jensen lower bound has three different interpretations, one of which is to use conditional expectations (as in procedure Bounding L-shaped) and another that is inexact optimization (as in SD). So what is actually the principal difference?

For the three interpretations of the Jensen bound to be equivalent, the limited set of bases must come from solving the recourse problem in the points of conditional expectations. That is not the case in SD. Here the points are random (according to the sample $\xi^j$). Using a limited number of bases still produces a lower bound, but not the Jensen lower bound.
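The claim that a limited number of bases still produces a lower bound can be checked on a toy case. The sketch below is an illustration only and is not taken from the text: it assumes a simple-recourse problem $Q(x,\xi) = \min\{q^+ y^+ + q^- y^- : y^+ - y^- = \xi - x,\ y \ge 0\}$, whose dual is $\max\{\pi(\xi - x) : -q^- \le \pi \le q^+\}$ with the two dual vertices $q^+$ and $-q^-$. The function names, the cost values and the uniform sample are all hypothetical choices.

```python
import random

Q_PLUS, Q_MINUS = 3.0, 1.0  # assumed shortage and surplus costs (illustrative)

def recourse_exact(x, xi):
    """Exact recourse value: maximize pi * (xi - x) over both dual vertices."""
    h = xi - x
    return max(Q_PLUS * h, -Q_MINUS * h)

def recourse_restricted(x, xi, vertices):
    """Inexact optimization: maximize over a limited set of dual vertices only."""
    h = xi - x
    return max(pi * h for pi in vertices)

rng = random.Random(0)
sample = [rng.uniform(0.0, 10.0) for _ in range(1000)]  # random points xi^j
x = 4.0
limited = [Q_PLUS]  # pretend only one dual vertex has been found so far

exact_mean = sum(recourse_exact(x, xi) for xi in sample) / len(sample)
lower_mean = sum(recourse_restricted(x, xi, limited) for xi in sample) / len(sample)

# The restricted value never exceeds the exact one, outcome by outcome,
# so the sample average is a lower bound on the exact sample average.
print(lower_mean <= exact_mean)
```

Because the points are sampled rather than placed at conditional expectations, the bound obtained this way is a valid lower bound on the sample average but has no reason to coincide with the Jensen bound.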

Therefore SD and the bounding version of L-shaped are really quite different. The reason for the lower bound is different, and the objective value in SD is only a lower bound in terms of expectations (due to sampling). One method picks the limited number of points in a very careful way, the other at random. One method has an exact stopping criterion (error bound), the other has a statistically based stopping rule. So, more than anything else, they are alternative approaches. If one cannot solve the exact problem, one resorts either to bounds or to sample-based methods.

In the L-shaped method we demonstrated how to find optimality cuts. We can now find a cut corresponding to $x^k$ (which is not binding and might not even be a lower bound, although it represents an estimate of a lower bound). As for the L-shaped method, we shall replace $Q(x)$ in the objective by $\theta$, and then add constraints. The cut generated in iteration $k$ is given by

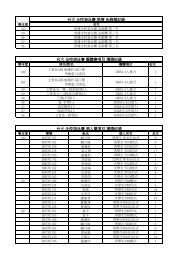

$$\theta \ge \frac{1}{k} \sum_{j=1}^{k} (\pi_j^k)^T \left[\xi^j - T(\xi^j)x\right] = \alpha_k^k + (\beta_k^k)^T x.$$

The double set of indices on $\alpha$ and $\beta$ indicates that the cut was generated in iteration $k$ (the subscript) and that it has been updated in iteration $k$ (the superscript).
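Matching terms in the cut gives $\alpha_k^k = \frac{1}{k}\sum_{j=1}^{k} (\pi_j^k)^T \xi^j$ and $\beta_k^k = -\frac{1}{k}\sum_{j=1}^{k} T(\xi^j)^T \pi_j^k$. The following minimal sketch computes these coefficients, assuming the dual vectors $\pi_j^k$ have already been obtained elsewhere. The function `sd_cut`, the helper `dot` and the small data set are hypothetical and serve only to check the algebra.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))

def sd_cut(duals, xis, Ts):
    """Return (alpha, beta) so that the cut reads theta >= alpha + beta^T x.

    duals[j] is pi_j^k, xis[j] is xi^j, Ts[j] is the matrix T(xi^j)
    stored as a list of rows.
    """
    k = len(duals)
    n = len(Ts[0][0])  # number of first-stage variables
    alpha = sum(dot(pi, xi) for pi, xi in zip(duals, xis)) / k
    # beta_i = -(1/k) * sum_j pi_j^T (column i of T_j)
    beta = [
        -sum(dot(pi, [row[i] for row in T]) for pi, T in zip(duals, Ts)) / k
        for i in range(n)
    ]
    return alpha, beta

# Made-up instance: k = 2 samples, 2 recourse rows, 2 first-stage variables.
duals = [[1.0, 0.0], [0.0, 2.0]]
xis = [[3.0, 1.0], [2.0, 4.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
Ts = [identity, identity]
alpha, beta = sd_cut(duals, xis, Ts)

# Evaluate the summation form of the cut directly at an arbitrary point x.
x = [1.0, 1.0]
direct = sum(
    dot(pi, [xi_r - dot(row, x) for xi_r, row in zip(xi, T)])
    for pi, xi, T in zip(duals, xis, Ts)
) / len(duals)
print(alpha, beta, direct)
```

For this data both forms of the right-hand side agree at the test point, which is the identity stated in the cut.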

In contrast to the L-shaped decomposition method, we must now also look at the old cuts. The reason is that, although we expect these cuts to be loose (since we use inexact optimization), they may in fact be far too tight (since they are based on a sample). Also, being old, they are based on a sample that