- Page 1 and 2:

Measure, Integration & Real Analysis

- Page 3 and 4:

About the Author Sheldon Axler was

- Page 5 and 6:

viii Contents 2D Lebesgue Measure 4

- Page 7 and 8:

x Contents 6E Consequences of Baire

- Page 9 and 10:

xii Contents 11 Fourier Analysis 33

- Page 11 and 12:

Preface for Instructors You are abo

- Page 13 and 14:

xvi Preface for Instructors • Cha

- Page 15 and 16:

Acknowledgments I owe a huge intell

- Page 17 and 18:

2 Chapter 1 Riemann Integration 1A

- Page 19 and 20:

4 Chapter 1 Riemann Integration m (

- Page 21 and 22:

6 Chapter 1 Riemann Integration whe

- Page 23 and 24:

8 Chapter 1 Riemann Integration 6 S

- Page 25 and 26:

10 Chapter 1 Riemann Integration Ca

- Page 27 and 28:

12 Chapter 1 Riemann Integration EX

- Page 29 and 30:

14 Chapter 2 Measures 2A Outer Meas

- Page 31 and 32:

16 Chapter 2 Measures The next resu

- Page 33 and 34:

18 Chapter 2 Measures Outer Measure

- Page 35 and 36:

20 Chapter 2 Measures Now we can pr

- Page 37 and 38:

≈ 7π Chapter 2 Measures Clearly

- Page 39 and 40:

24 Chapter 2 Measures 10 Prove that

- Page 41 and 42:

26 Chapter 2 Measures We have shown

- Page 43 and 44:

28 Chapter 2 Measures Borel Subsets

- Page 45 and 46:

30 Chapter 2 Measures Inverse image

- Page 47 and 48:

32 Chapter 2 Measures The definitio

- Page 49 and 50:

34 Chapter 2 Measures 2.42 Definiti

- Page 51 and 52:

36 Chapter 2 Measures The next resu

- Page 53 and 54:

38 Chapter 2 Measures EXERCISES 2B

- Page 55 and 56:

40 Chapter 2 Measures 23 Suppose f

- Page 57 and 58:

42 Chapter 2 Measures • Suppose X

- Page 59 and 60:

44 Chapter 2 Measures For convenien

- Page 61 and 62:

46 Chapter 2 Measures 5 Suppose (X,

- Page 63 and 64:

48 Chapter 2 Measures Thus |A ∪ G

- Page 65 and 66:

50 Chapter 2 Measures where the equ

- Page 67 and 68:

52 Chapter 2 Measures Lebesgue Meas

- Page 69 and 70:

54 Chapter 2 Measures In practice,

- Page 71 and 72:

56 Chapter 2 Measures 2.74 Definiti

- Page 73 and 74:

58 Chapter 2 Measures Now we can de

- Page 75 and 76:

60 Chapter 2 Measures EXERCISES 2D

- Page 77 and 78:

62 Chapter 2 Measures 2E Convergenc

- Page 79 and 80:

64 Chapter 2 Measures Proof Suppose

- Page 81 and 82:

66 Chapter 2 Measures Luzin’s The

- Page 83 and 84:

68 Chapter 2 Measures For each inte

- Page 85 and 86:

70 Chapter 2 Measures The next resu

- Page 87 and 88:

72 Chapter 2 Measures 9 Suppose F 1

- Page 89 and 90:

74 Chapter 3 Integration 3A Integra

- Page 91 and 92:

76 Chapter 3 Integration 3.6 Exampl

- Page 93 and 94:

78 Chapter 3 Integration 3.11 Monot

- Page 95 and 96:

80 Chapter 3 Integration Now we can

- Page 97 and 98:

82 Chapter 3 Integration The condit

- Page 99 and 100:

84 Chapter 3 Integration The inequa

- Page 101 and 102:

86 Chapter 3 Integration 12 Show th

- Page 103 and 104:

88 Chapter 3 Integration 3B Limits

- Page 105 and 106:

90 Chapter 3 Integration 3.27 Defin

- Page 107 and 108:

92 Chapter 3 Integration Suppose (X

- Page 109 and 110:

94 Chapter 3 Integration Proof Supp

- Page 111 and 112:

96 Chapter 3 Integration 3.42 Examp

- Page 113 and 114:

98 Chapter 3 Integration in other w

- Page 115 and 116:

100 Chapter 3 Integration 7 Let λ

- Page 117 and 118:

102 Chapter 4 Differentiation 4A Ha

- Page 119 and 120:

104 Chapter 4 Differentiation Suppo

- Page 121 and 122:

106 Chapter 4 Differentiation EXERC

- Page 123 and 124:

108 Chapter 4 Differentiation 4B De

- Page 125 and 126:

110 Chapter 4 Differentiation Deriv

- Page 127 and 128:

112 Chapter 4 Differentiation The n

- Page 129 and 130:

114 Chapter 4 Differentiation Proof

- Page 131 and 132:

Chapter 5 Product Measures Lebesgue

- Page 133 and 134:

118 Chapter 5 Product Measures 5.3

- Page 135 and 136:

120 Chapter 5 Product Measures Mono

- Page 137 and 138:

122 Chapter 5 Product Measures Now

- Page 139 and 140:

124 Chapter 5 Product Measures The

- Page 141 and 142:

126 Chapter 5 Product Measures 5.23

- Page 143 and 144:

128 Chapter 5 Product Measures EXER

- Page 145 and 146:

130 Chapter 5 Product Measures The

- Page 147 and 148:

132 Chapter 5 Product Measures As y

- Page 149 and 150:

134 Chapter 5 Product Measures The

- Page 151 and 152:

136 Chapter 5 Product Measures 5C L

- Page 153 and 154:

138 Chapter 5 Product Measures The

- Page 155 and 156:

140 Chapter 5 Product Measures Volu

- Page 157 and 158:

142 Chapter 5 Product Measures This

- Page 159 and 160:

144 Chapter 5 Product Measures EXER

- Page 161 and 162:

Chapter 6 Banach Spaces We begin th

- Page 163 and 164:

148 Chapter 6 Banach Spaces The mat

- Page 165 and 166:

150 Chapter 6 Banach Spaces The def

- Page 167 and 168:

152 Chapter 6 Banach Spaces Entranc

- Page 169 and 170:

154 Chapter 6 Banach Spaces 14 Supp

- Page 171 and 172:

156 Chapter 6 Banach Spaces For b a

- Page 173 and 174:

158 Chapter 6 Banach Spaces We now

- Page 175 and 176:

160 Chapter 6 Banach Spaces 6.28 Ex

- Page 177 and 178:

162 Chapter 6 Banach Spaces EXERCIS

- Page 179 and 180:

164 Chapter 6 Banach Spaces Sometim

- Page 181 and 182:

166 Chapter 6 Banach Spaces 6.40 De

- Page 183 and 184:

168 Chapter 6 Banach Spaces 6.45 Ex

- Page 185 and 186:

170 Chapter 6 Banach Spaces EXERCIS

- Page 187 and 188:

172 Chapter 6 Banach Spaces 6D Line

- Page 189 and 190:

174 Chapter 6 Banach Spaces Discont

- Page 191 and 192:

176 Chapter 6 Banach Spaces The not

- Page 193 and 194:

178 Chapter 6 Banach Spaces If V is

- Page 195 and 196:

180 Chapter 6 Banach Spaces Then A

- Page 197 and 198:

182 Chapter 6 Banach Spaces 2 Suppo

- Page 199 and 200:

184 Chapter 6 Banach Spaces 6E Cons

- Page 201 and 202:

186 Chapter 6 Banach Spaces Because

- Page 203 and 204:

188 Chapter 6 Banach Spaces The nex

- Page 205 and 206:

190 Chapter 6 Banach Spaces 6.86 Pr

- Page 207 and 208:

192 Chapter 6 Banach Spaces A linea

- Page 209 and 210:

194 Chapter 7 L^p Spaces 7A L^p(μ

- Page 211 and 212:

196 Chapter 7 L^p Spaces What we ca

- Page 213 and 214:

198 Chapter 7 L^p Spaces 7.11 Examp

- Page 215 and 216:

200 Chapter 7 L^p Spaces 6 Suppose

- Page 217 and 218:

202 Chapter 7 L^p Spaces 7B L^p(μ

- Page 219 and 220:

204 Chapter 7 L^p Spaces L^p(μ) I

- Page 221 and 222:

206 Chapter 7 L^p Spaces Duality Re

- Page 223 and 224:

208 Chapter 7 L^p Spaces EXERCISES

- Page 225 and 226:

210 Chapter 7 L^p Spaces 18 Suppose

- Page 227 and 228:

212 Chapter 8 Hilbert Spaces 8A Inn

- Page 229 and 230:

214 Chapter 8 Hilbert Spaces As we

- Page 231 and 232:

216 Chapter 8 Hilbert Spaces The ne

- Page 233 and 234:

218 Chapter 8 Hilbert Spaces The or

- Page 235 and 236:

220 Chapter 8 Hilbert Spaces The pr

- Page 237 and 238:

222 Chapter 8 Hilbert Spaces 9 The

- Page 239 and 240:

224 Chapter 8 Hilbert Spaces 8B Ort

- Page 241 and 242:

226 Chapter 8 Hilbert Spaces In the

- Page 243 and 244:

228 Chapter 8 Hilbert Spaces 8.37 o

- Page 245 and 246:

230 Chapter 8 Hilbert Spaces The re

- Page 247 and 248:

232 Chapter 8 Hilbert Spaces 8.45 r

- Page 249 and 250:

234 Chapter 8 Hilbert Spaces Suppos

- Page 251 and 252:

236 Chapter 8 Hilbert Spaces 16 Sup

- Page 253 and 254:

238 Chapter 8 Hilbert Spaces • Fo

- Page 255 and 256:

240 Chapter 8 Hilbert Spaces • Su

- Page 257 and 258:

242 Chapter 8 Hilbert Spaces Proof

- Page 259 and 260:

244 Chapter 8 Hilbert Spaces 8.61 D

- Page 261 and 262:

246 Chapter 8 Hilbert Spaces A mome

- Page 263 and 264:

248 Chapter 8 Hilbert Spaces 8.73 E

- Page 265 and 266:

250 Chapter 8 Hilbert Spaces Riesz

- Page 267 and 268:

252 Chapter 8 Hilbert Spaces 10 (a)

- Page 269 and 270:

254 Chapter 8 Hilbert Spaces 23 Pro

- Page 271 and 272:

256 Chapter 9 Real and Complex Meas

- Page 273 and 274:

258 Chapter 9 Real and Complex Meas

- Page 275 and 276:

260 Chapter 9 Real and Complex Meas

- Page 277 and 278:

262 Chapter 9 Real and Complex Meas

- Page 279 and 280:

264 Chapter 9 Real and Complex Meas

- Page 281 and 282:

266 Chapter 9 Real and Complex Meas

- Page 283 and 284:

268 Chapter 9 Real and Complex Meas

- Page 285 and 286:

270 Chapter 9 Real and Complex Meas

- Page 287 and 288:

≈ 100e Chapter 9 Real and Complex

- Page 289 and 290:

274 Chapter 9 Real and Complex Meas

- Page 291 and 292:

276 Chapter 9 Real and Complex Meas

- Page 293 and 294:

278 Chapter 9 Real and Complex Meas

- Page 295 and 296:

Chapter 10 Linear Maps on Hilbert S

- Page 297 and 298:

282 Chapter 10 Linear Maps on Hilbe

- Page 299 and 300:

284 Chapter 10 Linear Maps on Hilbe

- Page 301 and 302:

286 Chapter 10 Linear Maps on Hilbe

- Page 303 and 304:

288 Chapter 10 Linear Maps on Hilbe

- Page 305 and 306:

290 Chapter 10 Linear Maps on Hilbe

- Page 307 and 308:

292 Chapter 10 Linear Maps on Hilbe

- Page 309 and 310:

294 Chapter 10 Linear Maps on Hilbe

- Page 311 and 312:

296 Chapter 10 Linear Maps on Hilbe

- Page 313 and 314:

298 Chapter 10 Linear Maps on Hilbe

- Page 315 and 316:

300 Chapter 10 Linear Maps on Hilbe

- Page 317 and 318:

302 Chapter 10 Linear Maps on Hilbe

- Page 319 and 320:

304 Chapter 10 Linear Maps on Hilbe

- Page 321 and 322:

306 Chapter 10 Linear Maps on Hilbe

- Page 323 and 324:

308 Chapter 10 Linear Maps on Hilbe

- Page 325 and 326:

310 Chapter 10 Linear Maps on Hilbe

- Page 327 and 328:

312 Chapter 10 Linear Maps on Hilbe

- Page 329 and 330:

≈ 100π Chapter 10 Linear Maps on

- Page 331 and 332:

316 Chapter 10 Linear Maps on Hilbe

- Page 333 and 334:

318 Chapter 10 Linear Maps on Hilbe

- Page 335 and 336:

320 Chapter 10 Linear Maps on Hilbe

- Page 337 and 338:

322 Chapter 10 Linear Maps on Hilbe

- Page 339 and 340:

324 Chapter 10 Linear Maps on Hilbe

- Page 341 and 342:

326 Chapter 10 Linear Maps on Hilbe

- Page 343 and 344:

328 Chapter 10 Linear Maps on Hilbe

- Page 345 and 346:

330 Chapter 10 Linear Maps on Hilbe

- Page 347 and 348:

332 Chapter 10 Linear Maps on Hilbe

- Page 349 and 350:

334 Chapter 10 Linear Maps on Hilbe

- Page 351 and 352:

336 Chapter 10 Linear Maps on Hilbe

- Page 353 and 354:

338 Chapter 10 Linear Maps on Hilbe

- Page 355 and 356:

340 Chapter 11 Fourier Analysis 11A

- Page 357 and 358:

342 Chapter 11 Fourier Analysis Hil

- Page 359 and 360:

344 Chapter 11 Fourier Analysis 11.

- Page 361 and 362:

346 Chapter 11 Fourier Analysis 11.

- Page 363 and 364:

348 Chapter 11 Fourier Analysis Sol

- Page 365 and 366:

350 Chapter 11 Fourier Analysis Fou

- Page 367 and 368:

352 Chapter 11 Fourier Analysis In

- Page 369 and 370:

354 Chapter 11 Fourier Analysis 12

- Page 371 and 372:

356 Chapter 11 Fourier Analysis Now

- Page 373 and 374:

358 Chapter 11 Fourier Analysis 11.

- Page 375 and 376:

360 Chapter 11 Fourier Analysis 11.

- Page 377 and 378:

362 Chapter 11 Fourier Analysis 11

- Page 379 and 380:

364 Chapter 11 Fourier Analysis (b)

- Page 381 and 382:

366 Chapter 11 Fourier Analysis Not

- Page 383 and 384:

368 Chapter 11 Fourier Analysis Con

- Page 385 and 386:

370 Chapter 11 Fourier Analysis Our

- Page 387 and 388:

372 Chapter 11 Fourier Analysis The

- Page 389 and 390:

374 Chapter 11 Fourier Analysis Fou

- Page 391 and 392:

376 Chapter 11 Fourier Analysis whe

- Page 393 and 394:

378 Chapter 11 Fourier Analysis 3 S

- Page 395 and 396:

Chapter 12 Probability Measures Pro

- Page 397 and 398:

382 Chapter 12 Probability Measures

- Page 399 and 400:

384 Chapter 12 Probability Measures

- Page 401 and 402:

386 Chapter 12 Probability Measures

- Page 403 and 404:

388 Chapter 12 Probability Measures

- Page 405 and 406:

390 Chapter 12 Probability Measures

- Page 407 and 408:

392 Chapter 12 Probability Measures

- Page 409 and 410:

394 Chapter 12 Probability Measures

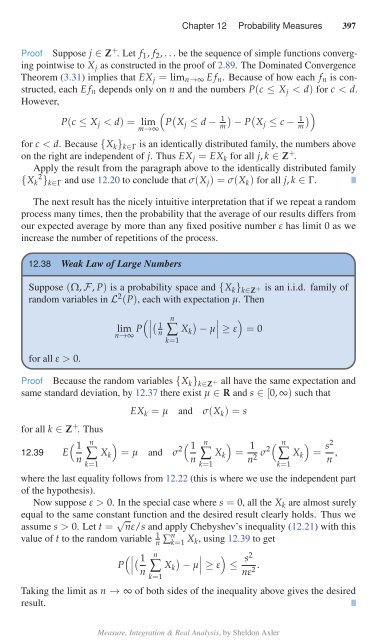

- Page 411:

396 Chapter 12 Probability Measures

- Page 415 and 416:

Photo Credits • page vi: photos b

- Page 417 and 418:

Bibliography The chapters of this b

- Page 419 and 420:

404 Notation Index ∑_{k=1}^∞ g_k,

- Page 421 and 422:

Index Abel summation, 344 absolute

- Page 423 and 424:

408 Index Hahn Decomposition Theore

- Page 425 and 426:

410 Index random variable, 385 rang