NA SENDA DA IMAGEM A Representação e a Tecnologia na Arte


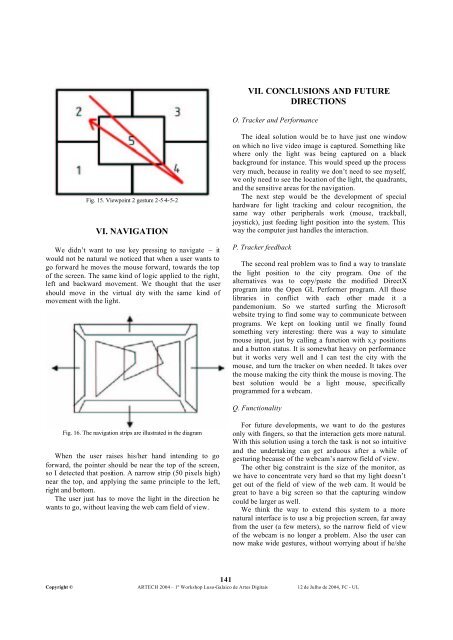

Fig. 15. Viewpoint 2 gesture 2-5-4-5-2

VI. NAVIGATION

We did not want to use key presses for navigation because that would not feel natural. We noticed that when a user wants to go forward, he/she moves the mouse forward, towards the top of the screen. The same logic applies to right, left and backward movement. We therefore decided that the user should move through the virtual city by making the same kind of movement with the light.

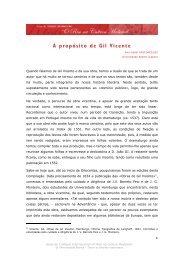

Fig. 16. The navigation strips are illustrated in the diagram

When the user raises his/her hand intending to go forward, the pointer should be near the top of the screen, so we detect that position with a narrow strip (50 pixels high) near the top. The same principle applies to the left, right and bottom of the screen.

The user just has to move the light in the direction he/she wants to go, without leaving the webcam's field of view.
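The strip test described above can be sketched as follows. This is only an illustration, not the authors' code. It assumes a top-left origin with y growing downward, the same 50-pixel thickness for all four strips (the text gives this figure only for the top strip), and that the top and bottom strips take priority in the corners. The function name `navigation_direction` is hypothetical.

```python
STRIP = 50  # strip thickness in pixels; the text states 50 px for the top strip


def navigation_direction(x, y, width, height, strip=STRIP):
    """Map a pointer position to a navigation command, or None.

    Assumptions (not from the paper): origin at the top-left corner,
    y grows downward, and top/bottom strips win in the corners.
    """
    if y < strip:
        return "forward"    # pointer inside the strip along the top edge
    if y >= height - strip:
        return "backward"   # pointer inside the strip along the bottom edge
    if x < strip:
        return "left"       # pointer inside the strip along the left edge
    if x >= width - strip:
        return "right"      # pointer inside the strip along the right edge
    return None             # pointer in the central area: no movement


if __name__ == "__main__":
    # Pointer near the top of a 640x480 window
    print(navigation_direction(320, 10, 640, 480))
```

Keeping the strips narrow leaves the central area of the window free for other interaction.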

VII. CONCLUSIONS AND FUTURE DIRECTIONS

O. Tracker and Performance<br />

The ideal solution would be to have just one window in which no live video image is shown, for instance one where only the light is captured against a black background. This would speed up the process considerably, because in reality we do not need to see ourselves; we only need to see the location of the light, the quadrants, and the sensitive areas for navigation.
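To illustrate why a light on a black background would be cheap to process, here is a minimal sketch that locates the light as the centroid of all bright pixels. It is not the tracker used in this work. The frame representation (a list of rows of grayscale values from 0 to 255), the threshold of 200 and the name `light_position` are assumptions made for the example.

```python
def light_position(frame, threshold=200):
    """Return the (x, y) centroid of pixels at or above threshold, or None.

    frame is assumed to be a list of rows of grayscale values (0-255);
    this representation and the default threshold are illustrative only.
    """
    count = sum_x = sum_y = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None  # no light visible in this frame
    return (sum_x / count, sum_y / count)


if __name__ == "__main__":
    # A 4x4 dark frame with two bright pixels on the second row
    frame = [
        [0, 0, 0, 0],
        [0, 0, 255, 255],
        [0, 0, 0, 0],
        [0, 0, 0, 0],
    ]
    print(light_position(frame))
```

With a dark background, a single pass over the pixels is enough and no live video needs to be drawn.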

The next step would be the development of special hardware for light tracking and colour recognition that works the same way as other peripherals (mouse, trackball, joystick) and simply feeds the light position into the system. This way the computer only has to handle the interaction.

P. Tracker feedback<br />

The second real problem was to find a way to pass the light position to the city program. One alternative was to copy and paste the modified DirectX program into the OpenGL Performer program, but the conflicts between all those libraries made it a pandemonium. So we started searching the Microsoft website for a way to communicate between programs. We kept looking until we finally found something very interesting: there was a way to simulate mouse input just by calling a function with x, y positions and a button status. It is somewhat heavy on performance, but it works very well: we can test the city with the mouse and turn the tracker on when needed. The tracker takes over the mouse, making the city think the mouse is moving. The best solution would be a light mouse, specifically programmed for a webcam.
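The idea of feeding the tracker position to the city program as simulated mouse input can be sketched as below. The text does not name the function that was called; on Windows, the Win32 API provides `SendInput` (and the older `mouse_event`) for this purpose. To keep the example portable, the actual injection is left to a hypothetical `inject(x, y, button_down)` callback, and the names `to_screen` and `feed_tracker`, as well as the window sizes, are assumptions.

```python
def to_screen(x, y, cam_size, screen_size):
    """Scale a position from capture-window pixels to screen pixels.

    Positions outside the capture window are clamped to its edges.
    """
    cam_w, cam_h = cam_size
    scr_w, scr_h = screen_size
    cx = min(max(x, 0), cam_w - 1)
    cy = min(max(y, 0), cam_h - 1)
    sx = round(cx * (scr_w - 1) / (cam_w - 1))
    sy = round(cy * (scr_h - 1) / (cam_h - 1))
    return sx, sy


def feed_tracker(x, y, button_down, cam_size, screen_size, inject):
    """Convert a tracker position and pass it on as simulated mouse input.

    inject is a hypothetical callback standing in for the operating
    system call that moves the pointer and sets the button state.
    """
    sx, sy = to_screen(x, y, cam_size, screen_size)
    inject(sx, sy, button_down)
    return sx, sy


if __name__ == "__main__":
    # Print the events instead of injecting them into the system
    feed_tracker(160, 120, False, (320, 240), (1024, 768),
                 lambda sx, sy, down: print(sx, sy, down))
```

Because the receiving program only sees ordinary mouse events, it can be tested with a real mouse and needs no changes when the tracker is switched on.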

Q. Functionality

For future developments, we want the gestures to be made with fingers only, so that the interaction becomes more natural. With the current solution, which uses a torch, the task is not so intuitive, and it can become arduous after a while of gesturing because of the webcam's narrow field of view.

The other big constraint is the size of the monitor, as we have to concentrate very hard so that the light does not leave the webcam's field of view. It would be great to have a big screen so that the capturing window could be larger as well.

We think the way to extend this system to a more natural interface is to use a big projection screen, far away from the user (a few meters), so that the narrow field of view of the webcam is no longer a problem. The user can then also make wide gestures, without worrying about whether he/she


Copyright © ARTECH 2004 – 1º Workshop Luso-Galaico de Artes Digitais 12 de Julho de 2004, FC - UL