Texte intégral / Full text (pdf, 20 MiB) - Infoscience - EPFL


3.4. Results<br />

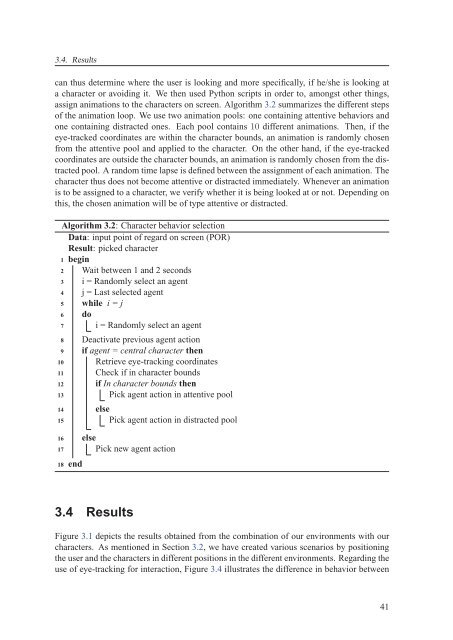

can thus determine where the user is looking and, more specifically, whether he/she is looking at a character or avoiding it. We then used Python scripts to, among other things, assign animations to the characters on screen. Algorithm 3.2 summarizes the steps of the animation loop. We use two animation pools, one containing attentive behaviors and one containing distracted ones, each holding 10 different animations. If the eye-tracked coordinates fall within the character's bounds, an animation is randomly chosen from the attentive pool and applied to the character; if they fall outside those bounds, an animation is randomly chosen from the distracted pool instead. A random delay separates successive animation assignments, so the character does not become attentive or distracted immediately. Whenever an animation is about to be assigned to a character, we check whether that character is being looked at and choose an attentive or a distracted animation accordingly.
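The gaze-to-pool rule described above can be sketched in Python, the scripting language mentioned in the text. This is a minimal illustration under stated assumptions, not the authors' scripts: the rectangular `Bounds` type, the placeholder animation names, and the helpers `pick_animation` and `next_delay` are hypothetical, and the 1 to 2 second delay range is borrowed from Algorithm 3.2.

```python
import random
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Bounds:
    """Hypothetical axis-aligned screen-space box around a character (pixels)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        # True when the point of regard lies inside (or on the edge of) the box.
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Two pools of 10 animations each; the names are placeholders, not real assets.
ATTENTIVE_POOL = [f"attentive_{k}" for k in range(10)]
DISTRACTED_POOL = [f"distracted_{k}" for k in range(10)]


def pick_animation(por: Tuple[float, float], bounds: Bounds,
                   rng: random.Random) -> str:
    """Draw from the attentive pool if the gaze is on the character, else distracted."""
    x, y = por
    pool = ATTENTIVE_POOL if bounds.contains(x, y) else DISTRACTED_POOL
    return rng.choice(pool)


def next_delay(rng: random.Random, low: float = 1.0, high: float = 2.0) -> float:
    """Random pause (seconds) before the next assignment, so reactions are not instant."""
    return rng.uniform(low, high)


if __name__ == "__main__":
    rng = random.Random(0)
    box = Bounds(100, 50, 300, 400)
    print(pick_animation((150, 200), box, rng))  # some "attentive_*" animation
    print(pick_animation((10, 10), box, rng))    # some "distracted_*" animation
```

Passing an explicit `random.Random` instance keeps the choice reproducible for testing; a real engine would read the point of regard from the eye tracker on every assignment.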

Algorithm 3.2: Character behavior selection

Data: input point of regard on screen (POR)

Result: picked character

    begin
        Wait between 1 and 2 seconds
        i = Randomly select an agent
        j = Last selected agent
        while i = j do
            i = Randomly select an agent
        end
        Deactivate previous agent action
        if agent = central character then
            Retrieve eye-tracking coordinates
            Check if in character bounds
            if in character bounds then
                Pick agent action in attentive pool
            else
                Pick agent action in distracted pool
        else
            Pick new agent action
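Algorithm 3.2 can likewise be rendered as a single Python function. This is a sketch under stated assumptions rather than the original implementation: the `Agent` record, the `(x_min, y_min, x_max, y_max)` rectangle, the `get_por` callback standing in for the eye tracker, and the injectable `wait` function are all hypothetical, and "Deactivate previous agent action" is interpreted here as clearing the action of the agent just selected.

```python
import random
import time
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]  # hypothetical (x_min, y_min, x_max, y_max)


@dataclass
class Agent:
    name: str
    bounds: Rect
    actions: Sequence[str]                # generic actions for non-central agents
    current_action: Optional[str] = None


def in_bounds(p: Point, r: Rect) -> bool:
    x, y = p
    x0, y0, x1, y1 = r
    return x0 <= x <= x1 and y0 <= y <= y1


def select_behavior(
    agents: List[Agent],
    last: Optional[int],
    central: int,
    get_por: Callable[[], Point],
    attentive: Sequence[str],
    distracted: Sequence[str],
    rng: random.Random,
    wait: Callable[[float], None] = time.sleep,
) -> int:
    """One pass of the selection loop; returns the index of the picked agent."""
    if len(agents) < 2:
        # With a single agent the "while i = j" loop could never terminate.
        raise ValueError("need at least two agents")
    wait(rng.uniform(1.0, 2.0))           # wait between 1 and 2 seconds
    i = rng.randrange(len(agents))        # randomly select an agent...
    while i == last:                      # ...different from the last selected one
        i = rng.randrange(len(agents))
    agent = agents[i]
    agent.current_action = None           # deactivate previous action (our reading)
    if i == central:
        por = get_por()                   # retrieve eye-tracking coordinates
        pool = attentive if in_bounds(por, agent.bounds) else distracted
        agent.current_action = rng.choice(pool)
    else:
        agent.current_action = rng.choice(agent.actions)
    return i
```

Injecting `wait` and `rng` keeps the function testable; in a running scene one would call it repeatedly, feeding each returned index back in as `last`.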

3.4 Results

Figure 3.1 depicts the results obtained by combining our environments with our characters. As mentioned in Section 3.2, we created various scenarios by placing the user and the characters at different positions in the different environments. Regarding the use of eye-tracking for interaction, Figure 3.4 illustrates the difference in behavior between
