Texte intégral / Full text (pdf, 20 MiB) - Infoscience - EPFL

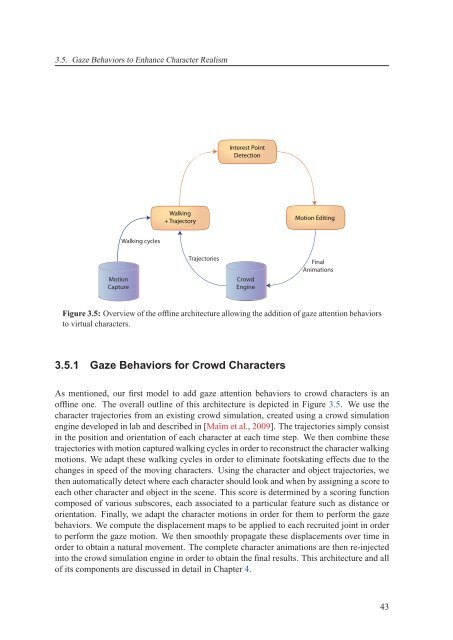

3.5. Gaze Behaviors to Enhance Character Realism

[Figure 3.5 diagram labels: Motion Capture, Walking cycles, Walking + Trajectory, Trajectories, Interest Point Detection, Crowd Engine, Motion Editing, Final Animations]

Figure 3.5: Overview of the offline architecture allowing the addition of gaze attention behaviors to virtual characters.

3.5.1 Gaze Behaviors for Crowd Characters

As mentioned, our first model for adding gaze attention behaviors to crowd characters works offline. The overall outline of this architecture is depicted in Figure 3.5. We use the character trajectories from an existing crowd simulation, created with a crowd simulation engine developed in our laboratory and described in [Maïm et al., 2009]. These trajectories consist simply of the position and orientation of each character at each time step. We then combine them with motion-captured walking cycles to reconstruct the characters' walking motions, and adapt these cycles to eliminate the foot-skating artifacts caused by changes in the characters' speed. Using the character and object trajectories, we then automatically detect where and when each character should look by assigning a score to every other character and object in the scene. This score is computed by a scoring function composed of several subscores, each associated with a particular feature such as distance or orientation. Finally, we adapt the character motions so that the characters perform the gaze behaviors. We compute the displacement maps to be applied to each recruited joint to perform the gaze motion, and then smoothly propagate these displacements over time to obtain a natural movement. The complete character animations are then re-injected into the crowd simulation engine to obtain the final results. This architecture and all of its components are discussed in detail in Chapter 4.
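
The walking-cycle adaptation described above can be illustrated with a minimal Python sketch. It makes two hypothetical assumptions. First, each trajectory sample stores a 2D position and a heading. Second, the distance covered by one captured walking cycle (`stride_length`) is known. The cycle phase then advances in proportion to the distance actually travelled, so a character that slows down also slows its stepping instead of sliding its feet. This distance-based phase scaling is a generic technique chosen for illustration and is not necessarily the adaptation detailed in Chapter 4. All names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class TrajectorySample:
    """One time step of a crowd trajectory (hypothetical layout)."""
    x: float
    y: float
    heading: float  # orientation in radians


def cycle_phases(trajectory, stride_length):
    """Return the walking-cycle phase in [0, 1) for each trajectory sample.

    The phase advances by (distance travelled / stride_length), so the
    stepping rate follows the character's speed and the feet do not skate.
    stride_length: distance covered by one full captured cycle (assumed known).
    """
    phase = 0.0
    phases = [phase]
    for prev, cur in zip(trajectory, trajectory[1:]):
        step = math.hypot(cur.x - prev.x, cur.y - prev.y)
        phase = (phase + step / stride_length) % 1.0
        phases.append(phase)
    return phases


def phase_to_frame(phase, num_frames):
    """Map a phase to a frame index of the captured cycle (hypothetical helper)."""
    return int(phase * num_frames) % num_frames
```

For example, with `stride_length = 2.0`, a character moving 0.5 units per time step advances a quarter cycle per step, while one moving 1.0 unit advances half a cycle. The resulting phase selects the frame of the captured cycle to play.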

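A scoring function of the kind described above might look like the following Python sketch. Every specific in it is a hypothetical illustration choice:

- the two subscores: a linear distance falloff, and a field-of-view term on the angle between the observer's heading and the direction to the target
- their equal weights
- the `max_dist`, `fov` and `threshold` values
- all function names

The subscores actually used are discussed in Chapter 4.

```python
import math


def distance_subscore(dist, max_dist=10.0):
    """1 at the observer, falling linearly to 0 at max_dist (hypothetical shape)."""
    return max(0.0, 1.0 - dist / max_dist)


def orientation_subscore(angle, fov=math.pi / 2):
    """1 when the target is straight ahead, 0 at or beyond half the field of view."""
    return max(0.0, 1.0 - abs(angle) / (fov / 2))


def score(observer, target, weights=(0.5, 0.5)):
    """Weighted sum of subscores; observer is (x, y, heading), target is (x, y)."""
    dx, dy = target[0] - observer[0], target[1] - observer[1]
    dist = math.hypot(dx, dy)
    # Angle between the observer's heading and the target direction, wrapped to [-pi, pi].
    angle = math.atan2(dy, dx) - observer[2]
    angle = math.atan2(math.sin(angle), math.cos(angle))
    w_dist, w_orient = weights
    return w_dist * distance_subscore(dist) + w_orient * orientation_subscore(angle)


def pick_gaze_target(observer, candidates, threshold=0.6):
    """Return the name of the best-scoring candidate, or None if none reaches threshold."""
    best_name, best_score = None, threshold
    for name, position in candidates.items():
        s = score(observer, position)
        if s >= best_score:
            best_name, best_score = name, s
    return best_name
```

Evaluating `pick_gaze_target` for every character at every time step yields both where and when each character looks. A nearby candidate straight ahead wins over one that is equally close but behind the observer, or one straight ahead but far away.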
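
The motion-editing step can be sketched in the same way. In this hypothetical Python version, fixed shares split the total yaw needed to face the gaze target among the recruited joints (spine, neck, head). Each joint's displacement then fades in and out with a smoothstep ramp over the gaze interval, so the offset added to the original motion starts and ends with zero velocity. The joint list, the shares and the smoothstep easing are assumptions for illustration, not the propagation scheme of Chapter 4.

```python
def smoothstep(t):
    """Cubic ease-in/ease-out on [0, 1], with zero slope at both ends."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def gaze_weight(frame, start, end, ramp):
    """Blend weight: rises 0->1 over `ramp` frames after `start`, holds,
    then falls 1->0 over the `ramp` frames before `end` (ramp > 0 assumed)."""
    if frame < start or frame > end:
        return 0.0
    rise = smoothstep((frame - start) / ramp)
    fall = smoothstep((end - frame) / ramp)
    return min(rise, fall)


# Hypothetical share of the total gaze yaw taken by each recruited joint.
JOINT_SHARES = {"spine": 0.2, "neck": 0.3, "head": 0.5}


def displacement_maps(total_yaw, start, end, ramp, num_frames):
    """Per-joint yaw offsets (radians) for every frame, to add to the original motion."""
    maps = {joint: [] for joint in JOINT_SHARES}
    for frame in range(num_frames):
        w = gaze_weight(frame, start, end, ramp)
        for joint, share in JOINT_SHARES.items():
            maps[joint].append(w * share * total_yaw)
    return maps
```

Adding `maps[joint][frame]` to the captured rotation of each joint gives the edited animation. Frames outside `[start, end]` keep the original walking motion unchanged.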