Texte intégral / Full text (pdf, 20 MiB) - Infoscience - EPFL


Chapter 5. Interaction with Virtual Crowds for VRET of Agoraphobia

Finally, we have used this setup together with a gesture recognition algorithm developed by van der Pol [van der Pol, 2009] to increase the interaction between the user and the virtual characters. Gesture recognition relies on tracking the user's hand. We therefore use a data glove fitted with Light-Emitting Diodes (LEDs), which is also part of the Phasespace motion capture system.
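As a rough illustration of the kind of hand-tracking input a gesture recognizer consumes, the sketch below estimates one hand position per frame as the centroid of the glove LEDs that are currently visible. This is an assumption made for illustration only: it is not van der Pol's algorithm, and the convention of reporting an occluded LED as `None`, as well as the `hand_position` function and its `min_visible` threshold, are hypothetical rather than part of the Phasespace software.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def hand_position(leds: Sequence[Optional[Vec3]], min_visible: int = 2) -> Optional[Vec3]:
    """Estimate the hand position as the centroid of the visible glove LEDs.

    `leds` holds one entry per LED on the glove; None marks an occluded
    marker (hypothetical convention, not the motion capture system's API).
    Returns None when fewer than `min_visible` LEDs are visible.
    """
    visible = [p for p in leds if p is not None]
    if len(visible) < min_visible:
        return None  # too few markers for a reliable estimate this frame
    n = len(visible)
    return (
        sum(p[0] for p in visible) / n,
        sum(p[1] for p in visible) / n,
        sum(p[2] for p in visible) / n,
    )


if __name__ == "__main__":
    # Two visible LEDs, one occluded: the estimate ignores the missing marker.
    print(hand_position([(0.0, 1.0, 0.0), None, (2.0, 3.0, 4.0)]))
```

A sequence of such per-frame positions is the sort of trajectory a gesture recognizer could then classify; the threshold of two visible LEDs is an arbitrary choice for the sketch.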

5.1 System Overview

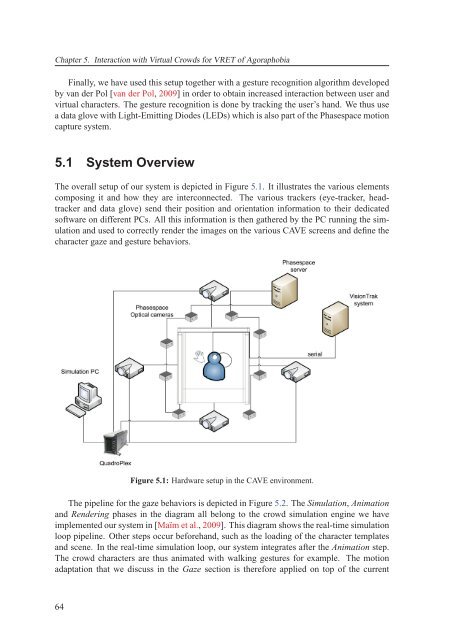

The overall setup of our system is depicted in Figure 5.1, which shows its components and how they are interconnected. The trackers (eye-tracker, head-tracker and data glove) send their position and orientation information to their dedicated software, running on different PCs. All this information is then gathered by the PC running the simulation, which uses it to correctly render the images on the CAVE screens and to define the characters' gaze and gesture behaviors.

Figure 5.1: Hardware setup in the CAVE environment.
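The data flow from the trackers to the simulation PC can be sketched as follows. This is a minimal, hypothetical sketch: the `TrackerSample` record, the tracker names and the `TrackerHub` class are illustrative assumptions, not the actual protocol used between the PCs. It only shows the simulation PC keeping the most recent position and orientation received from each tracker's dedicated software, and taking one snapshot per frame for rendering and character behaviors.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)


@dataclass(frozen=True)
class TrackerSample:
    """One position/orientation update (hypothetical message format)."""
    tracker: str        # e.g. "eye", "head", "glove" (illustrative names)
    timestamp: float    # seconds, as stamped by the sending PC
    position: Vec3
    orientation: Quat


class TrackerHub:
    """Runs on the simulation PC and keeps the latest sample per tracker."""

    def __init__(self) -> None:
        self._latest: Dict[str, TrackerSample] = {}

    def receive(self, sample: TrackerSample) -> None:
        # Drop out-of-order updates so a late packet never overwrites newer data.
        current = self._latest.get(sample.tracker)
        if current is None or sample.timestamp >= current.timestamp:
            self._latest[sample.tracker] = sample

    def latest(self, tracker: str) -> Optional[TrackerSample]:
        return self._latest.get(tracker)

    def frame_inputs(self) -> Dict[str, TrackerSample]:
        """Snapshot taken once per frame by the rendering and behavior code."""
        return dict(self._latest)


if __name__ == "__main__":
    hub = TrackerHub()
    hub.receive(TrackerSample("head", 1.00, (0.0, 1.7, 0.0), (1.0, 0.0, 0.0, 0.0)))
    # Older timestamp: treated as stale and ignored.
    hub.receive(TrackerSample("head", 0.90, (0.5, 1.7, 0.0), (1.0, 0.0, 0.0, 0.0)))
    print(hub.latest("head").position)  # (0.0, 1.7, 0.0)
```

Keeping only the latest sample per tracker lets each tracker PC send updates at its own rate while the simulation reads a consistent snapshot each frame.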

The pipeline for the gaze behaviors is depicted in Figure 5.2. The Simulation, Animation and Rendering phases in the diagram all belong to the crowd simulation engine in which we have implemented our system [Maïm et al., 2009]. The diagram shows the real-time simulation loop; other steps, such as loading the character templates and the scene, occur beforehand. Within the real-time simulation loop, our system is integrated after the Animation step.
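The ordering of the steps in the real-time loop described above can be sketched as follows. This is a hypothetical outline: the `Character` class, the dictionary-based pose, the joint names and the `gaze_adjust` function are illustrative assumptions, not the crowd engine's API. It only shows that a step placed after Animation modifies the pose that Animation has just produced (here a simplified walk cycle) instead of replacing it, before Rendering.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Pose = Dict[str, float]  # joint name -> angle in degrees (simplified)


@dataclass
class Character:
    name: str
    time: float = 0.0
    pose: Pose = field(default_factory=dict)


def simulate(chars: List[Character], dt: float) -> None:
    # Simulation: advance each character's clock (navigation omitted).
    for c in chars:
        c.time += dt


def animate(chars: List[Character]) -> None:
    # Animation: base motion from a simplified walk cycle, recomputed every frame.
    for c in chars:
        phase = math.sin(2.0 * math.pi * c.time)
        c.pose = {"hip_swing": 10.0 * phase, "head_yaw": 2.0 * phase}


def gaze_adjust(chars: List[Character], target_yaw: Dict[str, float]) -> None:
    # Gaze step (after Animation): add a head rotation on top of the animated
    # pose, leaving the other joints (the walk) untouched.
    for c in chars:
        c.pose["head_yaw"] += target_yaw.get(c.name, 0.0)


def render(chars: List[Character]) -> List[Tuple[str, Pose]]:
    # Rendering stand-in: return a copy of each character's final pose.
    return [(c.name, dict(c.pose)) for c in chars]


def frame(chars: List[Character], dt: float,
          target_yaw: Dict[str, float]) -> List[Tuple[str, Pose]]:
    simulate(chars, dt)
    animate(chars)
    gaze_adjust(chars, target_yaw)
    return render(chars)


if __name__ == "__main__":
    crowd = [Character("a"), Character("b")]
    for name, pose in frame(crowd, 0.25, {"a": 30.0}):
        print(name, pose)
```

Because `animate` rebuilds the pose each frame, the gaze offset is reapplied on fresh animation data and does not accumulate from one frame to the next.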

The crowd characters are thus already animated, for example with walking motions. The motion adaptation that we discuss in the Gaze section is therefore applied on top of the current
