TGQR 2010Q4 Report.pdf - Teragridforum.org

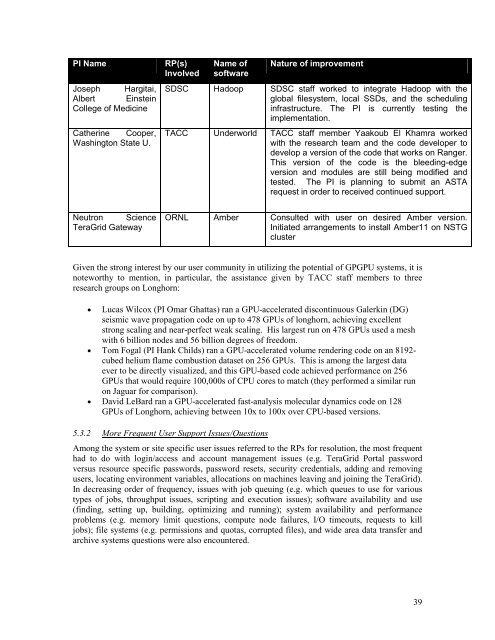

| PI Name | RP(s) Involved | Name of Software | Nature of Improvement |
|---|---|---|---|
| Joseph Hargitai, Albert Einstein College of Medicine | SDSC | Hadoop | SDSC staff worked to integrate Hadoop with the global filesystem, local SSDs, and the scheduling infrastructure. The PI is currently testing the implementation. |
| Catherine Cooper, Washington State U. | TACC | Underworld | TACC staff member Yaakoub El Khamra worked with the research team and the code developer to develop a version of the code that works on Ranger. This is the bleeding-edge version of the code, and its modules are still being modified and tested. The PI plans to submit an ASTA request to receive continued support. |
| Neutron Science TeraGrid Gateway | ORNL | Amber | Consulted with the user on the desired Amber version. Initiated arrangements to install Amber11 on the NSTG cluster. |

Given our user community's strong interest in exploiting the potential of GPGPU systems, the assistance TACC staff members gave to three research groups on Longhorn is particularly noteworthy:

• Lucas Wilcox (PI Omar Ghattas) ran a GPU-accelerated discontinuous Galerkin (DG) seismic wave propagation code on up to 478 GPUs of Longhorn, achieving excellent strong scaling and near-perfect weak scaling. His largest run, on 478 GPUs, used a mesh with 6 billion nodes and 56 billion degrees of freedom.

• Tom Fogal (PI Hank Childs) ran a GPU-accelerated volume rendering code on an 8192³ helium flame combustion dataset using 256 GPUs. This is among the largest datasets ever visualized directly, and on 256 GPUs the code achieved performance that would require hundreds of thousands of CPU cores to match (the team performed a similar run on Jaguar for comparison).

• David LeBard ran a GPU-accelerated fast-analysis molecular dynamics code on 128 GPUs of Longhorn, achieving speedups of 10x to 100x over CPU-based versions.

5.3.2 More Frequent User Support Issues/Questions

Among the system- or site-specific user issues referred to the RPs for resolution, the most frequent concerned login/access and account management (e.g., TeraGrid Portal passwords versus resource-specific passwords, password resets, security credentials, adding and removing users, locating environment variables, and allocations on machines leaving and joining the TeraGrid). Other issues encountered, in decreasing order of frequency, involved job queuing (e.g., which queues to use for various types of jobs, throughput, and scripting and execution problems); software availability and use (finding, setting up, building, optimizing, and running software); system availability and performance (e.g., memory limit questions, compute node failures, I/O timeouts, and requests to kill jobs); file systems (e.g., permissions and quotas, corrupted files); and wide-area data transfer and archive systems.
