TGQR 2010Q4 Report.pdf - Teragridforum.org

TGQR 2010Q4 Report.pdf - Teragridforum.org

TGQR 2010Q4 Report.pdf - Teragridforum.org

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

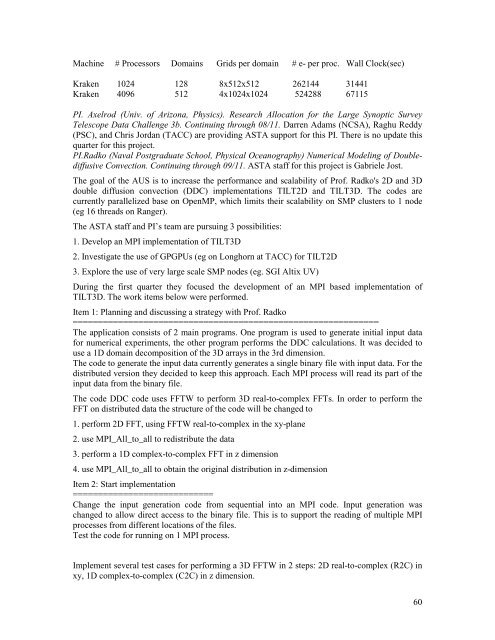

Machine # Processors Domains Grids per domain # e- per proc. Wall Clock(sec)<br />

Kraken 1024 128 8x512x512 262144 31441<br />

Kraken 4096 512 4x1024x1024 524288 67115<br />

PI. Axelrod (Univ. of Arizona, Physics). Research Allocation for the Large Synoptic Survey<br />

Telescope Data Challenge 3b. Continuing through 08/11. Darren Adams (NCSA), Raghu Reddy<br />

(PSC), and Chris Jordan (TACC) are providing ASTA support for this PI. There is no update this<br />

quarter for this project.<br />

PI.Radko (Naval Postgraduate School, Physical Oceanography) Numerical Modeling of Doublediffusive<br />

Convection. Continuing through 09/11. ASTA staff for this project is Gabriele Jost.<br />

The goal of the AUS is to increase the performance and scalability of Prof. Radko's 2D and 3D<br />

double diffusion convection (DDC) implementations TILT2D and TILT3D. The codes are<br />

currently parallelized base on OpenMP, which limits their scalability on SMP clusters to 1 node<br />

(eg 16 threads on Ranger).<br />

The ASTA staff and PI’s team are pursuing 3 possibilities:<br />

1. Develop an MPI implementation of TILT3D<br />

2. Investigate the use of GPGPUs (eg on Longhorn at TACC) for TILT2D<br />

3. Explore the use of very large scale SMP nodes (eg. SGI Altix UV)<br />

During the first quarter they focused the development of an MPI based implementation of<br />

TILT3D. The work items below were performed.<br />

Item 1: Planning and discussing a strategy with Prof. Radko<br />

=============================================================<br />

The application consists of 2 main programs. One program is used to generate initial input data<br />

for numerical experiments, the other program performs the DDC calculations. It was decided to<br />

use a 1D domain decomposition of the 3D arrays in the 3rd dimension.<br />

The code to generate the input data currently generates a single binary file with input data. For the<br />

distributed version they decided to keep this approach. Each MPI process will read its part of the<br />

input data from the binary file.<br />

The code DDC code uses FFTW to perform 3D real-to-complex FFTs. In order to perform the<br />

FFT on distributed data the structure of the code will be changed to<br />

1. perform 2D FFT, using FFTW real-to-complex in the xy-plane<br />

2. use MPI_All_to_all to redistribute the data<br />

3. perform a 1D complex-to-complex FFT in z dimension<br />

4. use MPI_All_to_all to obtain the original distribution in z-dimension<br />

Item 2: Start implementation<br />

============================<br />

Change the input generation code from sequential into an MPI code. Input generation was<br />

changed to allow direct access to the binary file. This is to support the reading of multiple MPI<br />

processes from different locations of the files.<br />

Test the code for running on 1 MPI process.<br />

Implement several test cases for performing a 3D FFTW in 2 steps: 2D real-to-complex (R2C) in<br />

xy, 1D complex-to-complex (C2C) in z dimension.<br />

60