Critical Thinking and Intelligence Analysis

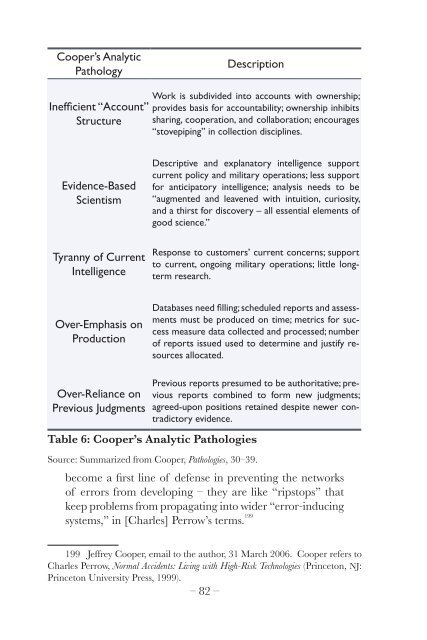

| Cooper’s Analytic Pathology | Description |
| --- | --- |
| Inefficient “Account” Structure | Work is subdivided into accounts with ownership; provides basis for accountability; ownership inhibits sharing, cooperation, and collaboration; encourages “stovepiping” in collection disciplines. |
| Evidence-Based Scientism | Descriptive and explanatory intelligence support current policy and military operations; less support for anticipatory intelligence; analysis needs to be “augmented and leavened with intuition, curiosity, and a thirst for discovery – all essential elements of good science.” |
| Tyranny of Current Intelligence | Response to customers’ current concerns; support to current, ongoing military operations; little long-term research. |
| Over-Emphasis on Production | Databases need filling; scheduled reports and assessments must be produced on time; metrics for success measure data collected and processed; number of reports issued used to determine and justify resources allocated. |
| Over-Reliance on Previous Judgments | Previous reports presumed to be authoritative; previous reports combined to form new judgments; agreed-upon positions retained despite newer contradictory evidence. |

Table 6: Cooper’s Analytic Pathologies<br />

Source: Summarized from Cooper, Pathologies, 30–39.<br />

become a first line of defense in preventing the networks of errors from developing – they are like “ripstops” that keep problems from propagating into wider “error-inducing systems,” in [Charles] Perrow’s terms. 199

199 Jeffrey Cooper, email to the author, 31 March 2006. Cooper refers to Charles Perrow, Normal Accidents: Living with High-Risk Technologies (Princeton, NJ: Princeton University Press, 1999).
