Automotive User Interfaces and Interactive Vehicular Applications


Natural, Intuitive Hand Gestures - A Substitute for Traditional DV-Control?

Research approach

• Driving and ADAS/IVIS operation are complex visual-auditory tasks; overload and distraction impair driving safety

→ novel solutions for future vehicular interfaces are required to keep the driver's workload low

• Motion controllers (Wii Remote, PlayStation Move, Kinect) have revolutionized interaction with computers (games)

→ replacing button/stick-based interaction with natural interaction based on intuitive gestures and body postures

• Mapping is "easy" for games

...but is a highly challenging task for in-car UIs

→ body parts to be used? (hand, arm, head, back), region in the car? (wheel, controls, gearshift), what are intuitive poses/gestures?, which application(s) to control?, mapping command ↔ gesture?

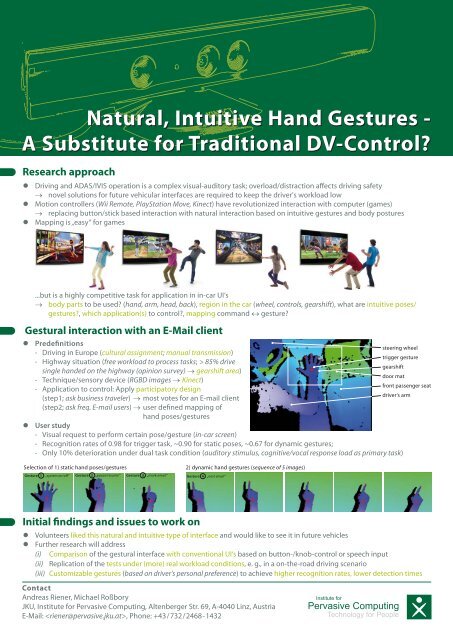

Gestural interaction with an E-Mail client

• Predefinitions

- Driving in Europe (cultural assignment; manual transmission)

- Highway situation (free workload capacity to process tasks; > 85% drive single-handed on the highway according to an opinion survey → gearshift area)

- Technique/sensory device (RGBD images → Kinect)

- Application to control: apply participatory design

(step 1: ask business travelers) → most votes for an e-mail client

(step 2: ask frequent e-mail users) → user-defined mapping of hand poses/gestures

[Image labels: gearshift, door mat]

• User study

- Visual request to perform a certain pose/gesture (in-car screen)

- Recognition rates of 0.98 for the trigger task, ~0.90 for static poses, ~0.67 for dynamic gestures

- Only 10% deterioration under the dual-task condition (auditory stimulus, cognitive/vocal response load as primary task)
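The rates and the dual-task deterioration reported above can be illustrated with a short calculation. This is a minimal sketch, not the authors' evaluation code: the poster does not say whether the 10% figure is a relative or an absolute drop, so the sketch assumes a relative one, and the trial counts, `TrialLog`, and `relative_deterioration` are hypothetical names and values chosen only to make the arithmetic easy to follow.

```python
from dataclasses import dataclass


@dataclass
class TrialLog:
    """Outcome counts for one gesture class under one condition (hypothetical)."""
    correct: int
    total: int

    @property
    def recognition_rate(self) -> float:
        # Fraction of requested gestures that were recognized correctly.
        return self.correct / self.total


def relative_deterioration(baseline: float, dual_task: float) -> float:
    """Fractional drop of the dual-task rate relative to the baseline rate."""
    return (baseline - dual_task) / baseline


# Hypothetical counts, not data from the study.
single = TrialLog(correct=90, total=100)
dual = TrialLog(correct=81, total=100)

drop = relative_deterioration(single.recognition_rate, dual.recognition_rate)
print(f"single task: {single.recognition_rate:.2f}")
print(f"dual task:   {dual.recognition_rate:.2f}")
print(f"relative deterioration: {drop:.0%}")
```

Under the alternative reading (an absolute drop of 10 percentage points), the same baseline of 0.90 would correspond to a dual-task rate of 0.80 instead of 0.81.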

Selection of 1) static hand poses/gestures 2) dynamic hand gestures (sequence of 5 images)

- Gesture 1 "system on/off"

- Gesture 2 "pause/resume"

- Gesture 3 "mark email"

- Gesture 4 "next email"
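The command ↔ gesture mapping listed above could be wired to an e-mail client roughly as follows. This is a sketch under stated assumptions: the four gesture names come from the poster, but `EmailClientController`, its state fields, and the rule that gestures are ignored while the system is off or paused are hypothetical design choices, since the poster does not describe the software behind the prototype.

```python
from enum import Enum


class Gesture(Enum):
    SYSTEM_ON_OFF = 1   # Gesture 1 on the poster
    PAUSE_RESUME = 2    # Gesture 2
    MARK_EMAIL = 3      # Gesture 3
    NEXT_EMAIL = 4      # Gesture 4


class EmailClientController:
    """Hypothetical controller that turns recognized gestures into commands."""

    def __init__(self, inbox_size):
        self.inbox_size = inbox_size
        self.active = False   # toggled by the on/off gesture
        self.paused = False   # toggled by the pause/resume gesture
        self.current = 0      # index of the e-mail in focus
        self.marked = set()   # indices of marked e-mails

    def handle(self, gesture):
        if gesture is Gesture.SYSTEM_ON_OFF:
            self.active = not self.active
            self.paused = False
            return
        if not self.active:
            return  # assumption: ignore all other gestures while switched off
        if gesture is Gesture.PAUSE_RESUME:
            self.paused = not self.paused
        elif self.paused:
            return  # assumption: only "resume" is accepted while paused
        elif gesture is Gesture.MARK_EMAIL:
            self.marked.add(self.current)
        elif gesture is Gesture.NEXT_EMAIL:
            # Stop at the last e-mail instead of wrapping around.
            self.current = min(self.current + 1, self.inbox_size - 1)


controller = EmailClientController(inbox_size=3)
for g in (Gesture.NEXT_EMAIL, Gesture.SYSTEM_ON_OFF,
          Gesture.NEXT_EMAIL, Gesture.MARK_EMAIL):
    controller.handle(g)
print(controller.current, sorted(controller.marked))
```

Keeping the mapping in one dispatch method means a user-defined or customized gesture set only changes the recognizer's output labels, not the client logic.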

Initial findings and issues to work on

• Volunteers liked this natural and intuitive type of interface and would like to see it in future vehicles

• Further research will address

(i) Comparison of the gestural interface with conventional UIs based on button/knob control or speech input

(ii) Replication of the tests under more realistic workload conditions, e.g., in an on-the-road driving scenario

(iii) Customizable gestures (based on the driver's personal preference) to achieve higher recognition rates and lower detection times

Contact

Andreas Riener, Michael Roßbory

JKU, Institute for Pervasive Computing, Altenberger Str. 69, A-4040 Linz, Austria

E-Mail: , Phone: +43 / 732 / 2468 - 1432<br />

Institute for Pervasive Computing, Technology for People

[Image labels: steering wheel, trigger gesture, front passenger seat, driver's arm]