Annual Meeting - SCEC.org

SCEC Research Accomplishments | Report

(ANSS) catalog in California, the Japan Meteorological Agency (JMA) catalog for Japan, and the Global Centroid Moment Tensor (CMT) catalog in the Western Pacific testing region. The CMT catalog is also used for global testing.

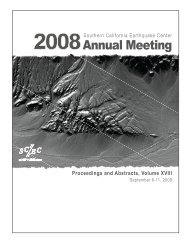

Today, 224 forecasting experiments are being tested worldwide (Figure 79). Two regions of current interest are New Zealand, where 15 models were being tested by CSEP at the time of the 4 Sept 2010 Darfield earthquake (M7.1), and Japan, where 91 models were under CSEP testing at the time of the 11 Mar 2011 Tohoku earthquake (M9.0). The Darfield and Tohoku earthquake sequences are being observed by high-quality seismic networks, and they may lead to a better understanding of how such sequences could unfold along other active zones, such as those in the western United States.

The CSEP testing procedures follow strict<br />

“rules of the game” that adhere to the<br />

principle of reproducibility: the testing<br />

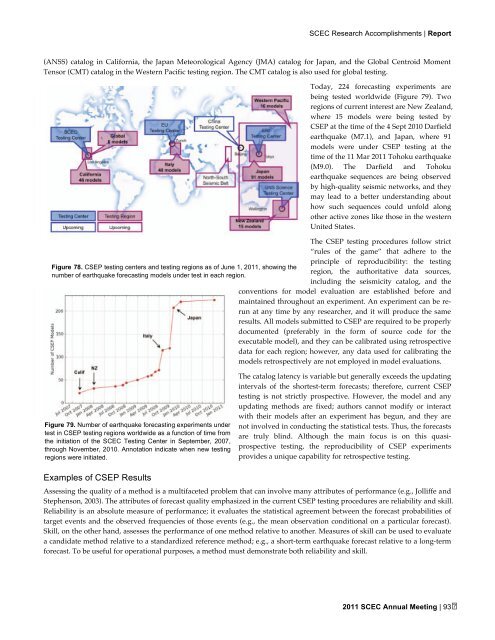

Figure 78. CSEP testing centers and testing regions as of June 1, 2011, showing the<br />

region, the authoritative data sources,<br />

number of earthquake forecasting models under test in each region.<br />

including the seismicity catalog, and the<br />

conventions for model evaluation are established before and<br />

maintained throughout an experiment. An experiment can be rerun<br />

at any time by any researcher, and it will produce the same<br />

results. All models submitted to CSEP are required to be properly<br />

documented (preferably in the form of source code for the<br />

executable model), and they can be calibrated using retrospective<br />

data for each region; however, any data used for calibrating the<br />

models retrospectively are not employed in model evaluations.<br />
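The separation between calibration data and evaluation data described above can be illustrated with a minimal Python sketch. It is not CSEP software: the `Event` record, the `split_catalog` helper, the cutoff date, and the toy catalog are all hypothetical and serve only to show that events used for calibration never appear in the evaluation set, and that the split is deterministic and therefore repeatable.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Event:
    """Hypothetical catalog entry: origin date and magnitude only."""
    day: date
    magnitude: float


def split_catalog(events, experiment_start):
    """Split a catalog at the experiment start date.

    Events before the start date may be used to calibrate a model;
    only events on or after it are used to evaluate the forecasts.
    """
    calibration = [e for e in events if e.day < experiment_start]
    evaluation = [e for e in events if e.day >= experiment_start]
    return calibration, evaluation


# Toy catalog with made-up events (not taken from any real catalog).
catalog = [
    Event(date(2008, 5, 2), 5.3),
    Event(date(2009, 11, 17), 6.0),
    Event(date(2010, 6, 30), 5.8),
    Event(date(2011, 1, 9), 6.4),
]

# Hypothetical experiment start date, fixed before the experiment begins.
calibration, evaluation = split_catalog(catalog, date(2010, 1, 1))
```

Because the split depends only on the fixed start date and the catalog, rerunning it on the same inputs yields the same two sets, which is the property the reproducibility principle relies on.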

Figure 79. Number of earthquake forecasting experiments under test in CSEP testing regions worldwide as a function of time from the initiation of the SCEC Testing Center in September 2007 through November 2010. Annotations indicate when new testing regions were initiated.

Examples of CSEP Results<br />

The catalog latency is variable but generally exceeds the updating intervals of the shortest-term forecasts; therefore, current CSEP testing is not strictly prospective. However, the model and any updating methods are fixed; authors cannot modify or interact with their models after an experiment has begun, and they are not involved in conducting the statistical tests. Thus, the forecasts are truly blind. Although the main focus is on this quasiprospective testing, the reproducibility of CSEP experiments provides a unique capability for retrospective testing.

Assessing the quality of a method is a multifaceted problem that can involve many attributes of performance (e.g., Jolliffe and Stephenson, 2003). The attributes of forecast quality emphasized in the current CSEP testing procedures are reliability and skill. Reliability is an absolute measure of performance; it evaluates the statistical agreement between the forecast probabilities of target events and the observed frequencies of those events (e.g., the mean observation conditional on a particular forecast). Skill, on the other hand, assesses the performance of one method relative to another. Measures of skill can be used to evaluate a candidate method relative to a standardized reference method; e.g., a short-term earthquake forecast relative to a long-term forecast. To be useful for operational purposes, a method must demonstrate both reliability and skill.
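The distinction between reliability and skill can be made concrete with a small Python sketch. It is a generic illustration under stated assumptions, not the set of statistical tests CSEP actually runs: the function names, the binning scheme, the choice of average log-likelihood gain as a skill measure, and the toy forecasts and outcomes are all hypothetical.

```python
import math


def reliability_table(probs, outcomes, n_bins=5):
    """Compare forecast probabilities with observed frequencies.

    Forecasts are grouped into equal-width probability bins. For each
    non-empty bin the result holds (mean forecast probability,
    observed event frequency, number of forecasts). A reliable
    forecast has the first two values close to each other.
    """
    bins = [[] for _ in range(n_bins)]
    for p, o in zip(probs, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, o))
    table = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            freq = sum(o for _, o in members) / len(members)
            table.append((mean_p, freq, len(members)))
    return table


def log_likelihood(probs, outcomes):
    """Bernoulli log-likelihood; probabilities must lie strictly in (0, 1)."""
    return sum(math.log(p) if o else math.log(1.0 - p)
               for p, o in zip(probs, outcomes))


def skill_score(candidate, reference, outcomes):
    """Average log-likelihood gain of the candidate over the reference.

    Positive values mean the candidate explains the observations
    better than the reference; zero means no difference.
    """
    gain = log_likelihood(candidate, outcomes) - log_likelihood(reference, outcomes)
    return gain / len(outcomes)


# Toy data (made up): 1 = target event observed, 0 = not observed.
outcomes = [1, 0, 0, 0, 0, 1, 1, 1, 1, 0]
candidate = [0.2] * 5 + [0.8] * 5   # hypothetical short-term forecast
reference = [0.5] * 10              # hypothetical time-independent reference

table = reliability_table(candidate, outcomes)
score = skill_score(candidate, reference, outcomes)
```

In this toy case the candidate is reliable (events occur in about 20% of the cases forecast at 0.2 and about 80% of those forecast at 0.8) and also skillful, since its log-likelihood gain over the reference is positive. The two properties are independent: a forecast that always issued the long-run event rate would be reliable yet would show no skill relative to a reference that does the same.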

2011 SCEC Annual Meeting | 93