The Development of Neural Network Based System Identification ...


CHAPTER 4 NEURAL NETWORK BASED SYSTEM IDENTIFICATION

$$
\hat{y}_i(t) = g_i\left(\sum_{h=1}^{H} W2_{ih}\, V_h(t) + B2_i\right) + g_i\left(\sum_{j=1}^{m} W3_{ij}\, X_j(t)\right) \quad \text{for } i = 1, 2, 3, \ldots, n \tag{4.7}
$$

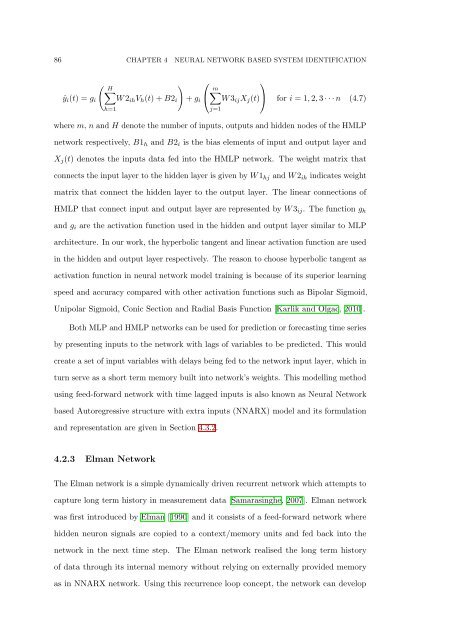

where $m$, $n$ and $H$ denote the number of inputs, outputs and hidden nodes of the HMLP network respectively, $B1_h$ and $B2_i$ are the bias elements of the input and output layers, and $X_j(t)$ denotes the input data fed into the HMLP network. The weight matrix that connects the input layer to the hidden layer is given by $W1_{hj}$, and $W2_{ih}$ is the weight matrix that connects the hidden layer to the output layer. The linear connections of the HMLP that connect the input and output layers are represented by $W3_{ij}$. The functions $g_h$ and $g_i$ are the activation functions used in the hidden and output layers, as in the MLP architecture. In our work, the hyperbolic tangent and linear activation functions are used in the hidden and output layers respectively. The hyperbolic tangent is chosen as the activation function for neural network model training because of its superior learning speed and accuracy compared with other activation functions such as Bipolar Sigmoid, Unipolar Sigmoid, Conic Section and Radial Basis Function [Karlik and Olgac, 2010].
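
As an illustration of Eq. (4.7), the following minimal sketch computes one HMLP prediction in plain Python. It assumes that the hidden-layer outputs take the usual MLP form, V_h(t) = g_h(sum over j of W1_hj X_j(t) + B1_h), which is inferred from the definitions above because the defining equation is not reproduced here. The function name `hmlp_forward` and the list-of-lists weight layout are hypothetical choices made for this example only.

```python
import math


def hmlp_forward(x, W1, B1, W2, B2, W3):
    """One HMLP prediction following Eq. (4.7) (illustrative sketch).

    x  : list of m inputs X_j(t)
    W1 : H x m input-to-hidden weights, B1 : list of H bias elements
    W2 : n x H hidden-to-output weights, B2 : list of n bias elements
    W3 : n x m direct linear input-to-output weights
    """
    # Hidden-layer outputs V_h(t) with g_h = tanh.
    # ASSUMPTION: V_h(t) = tanh(sum_j W1[h][j] * x[j] + B1[h]).
    V = [math.tanh(sum(w * xj for w, xj in zip(row, x)) + b)
         for row, b in zip(W1, B1)]

    y_hat = []
    for i in range(len(W2)):
        # First term of Eq. (4.7): weighted hidden outputs plus bias.
        nonlinear = sum(w * v for w, v in zip(W2[i], V)) + B2[i]
        # Second term of Eq. (4.7): direct linear input-output connections.
        linear = sum(w * xj for w, xj in zip(W3[i], x))
        # The output activation g_i is linear, so g_i(a) = a for both terms.
        y_hat.append(nonlinear + linear)
    return y_hat
```

Setting every entry of `W3` to zero removes the direct linear connections, so the same function then evaluates a standard MLP with a linear output layer.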

Both MLP and HMLP networks can be used for time series prediction or forecasting by presenting the network with lagged values of the variables to be predicted. This creates a set of delayed input variables that are fed to the network input layer, which in turn serve as a short-term memory built into the network's weights. This modelling method, which uses a feed-forward network with time-lagged inputs, is also known as the Neural Network based Autoregressive structure with extra inputs (NNARX) model; its formulation and representation are given in Section 4.3.2.
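
The idea of time-lagged inputs can be sketched as follows. This is only an illustration under stated assumptions: a single output series `y`, a single extra input series `u`, hypothetical lag orders `n_y` and `n_u`, and no additional input delay. The helper name `build_lagged_regressors` is made up for this example, and the exact regressor structure used for the NNARX model is the one defined in Section 4.3.2, which may differ.

```python
def build_lagged_regressors(y, u, n_y, n_u):
    """Return (inputs, targets) for one-step-ahead prediction.

    Each input row is [y(t-1), ..., y(t-n_y), u(t-1), ..., u(t-n_u)]
    and the matching target is y(t).
    """
    if len(y) != len(u):
        raise ValueError("y and u must have the same length")

    start = max(n_y, n_u)  # first time index with a full set of lags
    inputs, targets = [], []
    for t in range(start, len(y)):
        past_y = [y[t - k] for k in range(1, n_y + 1)]  # lagged outputs
        past_u = [u[t - k] for k in range(1, n_u + 1)]  # lagged extra inputs
        inputs.append(past_y + past_u)
        targets.append(y[t])
    return inputs, targets
```

Each row of `inputs` can then be presented to the input layer of an MLP or HMLP network, with the corresponding entry of `targets` as the training target.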

4.2.3 Elman Network

The Elman network is a simple dynamically driven recurrent network which attempts to capture long-term history in measurement data [Samarasinghe, 2007]. It was first introduced by Elman [1990] and consists of a feed-forward network in which the hidden neuron signals are copied to context (memory) units and fed back into the network at the next time step. The Elman network realises the long-term history of data through its internal memory without relying on externally provided memory as in the NNARX network. Using this recurrence loop concept, the network can develop