The Development of Neural Network Based System Identification ...


4.3 SYSTEM IDENTIFICATION WITH NEURAL NETWORK

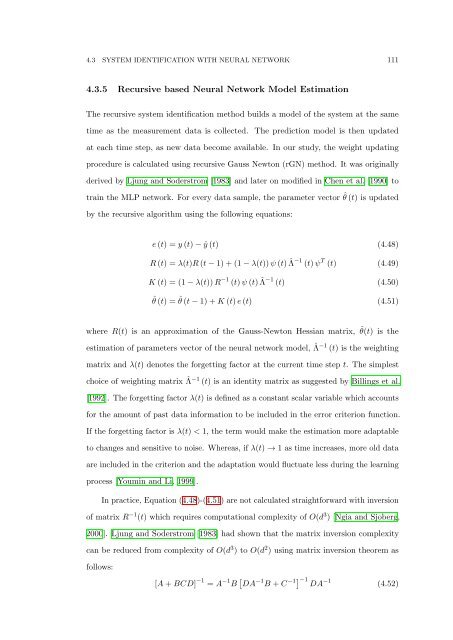

4.3.5 Recursive based Neural Network Model Estimation

The recursive system identification method builds a model of the system at the same time as the measurement data are collected. The prediction model is then updated at each time step, as new data become available. In our study, the weights are updated using the recursive Gauss-Newton (rGN) method. It was originally derived by Ljung and Soderstrom [1983] and later modified by Chen et al. [1990] to train the MLP network. For every data sample, the parameter vector $\hat{\theta}(t)$ is updated by the recursive algorithm using the following equations:

$$e(t) = y(t) - \hat{y}(t) \tag{4.48}$$

$$R(t) = \lambda(t)\,R(t-1) + \bigl(1 - \lambda(t)\bigr)\,\psi(t)\,\hat{\Lambda}^{-1}(t)\,\psi^{T}(t) \tag{4.49}$$

$$K(t) = \bigl(1 - \lambda(t)\bigr)\,R^{-1}(t)\,\psi(t)\,\hat{\Lambda}^{-1}(t) \tag{4.50}$$

$$\hat{\theta}(t) = \hat{\theta}(t-1) + K(t)\,e(t) \tag{4.51}$$

where $R(t)$ is an approximation of the Gauss-Newton Hessian matrix, $\hat{\theta}(t)$ is the estimate of the parameter vector of the neural network model, $\hat{\Lambda}^{-1}(t)$ is the weighting matrix and $\lambda(t)$ denotes the forgetting factor at the current time step $t$. The simplest choice of weighting matrix $\hat{\Lambda}^{-1}(t)$ is the identity matrix, as suggested by Billings et al. [1992]. The forgetting factor $\lambda(t)$ is defined as a constant scalar that controls how much past data information is included in the error criterion function. If $\lambda(t) < 1$, older data are discounted, which makes the estimation more adaptable to changes but also more sensitive to noise. Conversely, if $\lambda(t) \to 1$ as time increases, more old data are included in the criterion and the estimate fluctuates less during the learning process [Youmin and Li, 1999].
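The update in Equations (4.48)-(4.51) can be sketched for a toy problem as follows. This is a minimal illustration and not the implementation used in the study: it assumes a scalar output, an identity weighting ($\hat{\Lambda}^{-1} = 1$), a constant forgetting factor, an initial matrix $R(0) = I$, and a linear-in-parameters model so that $\psi(t)$ is simply the regressor. For an MLP, $\psi(t)$ would presumably be the gradient of the network output with respect to the weights. The names `solve`, `rgn_step` and `demo` are hypothetical.

```python
import random


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (O(d^3))."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def rgn_step(theta, R, psi, y, y_hat, lam):
    """One rGN update, assuming scalar output and identity weighting."""
    d = len(theta)
    e = y - y_hat                                            # (4.48)
    R_new = [[lam * R[i][j] + (1 - lam) * psi[i] * psi[j]    # (4.49)
              for j in range(d)] for i in range(d)]
    K = [(1 - lam) * v for v in solve(R_new, psi)]           # (4.50)
    theta_new = [theta[i] + K[i] * e for i in range(d)]      # (4.51)
    return theta_new, R_new, e


def demo(steps=2000, lam=0.99, seed=0):
    """Identify a noise-free toy system y = 2*u1 - u2 sample by sample."""
    rng = random.Random(seed)
    true_theta = [2.0, -1.0]
    theta = [0.0, 0.0]
    R = [[1.0, 0.0], [0.0, 1.0]]  # assumed initial value R(0) = I
    for _ in range(steps):
        psi = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        y = sum(a * b for a, b in zip(true_theta, psi))
        y_hat = sum(a * b for a, b in zip(theta, psi))
        theta, R, _ = rgn_step(theta, R, psi, y, y_hat, lam)
    return theta


if __name__ == "__main__":
    print(demo())
```

With a constant forgetting factor, unrolling (4.49) shows that a sample that is $k$ steps old enters $R(t)$ with weight $(1-\lambda)\lambda^{k}$, which is why a smaller $\lambda$ shortens the effective memory of the estimator. Note that this direct form solves a $d \times d$ linear system for every sample.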

In practice, Equations (4.48)-(4.51) are not evaluated directly, because explicitly inverting the matrix $R(t)$ has a computational complexity of $O(d^3)$ [Ngia and Sjoberg, 2000]. Ljung and Soderstrom [1983] showed that this complexity can be reduced from $O(d^3)$ to $O(d^2)$ by using the matrix inversion lemma as follows:

$$[A + BCD]^{-1} = A^{-1} - A^{-1}B\,\bigl[DA^{-1}B + C^{-1}\bigr]^{-1}DA^{-1} \tag{4.52}$$
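As an illustration of how (4.52) removes the explicit inversion, one can apply it to (4.49) with $A = \lambda R(t-1)$, $B = \psi(t)$, $C = 1 - \lambda$ and $D = \psi^{T}(t)$. Under the assumptions of a scalar output, identity weighting and a constant forgetting factor, this gives a recursion for $P(t) = R^{-1}(t)$:

$$P(t) = \frac{1}{\lambda}\left[P(t-1) - \frac{P(t-1)\,\psi(t)\,\psi^{T}(t)\,P(t-1)}{\lambda/(1-\lambda) + \psi^{T}(t)\,P(t-1)\,\psi(t)}\right]$$

The sketch below is a numerical check of this derivation, not the form used in the study. It propagates $R(t)$ and $P(t)$ side by side and measures how far $P(t)R(t)$ is from the identity. The function names and the initial value $R(0) = P(0) = I$ are hypothetical choices.

```python
import random


def update_R(R, psi, lam):
    """Equation (4.49) with identity weighting and scalar output."""
    d = len(psi)
    return [[lam * R[i][j] + (1 - lam) * psi[i] * psi[j] for j in range(d)]
            for i in range(d)]


def update_P(P, psi, lam):
    """Propagate P = R^{-1} via the matrix inversion lemma in O(d^2)."""
    d = len(psi)
    # P is symmetric, so psi^T P equals (P psi)^T and one product suffices.
    P_psi = [sum(P[i][j] * psi[j] for j in range(d)) for i in range(d)]
    denom = lam / (1 - lam) + sum(psi[i] * P_psi[i] for i in range(d))
    return [[(P[i][j] - P_psi[i] * P_psi[j] / denom) / lam for j in range(d)]
            for i in range(d)]


def max_identity_error(d=3, steps=50, lam=0.95, seed=1):
    """Largest entry of |P(t) R(t) - I| after a run of random updates."""
    rng = random.Random(seed)
    R = [[float(i == j) for j in range(d)] for i in range(d)]
    P = [row[:] for row in R]
    for _ in range(steps):
        psi = [rng.uniform(-1, 1) for _ in range(d)]
        R = update_R(R, psi, lam)
        P = update_P(P, psi, lam)
    prod = [[sum(P[i][k] * R[k][j] for k in range(d)) for j in range(d)]
            for i in range(d)]
    return max(abs(prod[i][j] - float(i == j))
               for i in range(d) for j in range(d))


if __name__ == "__main__":
    print(max_identity_error())
```

Each call to `update_P` needs only one matrix-vector product and one outer product, so the cost per sample grows as $O(d^2)$. The gain in (4.50) then follows as $K(t) = (1 - \lambda)\,P(t)\,\psi(t)$ without solving a linear system.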