The Development of Neural Network Based System Identification ...


CHAPTER 2 LITERATURE REVIEW

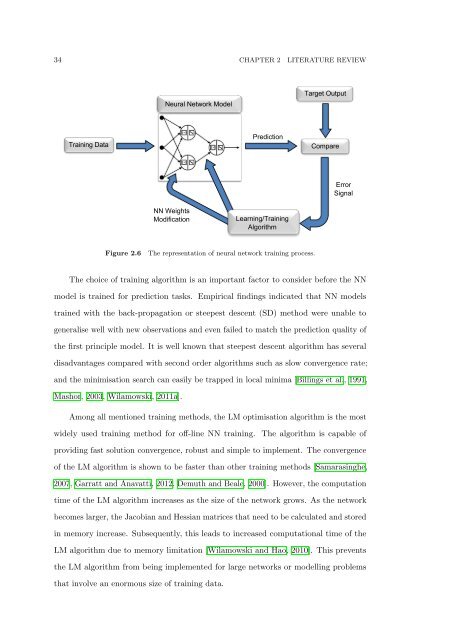

Figure 2.6: The representation of the neural network training process. [Block diagram labels: Training Data, Neural Network Model, Prediction, Target Output, Compare, Error Signal, Learning/Training Algorithm, NN Weights Modification.]

The choice of training algorithm is an important factor to consider before the NN model is trained for prediction tasks. Empirical findings indicated that NN models trained with the back-propagation or steepest descent (SD) method were unable to generalise well to new observations and even failed to match the prediction quality of the first-principles model. The steepest descent algorithm is well known to have several disadvantages compared with second order algorithms: its convergence rate is slow, and the minimisation search can easily become trapped in local minima [Billings et al., 1991, Mashor, 2003, Wilamowski, 2011a].
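The training loop of Figure 2.6 can be sketched for the steepest descent case as follows. This is only a minimal illustration, not the implementation used in this work: the single linear neuron `y = w*x + b`, the toy data, the learning rate, and the epoch count are all assumptions chosen to keep the example short.

```python
def train_steepest_descent(xs, targets, learning_rate=0.5, epochs=500):
    """Fit the toy model y = w*x + b by batch steepest descent.

    The model, learning rate, and epoch count are illustrative assumptions.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction: run the model on the training data.
        predictions = [w * x + b for x in xs]
        # Compare: the error signal is prediction minus target output.
        errors = [p - t for p, t in zip(predictions, targets)]
        # Gradient of the cost 0.5 * mean(error^2) with respect to w and b.
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        # Weight modification: step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy training data generated from y = 2x + 1 (hypothetical example).
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
targets = [2.0 * x + 1.0 for x in xs]
w, b = train_steepest_descent(xs, targets)
```

Even on this two-weight problem the method needs several hundred passes over the data to settle close to `w = 2`, `b = 1`, which hints at the slow convergence noted above. A convex toy problem like this one has no local minima, so it does not illustrate the trapping behaviour.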

Among the training methods mentioned, the LM optimisation algorithm is the most widely used for off-line NN training. The algorithm converges quickly, is robust, and is simple to implement. Its convergence has been shown to be faster than that of other training methods [Samarasinghe, 2007, Garratt and Anavatti, 2012, Demuth and Beale, 2000]. However, the computation time of the LM algorithm increases with the size of the network. As the network becomes larger, the Jacobian and Hessian matrices that must be calculated and stored in memory grow as well, and the resulting memory limitation increases the computation time of the LM algorithm [Wilamowski and Hao, 2010]. This prevents the LM algorithm from being applied to large networks or to modelling problems that involve very large training data sets.
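The trade-off described above can be made concrete with a small sketch. It assumes the standard LM (Levenberg-Marquardt) update, in which the weight change is obtained by solving (JᵀJ + μI)Δw = Jᵀe, with J the Jacobian of the errors and JᵀJ the approximate Hessian. The toy model `y = w*x + b`, the fixed damping value `mu`, the hand-written 2 x 2 solve, and the helper names `lm_step`, `mlp_weight_count`, and `lm_storage_bytes` are all assumptions for illustration; practical implementations adapt μ at every iteration.

```python
def lm_step(weights, xs, targets, mu):
    """One LM update for the toy model y = w*x + b (illustrative assumption)."""
    w, b = weights
    errors = [(w * x + b) - t for x, t in zip(xs, targets)]
    # Jacobian of the errors: one row per sample, one column per weight.
    jac = [[x, 1.0] for x in xs]
    # Damped approximate Hessian J^T J + mu*I (2 x 2 here) and gradient J^T e.
    h11 = sum(r[0] * r[0] for r in jac) + mu
    h12 = sum(r[0] * r[1] for r in jac)
    h22 = sum(r[1] * r[1] for r in jac) + mu
    g1 = sum(r[0] * e for r, e in zip(jac, errors))
    g2 = sum(r[1] * e for r, e in zip(jac, errors))
    # Solve the 2 x 2 linear system by Cramer's rule.
    det = h11 * h22 - h12 * h12
    dw = (h22 * g1 - h12 * g2) / det
    db = (h11 * g2 - h12 * g1) / det
    return (w - dw, b - db)


def mlp_weight_count(layer_sizes):
    """Weights plus biases of a fully connected network, e.g. [4, 10, 1]."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def lm_storage_bytes(n_samples, n_outputs, n_weights, bytes_per_value=8):
    """Bytes needed for the Jacobian and for the approximate Hessian.

    Jacobian: (n_samples * n_outputs) rows by n_weights columns.
    Hessian:  n_weights by n_weights.
    """
    jacobian = n_samples * n_outputs * n_weights * bytes_per_value
    hessian = n_weights * n_weights * bytes_per_value
    return jacobian, hessian


# Toy training data generated from y = 2x + 1 (hypothetical example).
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
targets = [2.0 * x + 1.0 for x in xs]
weights = (0.0, 0.0)
for _ in range(3):
    weights = lm_step(weights, xs, targets, mu=1e-3)

small = mlp_weight_count([4, 10, 1])             # 61 weights
large = mlp_weight_count([4, 100, 1])            # 601 weights
small_bytes = lm_storage_bytes(1000, 1, small)   # (488000, 29768)
large_bytes = lm_storage_bytes(1000, 1, large)   # (4808000, 2889608)
```

Because the toy model is linear, three LM steps are enough to land very close to `w = 2`, `b = 1`; a real NN is nonlinear and needs more iterations. The storage figures show the scaling: the Jacobian grows with the product of sample count and weight count, while the Hessian grows with the square of the weight count, so a roughly tenfold increase in weights raises the Hessian storage by about a factor of one hundred.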