The Development of Neural Network Based System Identification ...

4.3 SYSTEM IDENTIFICATION WITH NEURAL NETWORK

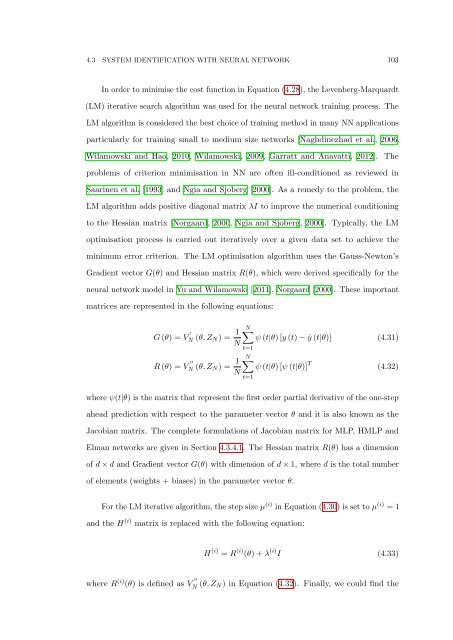

To minimise the cost function in Equation (4.28), the Levenberg-Marquardt (LM) iterative search algorithm was used to train the neural networks. The LM algorithm is widely regarded as the training method of choice in many NN applications, particularly for small- to medium-sized networks [Naghdinezhad et al., 2006, Wilamowski and Hao, 2010, Wilamowski, 2009, Garratt and Anavatti, 2012]. Criterion minimisation problems in NN training are often ill-conditioned, as reviewed in Saarinen et al. [1993] and Ngia and Sjoberg [2000]. As a remedy, the LM algorithm adds a positive diagonal matrix λI to the Hessian matrix to improve its numerical conditioning [Norgaard, 2000, Ngia and Sjoberg, 2000]. The LM optimisation is carried out iteratively over a given data set until the error criterion reaches a minimum. It uses the gradient vector G(θ) and the Gauss-Newton approximation of the Hessian matrix, R(θ), both of which were derived specifically for neural network models in Yu and Wilamowski [2011] and Norgaard [2000]. These matrices are given by the following equations:

$$G(\theta) = V'_N(\theta, Z^N) = \frac{1}{N}\sum_{t=1}^{N} \psi(t|\theta)\,\big[y(t) - \hat{y}(t|\theta)\big] \tag{4.31}$$

$$R(\theta) = V''_N(\theta, Z^N) = \frac{1}{N}\sum_{t=1}^{N} \psi(t|\theta)\,\big[\psi(t|\theta)\big]^T \tag{4.32}$$

where ψ(t|θ) = ∂ŷ(t|θ)/∂θ is the first-order partial derivative of the one-step-ahead prediction with respect to the parameter vector θ, also known as the Jacobian matrix. The complete formulations of the Jacobian matrix for the MLP, HMLP and Elman networks are given in Section 4.3.4.1. The Hessian matrix R(θ) has dimension d × d and the gradient vector G(θ) has dimension d × 1, where d is the total number of elements (weights and biases) in the parameter vector θ.
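The gradient and Hessian in Equations (4.31) and (4.32), together with the λI damping of the LM algorithm, can be illustrated with a minimal pure-Python sketch. It assumes a single-output model whose Jacobian rows ψ(t|θ) are already available (for example, from the network-specific formulas referred to above) and a mean-squared criterion V_N = (1/2N)Σe(t)² with e(t) = y(t) − ŷ(t|θ). Because Equation (4.28) is not reproduced here, that criterion, the resulting update sign θ + (R + λI)⁻¹G (under this criterion the sum in (4.31) is the negative gradient, i.e. a descent direction), and all function names (`gradient_and_hessian`, `damped`, `solve_spd`, `lm_step`, `cond_2x2_sym`) are illustrative assumptions, not the thesis's implementation. The demo also shows the conditioning argument: adding λI raises every eigenvalue of R by λ, which sharply lowers the condition number when the Jacobian columns are nearly collinear.

```python
# Minimal sketch of the LM quantities in pure Python (no NumPy).
# ASSUMPTIONS (not from the source): single-output model, Jacobian rows
# psi[t] = d y_hat(t|theta) / d theta already computed, and a criterion
# V_N = (1/2N) * sum e(t)^2 with e(t) = y(t) - y_hat(t|theta). Under that
# criterion the sum in Eq. (4.31) is the NEGATIVE gradient, so the damped
# step is theta + (R + lam*I)^(-1) G. All function names are hypothetical.


def gradient_and_hessian(psi, errors):
    """G = (1/N) sum psi(t) e(t) (Eq. 4.31); R = (1/N) sum psi(t) psi(t)^T (Eq. 4.32)."""
    n, d = len(psi), len(psi[0])
    G = [0.0] * d
    R = [[0.0] * d for _ in range(d)]
    for row, e in zip(psi, errors):
        for i in range(d):
            G[i] += row[i] * e / n
            for j in range(d):
                R[i][j] += row[i] * row[j] / n
    return G, R  # G has d entries, R is d x d


def damped(R, lam):
    """H = R + lam * I, the LM replacement for the Hessian."""
    d = len(R)
    return [[R[i][j] + (lam if i == j else 0.0) for j in range(d)] for i in range(d)]


def solve_spd(H, b):
    """Solve H x = b by Gaussian elimination without pivoting.

    Adequate here because H = R + lam*I is symmetric positive definite for lam > 0.
    """
    n = len(b)
    A = [list(H[i]) + [b[i]] for i in range(n)]  # augmented copy [H | b]
    for k in range(n):  # forward elimination
        for i in range(k + 1, n):
            factor = A[i][k] / A[k][k]
            for j in range(k, n + 1):
                A[i][j] -= factor * A[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(A[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (A[i][n] - s) / A[i][i]
    return x


def lm_step(theta, psi, errors, lam):
    """One damped update with unit step size: theta + (R + lam*I)^(-1) G."""
    G, R = gradient_and_hessian(psi, errors)
    f = solve_spd(damped(R, lam), G)
    return [t + fi for t, fi in zip(theta, f)]


def cond_2x2_sym(M):
    """Largest/smallest eigenvalue of a symmetric positive (semi)definite 2 x 2 matrix."""
    half_tr = (M[0][0] + M[1][1]) / 2
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = max(half_tr ** 2 - det, 0.0) ** 0.5
    lo, hi = half_tr - disc, half_tr + disc
    return hi / lo if lo > 0 else float("inf")  # inf signals a singular matrix


if __name__ == "__main__":
    # Nearly collinear Jacobian rows give an ill-conditioned R.
    _, R = gradient_and_hessian([[1.0, 1.0], [1.0, 1.01]], [0.0, 0.0])
    print("cond(R)         =", cond_2x2_sym(R))
    print("cond(R + 0.01I) =", cond_2x2_sym(damped(R, 0.01)))
```

Running the file prints a condition number of roughly 1.6 × 10^5 for R and roughly 2.0 × 10^2 for R + 0.01I. For a linear-in-parameters model the Jacobian rows do not depend on θ, so with a very small λ a single `lm_step` from θ = 0 essentially lands on the least-squares solution.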

In the LM algorithm, the step size $\mu^{(i)}$ in Equation (4.30) is set to $\mu^{(i)} = 1$, and the matrix $H^{(i)}$ is replaced by:

$$H^{(i)} = R^{(i)}(\theta) + \lambda^{(i)} I \tag{4.33}$$

where $R^{(i)}(\theta)$ is $V''_N(\theta, Z^N)$ as defined in Equation (4.32). Finally, we could find the