CSEM Scientific and Technical Report 2008


Hand Detection Using Boosted Classifiers

D. Hasler, K. Ali

This research explores the potential of boosted classifiers for object detection. These classifiers combine simple decision rules devised “by hand” into a powerful classification scheme. Detecting the presence of hands in an image was chosen to test the classification framework. Hands were chosen for two reasons: the system can be applied to safety in manufacturing plants, and hand detection is sufficiently hard, given the variability in appearance, to give confidence that the algorithm will generalize to other objects.

Boosted classifiers [1] have been studied intensively in the past few years and are used, for example, for face detection in modern digital cameras. Boosted classifiers offer several advantages:

• The boosting framework comes with theoretical generalization results indicating that the resulting classifier tends not to overfit the training data. In other words, a system trained to detect hands in a particular video sequence has a good chance of also detecting hands in a different setup.

• The detection is based on simple rules that are designed by hand and have a few free (unknown) parameters. Unlike neural networks, which tend to behave like black boxes, boosted classifiers can exploit a human expert's knowledge more easily: the expert designs the simple rule, telling the system which information in the image is relevant for detection, and boosting does the rest.

• The resulting detector can be made computationally very efficient.
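The report does not say how this efficiency is obtained. For rules that count pixels inside rectangles, one common technique is the summed-area table (integral image), which Viola-Jones face detectors use, for example. After a single pass over a binary mask, the count inside any rectangle costs four lookups regardless of the rectangle's size. The following is a minimal illustrative sketch under that assumption. The names `integral_image` and `box_sum` are hypothetical and do not come from the report.

```python
def integral_image(mask):
    """Summed-area table with a leading zero row/column.

    ii[r][c] holds the sum of mask over rows 0..r-1 and columns 0..c-1.
    (Hypothetical helper; one common way to speed up rectangle counts.)
    """
    rows, cols = len(mask), len(mask[0])
    ii = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        running = 0  # sum of mask[r][0..c]
        for c in range(cols):
            running += mask[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + running
    return ii


def box_sum(ii, x, y, w, h):
    """Sum of mask over columns x..x+w-1 and rows y..y+h-1, in four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]
```

You could build one such table per orientation value plus one for the thresholded contrast mask. Each pixel-count rule described below would then cost a constant number of lookups.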

Hand detection is applied to the contrast and orientation images delivered by the DEVISE [2] camera and its vision sensor. These contrast and orientation values are computed on the vision sensor chip and are robust to illumination changes.

To detect hands, the algorithm first pre-processes the image: the image is thresholded and the orientation is quantized into 8 values.
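The pre-processing step can be sketched as follows. This is a minimal sketch that assumes the sensor delivers, for each pixel, a contrast magnitude and an orientation angle in radians in [0, 2π). The report gives neither the actual DEVISE output format nor the threshold value, and the function name `preprocess` is hypothetical.

```python
import math

N_ORIENTATIONS = 8  # the report quantizes orientation into 8 values


def preprocess(contrast, orientation, threshold):
    """Threshold the contrast image and quantize orientation into 8 bins.

    contrast, orientation: 2-D lists of equal shape (orientation assumed in radians).
    Returns (mask, bins): mask[y][x] is 1 where contrast >= threshold, else 0;
    bins[y][x] is the orientation index 0..7, or -1 where the pixel is masked out.
    """
    bin_width = 2.0 * math.pi / N_ORIENTATIONS
    mask, bins = [], []
    for c_row, o_row in zip(contrast, orientation):
        m_row, b_row = [], []
        for c, theta in zip(c_row, o_row):
            if c >= threshold:
                m_row.append(1)
                # Wrap the angle into [0, 2*pi); the final % guards against rounding up to 8.
                b_row.append(int((theta % (2.0 * math.pi)) // bin_width) % N_ORIENTATIONS)
            else:
                m_row.append(0)
                b_row.append(-1)
        mask.append(m_row)
        bins.append(b_row)
    return mask, bins
```

For a 2×2 example with threshold 0.4, only pixels whose contrast is at least 0.4 keep an orientation index. An angle of π/2 falls into bin 2 and an angle of π into bin 4.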

The simple decision rule is as follows. Given the thresholded image and a sliding window:

• Choose a sub-window at coordinates (x, y) with size (w, h), and an orientation O (O ∈ {0..7}).

• Count the pixels with orientation O in the sub-window, and normalize this count by the sum of the (contrast) pixels in the sub-window, for even greater robustness to illumination changes.

• Compare the result with a threshold T, taking a parity P into account: the rule votes for the presence of a hand if the result is greater than T and the parity is positive, or if the result is smaller than T and the parity is negative.
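A single rule instance can be sketched as follows. This minimal sketch assumes the pre-processed sliding window arrives as two equal-sized 2-D lists:

- `mask`: 1 where the contrast passed the threshold.
- `bins`: the orientation index 0..7.

It reads “the sum of the (contrast) pixels” as the number of thresholded contrast pixels in the sub-window. That is one interpretation, not necessarily the authors' exact normalization. Returning 0 for an empty sub-window is also an assumption, and the function names are hypothetical.

```python
def rule_feature(mask, bins, x, y, w, h, o):
    """Fraction of contrast pixels in the sub-window (x, y, w, h) whose orientation is o."""
    count_o = 0
    count_contrast = 0
    for row in range(y, y + h):
        for col in range(x, x + w):
            count_contrast += mask[row][col]
            if bins[row][col] == o:
                count_o += 1
    if count_contrast == 0:
        return 0.0  # assumed convention: no contrast pixels means no evidence
    return count_o / count_contrast


def rule_vote(mask, bins, x, y, w, h, o, t, p):
    """+1 (hand) if (f > T and P > 0) or (f < T and P < 0), otherwise -1."""
    f = rule_feature(mask, bins, x, y, w, h, o)
    return 1 if (f > t and p > 0) or (f < t and p < 0) else -1
```

The parity lets the same rule form express both “many edges of orientation O here suggest a hand” (P > 0) and “few edges of orientation O here suggest a hand” (P < 0).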

This simple rule is given to the boosting procedure, which chooses the parameters (x, y, w, h, O, P, T) and samples a number of such rules. These rules then vote on the presence of a hand. The detector is nothing but the outcome of this vote, followed by some trivial post-processing that removes repeated hits.
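The report does not name the boosting variant. Purely as an illustration, the sketch below uses discrete AdaBoost over a pool of candidate rules sampled in advance. Each candidate is any function returning +1 (hand) or -1 (no hand), for example one instance of the rule above with fixed (x, y, w, h, O, P, T). In each round, the sketch keeps the candidate with the lowest weighted error and then re-weights the training samples. The final detector is the weighted vote. All names are hypothetical.

```python
import math


def adaboost(samples, labels, candidates, n_rounds):
    """Discrete AdaBoost: select rules from `candidates` and weight them.

    samples: list of inputs; labels: list of +1/-1.
    candidates: list of functions sample -> +1/-1.
    Returns a list of (alpha, rule) pairs.
    """
    n = len(samples)
    weights = [1.0 / n] * n
    # Evaluate every candidate on every sample once.
    preds = [[rule(s) for s in samples] for rule in candidates]
    ensemble = []
    for _ in range(n_rounds):
        # Pick the candidate with the lowest weighted error.
        best_k, best_err = None, float("inf")
        for k, pk in enumerate(preds):
            err = sum(wt for wt, p, y in zip(weights, pk, labels) if p != y)
            if err < best_err:
                best_k, best_err = k, err
        if best_err >= 0.5:
            break  # no candidate beats chance on the weighted data
        err = max(best_err, 1e-10)  # avoid division by zero for a perfect rule
        alpha = 0.5 * math.log((1.0 - err) / err)
        ensemble.append((alpha, candidates[best_k]))
        # Misclassified samples gain weight, correctly classified ones lose weight.
        weights = [wt * math.exp(-alpha * y * p)
                   for wt, p, y in zip(weights, preds[best_k], labels)]
        total = sum(weights)
        weights = [wt / total for wt in weights]
    return ensemble


def vote(ensemble, sample):
    """Weighted vote of the selected rules: +1 means 'hand present'."""
    score = sum(alpha * rule(sample) for alpha, rule in ensemble)
    return 1 if score > 0 else -1
```

Because the candidate pool is fixed here, T and P are not tuned during training. An alternative design would fit the best threshold and parity for each (x, y, w, h, O) at every round.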

In this particular example, the expert decided that the relevant information for detecting a hand is the geometric configuration of contrast edges of given orientations in the image.

At a 95% detection rate, the classifier obtained with this technique gives one false alarm every 100’000 tests, or roughly one every 11 images (the sliding window performs 9’000 tests per image).
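The report mentions the sliding window and the removal of repeated hits but describes neither in detail. The sketch below assumes a single window size scanned with a fixed step. It also assumes a simple greedy rule that discards any hit lying within `min_dist` pixels (Chebyshev distance) of a hit already kept. The names `scan`, `merge_repeated_hits`, `classify`, `step` and `min_dist` are hypothetical.

```python
def scan(image_w, image_h, win, step, classify):
    """Slide a win x win window over the image; return top-left corners where classify(x, y) > 0."""
    hits = []
    for y in range(0, image_h - win + 1, step):
        for x in range(0, image_w - win + 1, step):
            if classify(x, y) > 0:
                hits.append((x, y))
    return hits


def merge_repeated_hits(hits, min_dist):
    """Greedy post-processing: keep a hit only if no kept hit lies within min_dist of it."""
    kept = []
    for x, y in hits:
        if all(max(abs(x - kx), abs(y - ky)) >= min_dist for kx, ky in kept):
            kept.append((x, y))
    return kept
```

A single hand typically triggers several neighboring window positions. Merging them keeps one detection per hand.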

On a regular PC, the detection runs in real time at 25 frames/second.
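A little arithmetic relates the figures quoted above. The sketch assumes the 9’000 tests run on every frame at 25 frames/second and that all of the frame time goes to evaluating rules. The last number is therefore only a rough upper bound on the average time available per test.

```python
tests_per_image = 9_000          # sliding-window tests per image (from the text)
false_alarm_rate = 1 / 100_000   # one false alarm per 100'000 tests at 95% detection
frames_per_second = 25           # real-time rate on a regular PC (from the text)

false_alarms_per_image = tests_per_image * false_alarm_rate   # about 0.09
images_per_false_alarm = 1 / false_alarms_per_image           # about 11.1
tests_per_second = tests_per_image * frames_per_second        # 225,000
time_per_test_us = 1e6 / tests_per_second                     # about 4.4 microseconds
```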

The simplicity of the rule used to detect the hand, compared with the performance of the resulting detector, hints at the potential of this technology and confirms the robustness of the data representation delivered by the vision sensor.

The algorithm could be applied in manufacturing plants, where the position of the hand can be used for safety applications. For example, on a rolling mill, no safety devices exist that prevent a hand from being drawn into the mill. Using this technology, it is possible to stop the machine if the hand comes too close to the drum. In general, a vision sensor could be mounted on any machine and, with some specific adaptation, allow the machine to operate only if no hands are present at the sensitive locations.

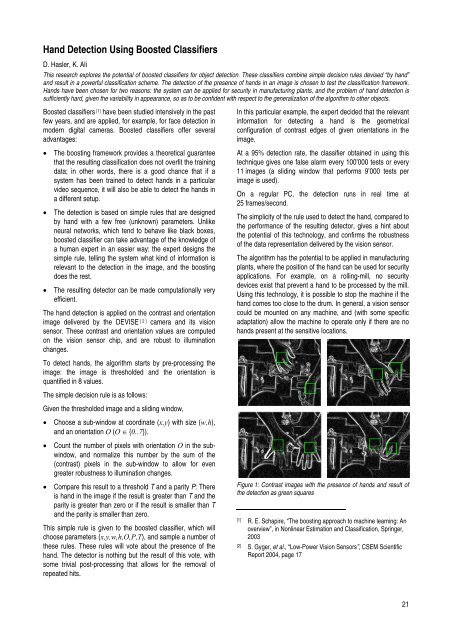

Figure 1: Contrast images with hands present, and the detection results shown as green squares

[1] R. E. Schapire, “The boosting approach to machine learning: An overview”, in Nonlinear Estimation and Classification, Springer, 2003

[2] S. Gyger et al., “Low-Power Vision Sensors”, CSEM Scientific Report 2004, p. 17
