6th European Conference - Academic Conferences

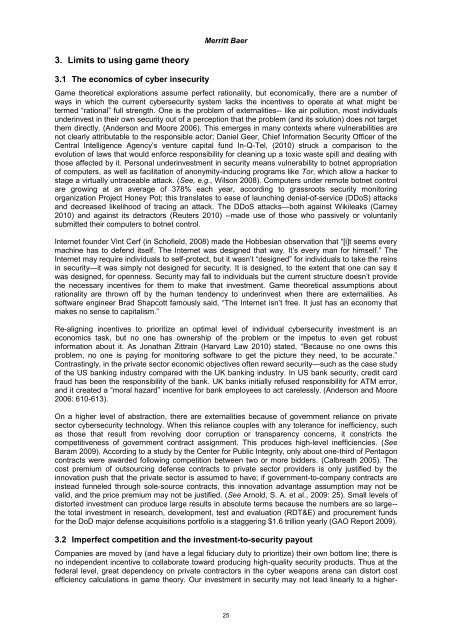

3. Limits to using game theory

3.1 The economics of cyber insecurity

Merritt Baer

Game-theoretic explorations assume perfect rationality, but in economic terms there are a number of ways in which the current cybersecurity system lacks the incentives to operate at what might be termed “rational” full strength. One is the problem of externalities: as with air pollution, most individuals underinvest in their own security out of a perception that the problem (and its solution) does not target them directly (Anderson and Moore 2006). This emerges in many contexts where vulnerabilities are not clearly attributable to the responsible actor; Daniel Geer, Chief Information Security Officer of In-Q-Tel, the Central Intelligence Agency's venture capital fund, (2010) struck a comparison to the evolution of laws that would enforce responsibility for cleaning up a toxic waste spill and dealing with those affected by it. Personal underinvestment in security leaves computers vulnerable to botnet appropriation and facilitates the use of anonymity-inducing programs like Tor, which allow a hacker to stage a virtually untraceable attack (see, e.g., Wilson 2008). The number of computers under remote botnet control is growing at an average of 378% each year, according to the grassroots security monitoring organization Project Honey Pot; this makes distributed denial-of-service (DDoS) attacks easier to launch and harder to trace. The DDoS attacks both against Wikileaks (Carney 2010) and against its detractors (Reuters 2010) made use of computers whose owners passively or voluntarily submitted them to botnet control.

Internet founder Vint Cerf (in Schofield 2008) made the Hobbesian observation that “[i]t seems every machine has to defend itself. The Internet was designed that way. It's every man for himself.” The Internet may require individuals to self-protect, but it wasn't “designed” for individuals to take the reins in security; it was simply not designed for security. It was designed, to the extent that one can say it was designed, for openness. Security may fall to individuals, but the current structure doesn't provide the necessary incentives for them to make that investment. Game-theoretic assumptions about rationality are thrown off by the human tendency to underinvest when there are externalities. As software engineer Brad Shapcott famously said, “The Internet isn't free. It just has an economy that makes no sense to capitalism.”
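The underinvestment argument can be made concrete with a toy model. The sketch below is purely illustrative and is not drawn from the cited sources: it assumes n identical users, a private benefit `b * sqrt(x)` from investing `x` in security, a spillover `e * sqrt(x)` that each user's investment confers on every other user, and a linear cost `c * x`. All parameter values and function names are hypothetical.

```python
import math

def private_payoff(x_own, x_others, n, b, e, c):
    """One user's payoff: own benefit + spillover from the other n-1 users - own cost.

    Assumed functional form (illustrative only): concave benefits, linear cost.
    """
    return b * math.sqrt(x_own) + e * (n - 1) * math.sqrt(x_others) - c * x_own

def best_response(x_others, n, b, e, c, grid):
    """Grid point that maximizes a single user's own payoff, taking others as given."""
    return max(grid, key=lambda x: private_payoff(x, x_others, n, b, e, c))

def social_optimum(n, b, e, c, grid):
    """Common investment level that maximizes the sum of all n payoffs."""
    return max(grid, key=lambda x: n * private_payoff(x, x, n, b, e, c))

# Hypothetical parameters: 11 users, spillover worth 10% of the private benefit.
n, b, e, c = 11, 1.0, 0.1, 1.0
grid = [i / 1000 for i in range(4001)]  # candidate investments 0.000 .. 4.000

# Payoffs are separable here, so the best response does not depend on what
# the others invest; it is therefore also the symmetric equilibrium level.
nash = best_response(0.0, n, b, e, c, grid)
social = social_optimum(n, b, e, c, grid)

print(f"individually chosen investment: {nash:.3f}")
print(f"socially optimal investment:    {social:.3f}")
```

Under these assumptions each user ignores the benefit their investment confers on others and so invests less than the level that would maximize total welfare. The gap widens as the spillover `e` or the number of users `n` grows.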

Re-aligning incentives to prioritize an optimal level of individual cybersecurity investment is an economics task, but no one has ownership of the problem or the impetus even to get robust information about it. As Jonathan Zittrain (Harvard Law 2010) stated, “Because no one owns this problem, no one is paying for monitoring software to get the picture they need, to be accurate.” By contrast, in the private sector economic objectives often reward security, as in the case study comparing the US and UK banking industries. In the US, credit card fraud has been the responsibility of the bank. UK banks initially refused responsibility for ATM errors, which created a “moral hazard” incentive for bank employees to act carelessly (Anderson and Moore 2006: 610-613).

On a higher level of abstraction, there are externalities because of government reliance on private sector cybersecurity technology. When this reliance is coupled with any tolerance for inefficiency, such as that resulting from revolving-door corruption or transparency concerns, it constricts the competitiveness of government contract assignment. This produces high-level inefficiencies (see Baram 2009). According to a study by the Center for Public Integrity, only about one-third of Pentagon contracts were awarded following competition between two or more bidders (Calbreath 2005). The cost premium of outsourcing defense contracts to private sector providers is justified only by the innovation push that the private sector is assumed to have; if government-to-company contracts are instead funneled through sole-source awards, this assumed innovation advantage may not hold, and the price premium may not be justified (see Arnold, S. A. et al. 2009: 25). Small levels of distorted investment can produce large results in absolute terms because the numbers are so large: the total investment in research, development, test and evaluation (RDT&E) and procurement funds for the DoD major defense acquisitions portfolio is a staggering $1.6 trillion (GAO Report 2009).
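To give a sense of scale, the short sketch below converts a few distortion shares into absolute dollar amounts against the $1.6 trillion portfolio figure cited above. The shares themselves (0.1%, 1%, 5%) are hypothetical values chosen for illustration, not estimates from the cited reports, and the function name is likewise an assumption.

```python
PORTFOLIO_USD = 1.6e12  # portfolio figure cited in the text (GAO Report 2009)

def distorted_dollars(share, total=PORTFOLIO_USD):
    """Absolute dollars affected when a given share of the total is distorted."""
    return share * total

# Hypothetical distortion shares, for illustration only.
for share in (0.001, 0.01, 0.05):
    billions = distorted_dollars(share) / 1e9
    print(f"{share:.1%} of the portfolio is about ${billions:,.1f} billion")
```

Even a distortion of one percent corresponds to roughly $16 billion, which is the sense in which small relative inefficiencies become large in absolute terms.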

3.2 Imperfect competition and the investment-to-security payout<br />

Companies are moved by (and have a legal fiduciary duty to prioritize) their own bottom line; there is no independent incentive to collaborate toward producing high-quality security products. Thus at the federal level, great dependency on private contractors in the cyber weapons arena can distort cost efficiency calculations in game theory. Our investment in security may not lead linearly to a higher-
