6th European Conference - Academic Conferences



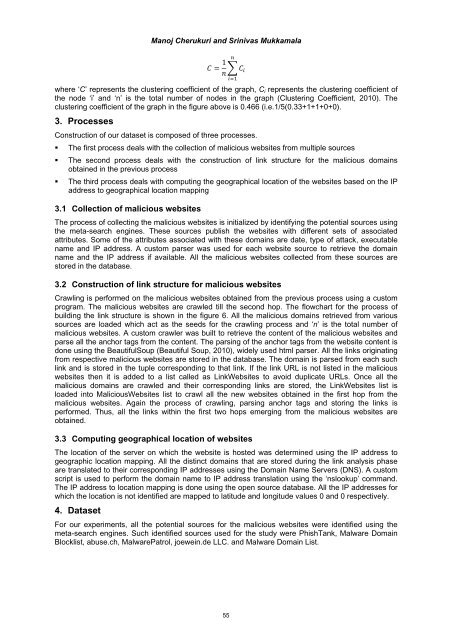

Manoj Cherukuri and Srinivas Mukkamala

$$C = \frac{1}{n}\sum_{i=1}^{n} C_i$$

where $C$ is the clustering coefficient of the graph, $C_i$ is the clustering coefficient of node $i$, and $n$ is the total number of nodes in the graph (Clustering Coefficient, 2010). The clustering coefficient of the graph in the figure above is 0.466, i.e. (0.33 + 1 + 1 + 0 + 0)/5.
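The averaging step can be checked with a short Python sketch. The graph in the figure cannot be recovered from the text, so the five-node edge list below (nodes A to E) is a hypothetical graph chosen only because its local coefficients (1/3, 1, 1, 0, 0) match the values in the example. The local coefficient uses the standard undirected definition $C_i = 2e_i / (k_i(k_i - 1))$, where $e_i$ is the number of links among the $k_i$ neighbours of node $i$; assigning 0 to nodes with fewer than two neighbours is an assumed convention.

```python
from itertools import combinations


def build_undirected(edges):
    """Build an adjacency map {node: set(neighbours)} from an edge list."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def local_clustering(adj, node):
    """C_i: fraction of neighbour pairs of `node` that are themselves linked."""
    neighbours = adj[node]
    k = len(neighbours)
    if k < 2:
        return 0.0  # assumed convention for degree-0/1 nodes
    links = sum(1 for u, v in combinations(neighbours, 2) if v in adj[u])
    return 2.0 * links / (k * (k - 1))


def average_clustering(adj):
    """C = (1/n) * sum of C_i over all n nodes."""
    return sum(local_clustering(adj, node) for node in adj) / len(adj)


# Hypothetical graph reproducing the local values 1/3, 1, 1, 0, 0.
edges = [("A", "B"), ("A", "C"), ("A", "D"), ("B", "C"), ("D", "E")]
adj = build_undirected(edges)
print({n: round(local_clustering(adj, n), 2) for n in sorted(adj)})
print(round(average_clustering(adj), 3))
```

With the exact value 1/3 rather than 0.33 the average is 7/15, which rounds to 0.467; the 0.466 above comes from summing the rounded 0.33.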

3. Processes

The construction of our dataset consists of three processes:

- The first process collects malicious websites from multiple sources.
- The second process constructs the link structure for the malicious domains obtained in the first process.
- The third process computes the geographical location of the websites using an IP address to geographical location mapping.

3.1 Collection of malicious websites

The collection process begins by identifying potential sources of malicious websites using meta-search engines. These sources publish the websites with different sets of associated attributes, such as the date, the type of attack, the executable name and the IP address. A custom parser was written for each source to retrieve the domain name and, where available, the IP address. All malicious websites collected from these sources are stored in the database.
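A minimal sketch of the per-source parsing and storage step is shown below. It assumes two hypothetical feed layouts (a CSV feed of date, domain, IP address and attack type, and a plain list of domains); the real sources each use their own formats, which is why one parser per source is needed. SQLite, the table name `malicious_websites` and the source keys `feed_a`/`feed_b` are illustrative assumptions, since the paper does not name its database.

```python
import csv
import io
import sqlite3


def parse_csv_feed(text):
    """Hypothetical layout: date,domain,ip,attack_type (ip may be empty)."""
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 2 or row[0].startswith("#"):
            continue  # skip comments and malformed rows
        domain = row[1].strip().lower()
        ip = row[2].strip() if len(row) > 2 and row[2].strip() else None
        yield domain, ip


def parse_plain_list(text):
    """Hypothetical layout: one domain per line, no IP address."""
    for line in text.splitlines():
        line = line.strip().lower()
        if line and not line.startswith("#"):
            yield line, None


# One parser per source, as described in the text.
PARSERS = {"feed_a": parse_csv_feed, "feed_b": parse_plain_list}


def store(conn, source, records):
    """Insert (domain, ip) records, ignoring repeats from the same source."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS malicious_websites ("
        "domain TEXT, ip TEXT, source TEXT, UNIQUE(domain, source))"
    )
    conn.executemany(
        "INSERT OR IGNORE INTO malicious_websites VALUES (?, ?, ?)",
        [(domain, ip, source) for domain, ip in records],
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
store(conn, "feed_a", PARSERS["feed_a"]("2010-01-05,Bad.example,192.0.2.10,trojan\n"))
store(conn, "feed_b", PARSERS["feed_b"]("# comment\nevil.example\n"))
rows = conn.execute(
    "SELECT domain, ip, source FROM malicious_websites ORDER BY domain"
).fetchall()
print(rows)
```

Keeping the parsers in a dictionary keyed by source means adding a new feed only requires one new function, while the storage step stays shared.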

3.2 Construction of link structure for malicious websites

The malicious websites obtained in the previous process are crawled up to the second hop using a custom program. The flowchart of the process for building the link structure is shown in Figure 6. All malicious domains retrieved from the various sources are loaded and act as the seeds for the crawling process; 'n' denotes the total number of malicious websites. A custom crawler retrieves the content of each malicious website and parses all anchor tags from that content using Beautiful Soup (Beautiful Soup, 2010), a widely used HTML parser. All links originating from each malicious website are stored in the database. The domain is parsed from each link and stored in the tuple corresponding to that link. If a link URL is not already in the list of malicious websites, it is added to a list called LinkWebsites, which avoids duplicate URLs. Once all malicious domains have been crawled and their links stored, the LinkWebsites list is loaded into the MaliciousWebsites list so that the new websites found in the first hop can be crawled. The crawling, anchor-tag parsing and link-storing steps are then repeated. Thus, all links within the first two hops of the malicious websites are obtained.
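The two-hop crawl can be sketched in Python as follows. This is not the authors' crawler: to stay self-contained it swaps Beautiful Soup for the standard-library `html.parser`, and it replaces HTTP fetching with a hypothetical `fetch(url)` hook (here a dictionary lookup) so it runs offline; in practice the hook could wrap `urllib.request.urlopen`. Seeds are assumed to be full URLs rather than bare domains, and `AnchorParser`, `extract_links` and `crawl_two_hops` are illustrative names. The variables `malicious_websites` and `link_websites` mirror the MaliciousWebsites and LinkWebsites lists in the text.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class AnchorParser(HTMLParser):
    """Collects href values of <a> tags (stdlib stand-in for Beautiful Soup)."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_links(base_url, html):
    """Return absolute http(s) URLs for every anchor tag in `html`."""
    parser = AnchorParser()
    parser.feed(html)
    parser.close()
    links = []
    for href in parser.hrefs:
        url = urljoin(base_url, href)  # resolve relative links
        if urlparse(url).scheme in ("http", "https"):
            links.append(url)
    return links


def crawl_two_hops(seeds, fetch):
    """Crawl the seeds, then every new website found from them.

    `fetch(url)` is a hypothetical hook returning page HTML or None.
    Returns (source_url, link_url, link_domain) tuples.
    """
    link_table = []
    malicious_websites = list(seeds)
    known = set(seeds)
    for _hop in range(2):
        link_websites = []  # new websites discovered in this hop
        for site in malicious_websites:
            html = fetch(site)
            if html is None:
                continue
            for link in extract_links(site, html):
                domain = urlparse(link).hostname
                link_table.append((site, link, domain))
                if link not in known:  # avoid duplicate URLs
                    known.add(link)
                    link_websites.append(link)
        malicious_websites = link_websites  # next hop crawls the new sites
    return link_table


pages = {
    "http://bad.example/": '<a href="http://hub.example/x">x</a> <a href="/local">l</a>',
    "http://hub.example/x": '<a href="https://far.example/">f</a>',
}
link_rows = crawl_two_hops(["http://bad.example/"], pages.get)
for row in link_rows:
    print(row)
```

Limiting the loop to two iterations is what bounds the crawl at the second hop; links found in the second hop are recorded but not crawled further.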

3.3 Computing geographical location of websites

The location of the server hosting each website was determined using an IP address to geographic location mapping. All distinct domains stored during the link analysis phase are translated to their corresponding IP addresses using the Domain Name System (DNS). A custom script performs this domain name to IP address translation using the 'nslookup' command. The IP addresses are then mapped to locations using an open source database. IP addresses whose location cannot be identified are assigned a latitude of 0 and a longitude of 0.
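A sketch of the resolution and geolocation step, under stated assumptions: it uses Python's `socket.gethostbyname` instead of calling `nslookup`, and a tiny hypothetical range table (documentation-only address blocks with made-up coordinates) in place of the unnamed open source database. Assigning (0, 0) to domains that do not resolve at all is an assumption extending the paper's rule for unlocated IP addresses. The resolver is a parameter so the example can run against a fake DNS table instead of live lookups.

```python
import bisect
import ipaddress
import socket


def ip_int(text):
    return int(ipaddress.ip_address(text))


# Hypothetical table rows: (first_ip, last_ip, latitude, longitude).
GEO_RANGES = sorted([
    (ip_int("192.0.2.0"), ip_int("192.0.2.255"), 48.85, 2.35),
    (ip_int("198.51.100.0"), ip_int("198.51.100.255"), 40.71, -74.01),
])
RANGE_STARTS = [row[0] for row in GEO_RANGES]


def resolve(domain):
    """Domain -> IPv4 address via the system resolver (stand-in for nslookup)."""
    try:
        return socket.gethostbyname(domain)
    except (OSError, UnicodeError):
        return None


def locate(ip):
    """IP -> (latitude, longitude); unknown or missing IPs map to (0, 0)."""
    if ip is None:
        return (0.0, 0.0)  # assumption: unresolved domains treated as unknown
    value = ip_int(ip)
    i = bisect.bisect_right(RANGE_STARTS, value) - 1
    if i >= 0 and GEO_RANGES[i][0] <= value <= GEO_RANGES[i][1]:
        return (GEO_RANGES[i][2], GEO_RANGES[i][3])
    return (0.0, 0.0)


def geolocate_domains(domains, resolver=resolve):
    """Map each distinct domain to its (latitude, longitude)."""
    return {d: locate(resolver(d)) for d in set(domains)}


fake_dns = {"bad.example": "192.0.2.10", "hub.example": "203.0.113.5"}
result = geolocate_domains(["bad.example", "hub.example", "gone.example"], fake_dns.get)
print(result)
```

Keeping the range starts sorted lets `bisect` find the candidate range in logarithmic time, a common way to query range-based IP location tables.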

4. Dataset

For our experiments, potential sources of malicious websites were identified using meta-search engines. The sources used in this study were PhishTank, Malware Domain Blocklist, abuse.ch, MalwarePatrol, joewein.de LLC and Malware Domain List.
