Low (web) Quality - BALTEX

Weighting multi-models in seasonal forecasting – and what can be learnt for<br />

the combination of RCMs<br />

Andreas P. Weigel, Mark A. Liniger and C. Appenzeller<br />

Federal Office of Meteorology and Climatology, MeteoSwiss, Switzerland<br />

Email: andreas.weigel@meteoswiss.ch<br />

1. Introduction<br />

Multi-model combination (MMC) is a pragmatic approach<br />

to estimate the range of uncertainties induced by model<br />

error. MMC is now routinely applied on essentially all time<br />

scales, ranging from the scale of weather forecasts to the<br />

scale of climate-change scenarios, and its success has been<br />

demonstrated in many studies (e.g. Palmer et al. 2004).<br />

Simple multi-models can be easily constructed by pooling<br />

together the available single model predictions with equal<br />

weight. However, given that models may differ in their<br />

quality and prediction skill, it has been suggested to further<br />

optimize the effect of MMC by weighting the participating<br />

models according to their prior performance (e.g. Giorgi and<br />

Mearns 2002). How can such weights be objectively<br />

estimated? How robust are such estimates, and what are the<br />

consequences if “wrong” weights are applied, i.e. if the<br />

weights are not fully consistent with the true model skill? It<br />

is the aim of this study to discuss these questions using the<br />

example of seasonal forecasts as well as synthetic toy model<br />

forecasts, i.e. prediction contexts where a sufficient amount<br />

of training and verification data is available. Potential<br />

implications of the results for the construction of weighted<br />

RCM multi-models will be discussed.<br />

2. Model weighting in seasonal forecasting<br />

The weighting method presented in this study has been<br />

described in detail in Weigel et al. (2008). Essentially,<br />

optimum weights are obtained by minimizing a universal<br />

skill metric called ignorance (Roulston and Smith 2002), a<br />

score which is derived from information theory. The<br />

ignorance quantifies the information deficit (measured in<br />

bits) of a user who is in possession of a probabilistic<br />

prediction, but does not yet know the true outcome. This<br />

metric has its fundamental justification in the fact that it is<br />

the aim of any prediction strategy to maximize the available<br />

information about a future event or, equivalently, to minimize<br />

the information deficit. The method has been applied and<br />

tested in cross-validation mode on a set of 40 years of<br />

hindcast data from two models of the DEMETER database

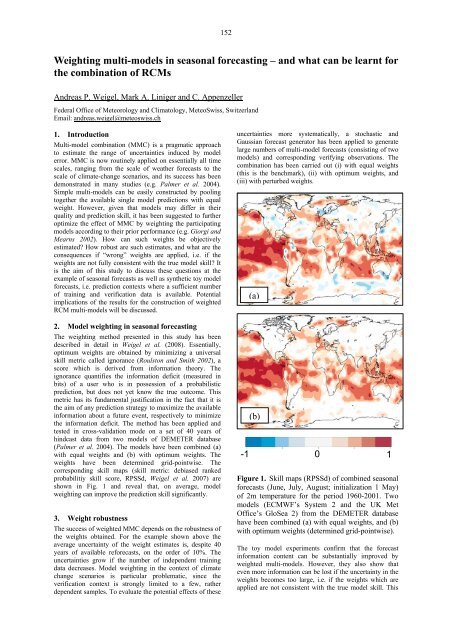

(Palmer et al. 2004). The models have been combined (a)<br />

with equal weights and (b) with optimum weights. The<br />

weights have been determined grid-pointwise. The<br />

corresponding skill maps (skill metric: debiased ranked<br />

probability skill score, RPSSd, Weigel et al. 2007) are<br />

shown in Fig. 1 and reveal that, on average, model<br />

weighting can improve the prediction skill significantly.<br />
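The idea of choosing weights by minimizing the ignorance can be illustrated with a minimal sketch. It is not the procedure of Weigel et al. (2008), whose details are not reproduced here. It assumes categorical probability forecasts, a linear mixture of the two models' probabilities, and a simple grid search over the weight. The function names `ignorance` and `optimum_weight` and all numbers are hypothetical and serve illustration only.

```python
import numpy as np

def ignorance(probs, obs):
    """Mean ignorance in bits: -log2 of the probability given to the observed category.

    probs: array (n_forecasts, n_categories), each row sums to 1
    obs:   integer array (n_forecasts,), index of the observed category
    """
    p_obs = probs[np.arange(len(obs)), obs]
    # Clip to avoid log2(0) if a forecast gives zero probability to the outcome
    return float(np.mean(-np.log2(np.clip(p_obs, 1e-12, 1.0))))

def optimum_weight(probs_a, probs_b, obs, n_grid=101):
    """Grid search for w in [0, 1] minimizing the ignorance of w*A + (1-w)*B."""
    grid = np.linspace(0.0, 1.0, n_grid)
    scores = [ignorance(w * probs_a + (1.0 - w) * probs_b, obs) for w in grid]
    best = int(np.argmin(scores))
    return grid[best], scores[best]

# Hand-made training set with three categories (hypothetical numbers)
probs_a = np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3],
                    [0.1, 0.2, 0.7], [0.5, 0.3, 0.2]])
probs_b = np.array([[0.4, 0.4, 0.2], [0.3, 0.3, 0.4],
                    [0.3, 0.3, 0.4], [0.2, 0.3, 0.5]])
obs = np.array([0, 1, 2, 0])

w_opt, ign_opt = optimum_weight(probs_a, probs_b, obs)
ign_equal = ignorance(0.5 * probs_a + 0.5 * probs_b, obs)
print(f"optimum weight for model A: {w_opt:.2f}")
print(f"ignorance (bits): equal weights {ign_equal:.3f}, optimum weights {ign_opt:.3f}")
```

In a real application the weights would be estimated on training data and evaluated on independent data, as in the cross-validation mode described above. Evaluating on the training set, as this sketch does, overstates the gain.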

3. Weight robustness<br />

The success of weighted MMC depends on the robustness of<br />

the weights obtained. For the example shown above the<br />

average uncertainty of the weight estimates is, despite 40<br />

years of available reforecasts, on the order of 10%. The<br />

uncertainties grow as the amount of independent training<br />

data decreases. Model weighting in the context of climate<br />

change scenarios is particularly problematic, since the<br />

verification context is strongly limited to a few, rather<br />

dependent samples. To evaluate the potential effects of these<br />

uncertainties more systematically, a stochastic<br />

Gaussian forecast generator has been applied to generate<br />

large numbers of multi-model forecasts (consisting of two<br />

models) and corresponding verifying observations. The<br />

combination has been carried out (i) with equal weights<br />

(this is the benchmark), (ii) with optimum weights, and<br />

(iii) with perturbed weights.<br />
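The three combinations can be sketched with a simple Gaussian toy setup. The generator used in the study is not specified in this text, so the following is a hypothetical stand-in. It assumes a standard-normal predictable signal, independent Gaussian model errors with standard deviations `sigma1` and `sigma2`, Gaussian observation noise, and a forecast spread that is perfectly calibrated for the weight actually applied. Under these assumptions the optimum weight is the inverse-variance weight.

```python
import numpy as np

def gaussian_ignorance(mean, var, obs):
    """Mean ignorance (bits) of Gaussian predictive densities N(mean, var) at obs."""
    log_pdf = -0.5 * np.log(2.0 * np.pi * var) - 0.5 * (obs - mean) ** 2 / var
    return float(np.mean(-log_pdf / np.log(2.0)))

def toy_experiment(w, sigma1=0.5, sigma2=1.0, noise=0.5, n=200_000, seed=0):
    """Ignorance of the two-model forecast w*m1 + (1-w)*m2 in a Gaussian toy world."""
    rng = np.random.default_rng(seed)
    signal = rng.normal(0.0, 1.0, n)              # predictable part
    obs = signal + rng.normal(0.0, noise, n)      # verifying observations
    m1 = signal + rng.normal(0.0, sigma1, n)      # model 1: smaller error
    m2 = signal + rng.normal(0.0, sigma2, n)      # model 2: larger error
    mean = w * m1 + (1.0 - w) * m2
    # Assumption: spread is perfectly calibrated for the applied weight
    var = noise**2 + (w * sigma1) ** 2 + ((1.0 - w) * sigma2) ** 2
    return gaussian_ignorance(mean, var, obs)

# Inverse-variance weight for model 1 (optimal under the assumptions above)
w_opt = 1.0**2 / (0.5**2 + 1.0**2)

ign_equal = toy_experiment(0.5)       # (i) benchmark
ign_opt = toy_experiment(w_opt)       # (ii) optimum weights
ign_perturbed = toy_experiment(0.1)   # (iii) weight inconsistent with true skill
print(f"equal: {ign_equal:.3f}  optimum: {ign_opt:.3f}  perturbed: {ign_perturbed:.3f} bits")
```

In this sketch the strongly perturbed weight favours the poorer model, so the combined forecast carries less information than the equal-weight benchmark, while the optimum weight carries more.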


Figure 1. Skill maps (RPSSd) of combined seasonal<br />

forecasts (June, July, August; initialization 1 May)<br />

of 2m temperature for the period 1960-2001. Two<br />

models (ECMWF’s System 2 and the UK Met<br />

Office’s GloSea 2) from the DEMETER database<br />

have been combined (a) with equal weights, and (b)<br />

with optimum weights (determined grid-pointwise).<br />

The toy model experiments confirm that the forecast<br />

information content can be substantially improved by<br />

weighted multi-models. However, they also show that<br />

even more information can be lost if the uncertainty in the<br />

weights becomes too large, i.e. if the weights which are<br />

applied are not consistent with the true model skill. This<br />
