Mathematics in Independent Component Analysis

Mathematics in Independent Component Analysis

Mathematics in Independent Component Analysis

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

Chapter 7. Proc. ISCAS 2005, pages 5878-5881 125<br />

<strong>in</strong>variant under these transformations. In [7] we show that<br />

these are the only <strong>in</strong>determ<strong>in</strong>acies, given some additional weak<br />

restrictions to the model, namely that A has to be k-admissible<br />

and that s is not allowed to conta<strong>in</strong> a Gaussian k-component.<br />

As usual by preprocess<strong>in</strong>g of the observations x by whiten<strong>in</strong>g<br />

we may also assume that Cov(x) = I. Then I =<br />

Cov(x) =A Cov(s)A ⊤ = AA ⊤ so A is orthogonal.<br />

B. MICA us<strong>in</strong>g Hessian diagonalization (MHICA)<br />

We assume that s admits a C2−density ps. Us<strong>in</strong>g orthogonality<br />

of A we get ps(s0) =px(As0) for s0 ∈ Rnk .Let<br />

Hf (x0) denote the Hessian of f evaluated at x0. It transforms<br />

like a 2-tensor so locally at s0 with ps(s0) > 0 we get<br />

Hln ps (s0)<br />

⊤<br />

=Hln px◦A(s0) =AHln px (As0)A<br />

The key idea now lies <strong>in</strong> the fact that s is assumed to be k<strong>in</strong>dependent,<br />

so ps factorizes <strong>in</strong>to n groups depend<strong>in</strong>g only<br />

on k separate variables each. So ln ps is a sum of functions<br />

depend<strong>in</strong>g on k separate variables hence Hln ps (s0) is blockdiagonal<br />

i.e. a k-scal<strong>in</strong>g.<br />

The algorithm, multidimensional Hessian ICA (MHICA),<br />

now simply uses the block-diagonality structure from equation<br />

1 and performs JBD of estimates of a set of Hessians<br />

Hln ps (si) evaluated at different po<strong>in</strong>ts si ∈ Rnk . Given slight<br />

restrictions on the eigenvalues, the result<strong>in</strong>g block diagonalizer<br />

then equals A⊤ except for k-scal<strong>in</strong>g and permutation. The<br />

Hessians are estimated us<strong>in</strong>g kernel-density approximation<br />

with a sufficiently smooth kernel, but other methods such<br />

as approximation us<strong>in</strong>g f<strong>in</strong>ite differences are possible, too.<br />

Density approximation is problematic, but <strong>in</strong> this sett<strong>in</strong>g due to<br />

the fact that we can use many Hessians we only need rough<br />

estimates. For more details on the kernel approximation we<br />

refer to the one-dimensional Hessian ICA algorithm from [8].<br />

MHICA generalizes one-dimensional ideas proposed <strong>in</strong> [8],<br />

[9]. More generally, we could have also used characteristic<br />

functions <strong>in</strong>stead of densities, which leads to a related algorithm,<br />

see [10] for the s<strong>in</strong>gle-dimensional ICA case.<br />

IV. MULTIDIMENSIONAL TIME DECORRELATION<br />

Instead of assum<strong>in</strong>g k-<strong>in</strong>dependence of the sources <strong>in</strong><br />

the MBSS problem, <strong>in</strong> this section we assume that s is a<br />

multivariate centered discrete WSS random process such that<br />

its symmetrized autocovariances<br />

¯Rs(τ) := 1 � � ⊤<br />

E s(t + τ)s(t)<br />

2<br />

� + E � s(t)s(t + τ) ⊤��<br />

(2)<br />

are k-scal<strong>in</strong>gs for all τ. This models the fact that the sources<br />

are supposed to be block-decorrelated <strong>in</strong> the time-doma<strong>in</strong> for<br />

all time-shifts τ.<br />

A. Indeterm<strong>in</strong>acies<br />

Aga<strong>in</strong> A can only be found up to k-scal<strong>in</strong>g and kpermutation<br />

because condition 2 is <strong>in</strong>variant under this transformation.<br />

One sufficient condition for identifiability is to have<br />

pairwise different eigenvalues of at least one Rs(τ), however<br />

generalizations are possible, see [4] for the case k =1.Us<strong>in</strong>g<br />

whiten<strong>in</strong>g, we can aga<strong>in</strong> assume orthogonal A.<br />

(1)<br />

3500<br />

3000<br />

2500<br />

2000<br />

1500<br />

1000<br />

500<br />

0<br />

0.5 1 1.5 2 2.5 3 3.5 4<br />

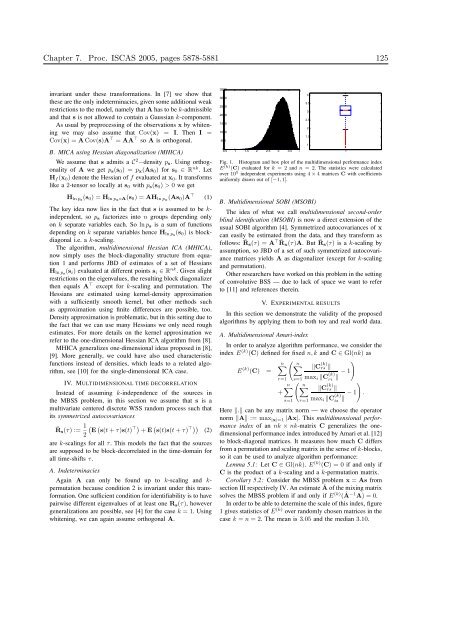

Fig. 1. Histogram and box plot of the multidimensional performance <strong>in</strong>dex<br />

E (k) (C) evaluated for k =2and n =2. The statistics were calculated<br />

over 10 5 <strong>in</strong>dependent experiments us<strong>in</strong>g 4 × 4 matrices C with coefficients<br />

uniformly drawn out of [−1, 1].<br />

B. Multidimensional SOBI (MSOBI)<br />

Theideaofwhatwecallmultidimensional second-order<br />

bl<strong>in</strong>d identification (MSOBI) is now a direct extension of the<br />

usual SOBI algorithm [4]. Symmetrized autocovariances of x<br />

can easily be estimated from the data, and they transform as<br />

follows: ¯ Rs(τ) =A⊤ ¯ Rx(τ)A. But ¯ Rs(τ) is a k-scal<strong>in</strong>g by<br />

assumption, so JBD of a set of such symmetrized autocovariance<br />

matrices yields A as diagonalizer (except for k-scal<strong>in</strong>g<br />

and permutation).<br />

Other researchers have worked on this problem <strong>in</strong> the sett<strong>in</strong>g<br />

of convolutive BSS — due to lack of space we want to refer<br />

to [11] and references there<strong>in</strong>.<br />

V. EXPERIMENTAL RESULTS<br />

In this section we demonstrate the validity of the proposed<br />

algorithms by apply<strong>in</strong>g them to both toy and real world data.<br />

A. Multidimensional Amari-<strong>in</strong>dex<br />

In order to analyze algorithm performance, we consider the<br />

<strong>in</strong>dex E (k) (C) def<strong>in</strong>ed for fixed n, k and C ∈ Gl(nk) as<br />

E (k) n�<br />

�<br />

n�<br />

�C<br />

(C) =<br />

r=1 s=1<br />

(k)<br />

rs �<br />

maxi �C (k)<br />

�<br />

− 1<br />

ri �<br />

n�<br />

�<br />

n�<br />

�C<br />

+<br />

s=1 r=1<br />

(k)<br />

rs �<br />

maxi �C (k)<br />

�<br />

− 1 .<br />

is �<br />

Here �.� can be any matrix norm — we choose the operator<br />

norm �A� := max |x|=1 |Ax|. Thismultidimensional performance<br />

<strong>in</strong>dex of an nk × nk-matrix C generalizes the onedimensional<br />

performance <strong>in</strong>dex <strong>in</strong>troduced by Amari et al. [12]<br />

to block-diagonal matrices. It measures how much C differs<br />

from a permutation and scal<strong>in</strong>g matrix <strong>in</strong> the sense of k-blocks,<br />

so it can be used to analyze algorithm performance:<br />

Lemma 5.1: Let C ∈ Gl(nk). E (k) (C) =0if and only if<br />

C is the product of a k-scal<strong>in</strong>g and a k-permutation matrix.<br />

Corollary 5.2: Consider the MBSS problem x = As from<br />

section III respectively IV. An estimate  of the mix<strong>in</strong>g matrix<br />

solves the MBSS problem if and only if E (k) ( Â−1 A)=0.<br />

In order to be able to determ<strong>in</strong>e the scale of this <strong>in</strong>dex, figure<br />

1 gives statistics of E (k) over randomly chosen matrices <strong>in</strong> the<br />

case k = n =2. The mean is 3.05 and the median 3.10.<br />

4<br />

3.5<br />

3<br />

2.5<br />

2<br />

1.5<br />

1<br />

1