Mathematics in Independent Component Analysis


Chapter 18. Proc. EUSIPCO 2006 249

$v_1 - v_0, \dots, v_n - v_0$ are linearly independent. Consider the following embedding

$$\mathbb{R}^n \to \mathbb{R}^{n+1} : (x_1, \dots, x_n) \mapsto (x_1, \dots, x_n, 1).$$

We may therefore identify the $p$-dimensional affine subspaces with the $(p+1)$-dimensional linear subspaces in $\mathbb{R}^{n+1}$ by embedding the generators and taking the linear closure. In fact, it is easy to see that we obtain a 1-to-1 mapping between the $p$-dimensional affine subspaces of $\mathbb{R}^n$ and the $(p+1)$-dimensional linear subspaces in $\mathbb{R}^{n+1}$ which intersect the orthogonal complement of $(0, \dots, 0, 1)$ only at the origin.

Hence we can reduce the affine case to calculations for linear subsets only. Note that since only eigenvectors of sums of projections onto the subsets $V_i$ can become centroids in the batch version of the k-means algorithm, any centroid is also in the image of the above embedding and can be identified uniquely with an affine subspace of the original problem.
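The embedding above can be sketched numerically. The following is a minimal illustration, not the authors' implementation: the function names `affine_to_linear` and `affine_point` are hypothetical, and NumPy's QR decomposition is assumed as one convenient way to obtain an orthonormal basis of the linear closure.

```python
import numpy as np

def affine_to_linear(generators):
    """Embed affine generators v_0..v_p (rows, each in R^n) into R^(n+1)
    and return an orthonormal basis of their linear span as columns."""
    V = np.asarray(generators, dtype=float)               # shape (p+1, n)
    embedded = np.hstack([V, np.ones((V.shape[0], 1))])   # x -> (x, 1)
    Q, _ = np.linalg.qr(embedded.T)                       # shape (n+1, p+1)
    return Q

def affine_point(Q):
    """Return one point of the affine subspace encoded by the basis Q,
    i.e. an element of span(Q) whose last coordinate equals 1."""
    last_row = Q[-1]
    coeffs = last_row / (last_row @ last_row)   # chosen so that last_row @ coeffs == 1
    x = Q @ coeffs
    return x[:-1]                               # drop the appended coordinate

# Illustrative input: the affine line through (1, 0) and (0, 1) in R^2
Q = affine_to_linear([[1.0, 0.0], [0.0, 1.0]])
point = affine_point(Q)
```

The recovered `point` lies on the original line, which indicates that the linear subspace in $\mathbb{R}^{n+1}$ retains the affine information.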

4. EXPERIMENTAL RESULTS

We finish by illustrating the algorithm in a few examples.

4.1 Toy example

As a toy example, let us first consider $10^4$ samples of $G_{4,2}$, namely planes chosen uniformly at random from the 6 possible 2-dimensional coordinate planes. In order to avoid any bias within the algorithm, the non-zero coefficients of the plane-representing matrices have been chosen uniformly from $O_2$. The samples have been corrupted by Gaussian noise with a signal-to-noise ratio of 10 dB. Application of the Grassmann k-means algorithm with $k = 6$ yields convergence after only 6 epochs, with the resulting 6 clusters having centroids $[V^i]$. The distance measure $\mu(V) := (|v_{i1} + v_{i2}| + |v_{i1} - v_{i2}|)_i$, where $v_{i1}$ and $v_{i2}$ are the entries of row $i$ of $V$, should be large only in two coordinates if $[V]$ is close to the corresponding 2-dimensional coordinate plane. And indeed, the found centroids have distance measures

$$\mu(V^i) = \begin{pmatrix} 0.02 \\ 0 \\ 1.9 \\ 1.9 \end{pmatrix}, \begin{pmatrix} 1.7 \\ 0.01 \\ 0.01 \\ 1.7 \end{pmatrix}, \begin{pmatrix} 1.7 \\ 0.01 \\ 1.7 \\ 0.02 \end{pmatrix}, \begin{pmatrix} 0.01 \\ 1.5 \\ 1.5 \\ 0 \end{pmatrix}, \begin{pmatrix} 2.0 \\ 2.0 \\ 0 \\ 0.01 \end{pmatrix}, \begin{pmatrix} 0.01 \\ 2.0 \\ 0.01 \\ 2.0 \end{pmatrix}.$$

Hence, the algorithm correctly chose all 6 coordinate planes as cluster centroids.
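To make the role of the distance measure concrete, the sketch below evaluates $\mu$ on noise-free coordinate planes of $\mathbb{R}^4$. It is an illustration under stated assumptions rather than the experiment itself: the helper names `coordinate_plane` and `mu` are hypothetical, the random orthogonal block is drawn via a QR decomposition of a Gaussian matrix (an assumed sampling scheme), and no noise or clustering is included. Since $|a + b| + |a - b| = 2\max(|a|, |b|)$, each non-zero row of an orthogonal $2 \times 2$ block yields a value between $\sqrt{2}$ and $2$, while the zero rows yield $0$.

```python
import itertools
import numpy as np

def coordinate_plane(n, coords, rng):
    """Return an n x 2 matrix spanning the coordinate plane given by `coords`,
    with a random orthogonal 2 x 2 block in the two selected rows."""
    Q, _ = np.linalg.qr(rng.standard_normal((2, 2)))   # random orthogonal matrix
    V = np.zeros((n, 2))
    V[list(coords), :] = Q
    return V

def mu(V):
    """Row-wise measure |v_i1 + v_i2| + |v_i1 - v_i2| from the text."""
    return np.abs(V[:, 0] + V[:, 1]) + np.abs(V[:, 0] - V[:, 1])

rng = np.random.default_rng(0)
planes = list(itertools.combinations(range(4), 2))     # the 6 coordinate planes of R^4
measures = {coords: mu(coordinate_plane(4, coords, rng)) for coords in planes}

for coords, m in measures.items():
    print(coords, np.round(m, 2))
```

For each plane, only the two selected coordinates carry a large value, which is the pattern used above to read off the coordinate plane from a centroid.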

4.2 Polytope identification

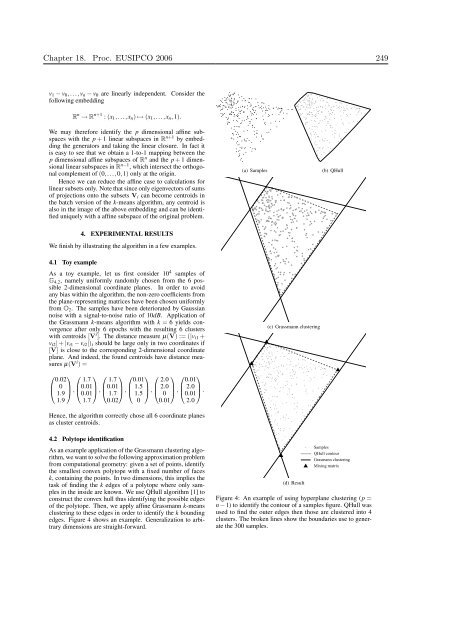

As an example application of the Grassmann clustering algorithm, we want to solve the following approximation problem from computational geometry: given a set of points, identify the smallest convex polytope with a fixed number of faces $k$ that contains the points. In two dimensions, this implies the task of finding the $k$ edges of a polytope where only samples in the inside are known. We use the QHull algorithm [1] to construct the convex hull, thus identifying the possible edges of the polytope. Then, we apply affine Grassmann k-means clustering to these edges in order to identify the $k$ bounding edges. Figure 4 shows an example. Generalization to arbitrary dimensions is straightforward.
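The hull-construction step can be sketched as follows. This is a minimal illustration under assumptions, not the setup used for Figure 4: the samples are drawn from a hypothetical square, SciPy's `ConvexHull` (which wraps the Qhull library) stands in for the QHull call, and the variable name `lifted_normals` is introduced here. Each hull edge lies on an affine line $\{x : a^\top x + b = 0\}$, and under the embedding $x \mapsto (x, 1)$ this line corresponds to the linear plane in $\mathbb{R}^3$ with normal $(a, b)$. The subsequent affine Grassmann k-means clustering of these edges is not shown.

```python
import numpy as np
from scipy.spatial import ConvexHull

rng = np.random.default_rng(0)
# Hypothetical data: 300 samples drawn uniformly inside a square
points = rng.uniform(-1.0, 1.0, size=(300, 2))

hull = ConvexHull(points)        # convex hull computed by Qhull
edges = points[hull.simplices]   # shape (num_edges, 2, 2): endpoints of each hull edge

# Each row of hull.equations is (a_1, a_2, b) with a @ x + b <= 0 for interior points.
# Normalizing the full row gives a unit normal of the lifted linear plane in R^3.
lifted_normals = hull.equations / np.linalg.norm(hull.equations, axis=1, keepdims=True)

print(len(edges), "candidate edges")
```

The rows of `lifted_normals` are one possible representation of the candidate edges as hyperplanes ($p = n - 1$) that a clustering step could then group into $k$ bounding edges.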

[Figure 4 panels: (a) Samples, (b) QHull, (c) Grassmann clustering, (d) Result. Legend: Samples, QHull contour, Grassmann clustering, Mixing matrix.]

Figure 4: An example of using hyperplane clustering ($p = n - 1$) to identify the contour of a sampled figure. QHull was used to find the outer edges, which were then clustered into 4 clusters. The broken lines show the boundaries used to generate the 300 samples.