Mathematics in Independent Component Analysis

208 Chapter 15. Neurocomputing, 69:1485-1501, 2006

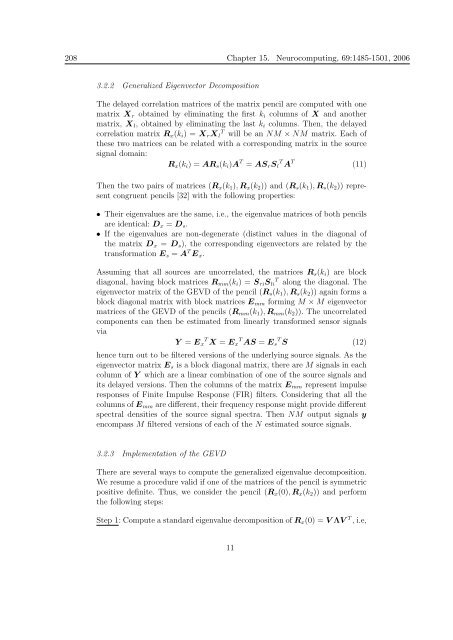

3.2.2 Generalized Eigenvector Decomposition

The delayed correlation matrices of the matrix pencil are computed from two shifted versions of the data matrix X: a matrix $X_r$, obtained by eliminating the first $k_i$ columns of X, and a matrix $X_l$, obtained by eliminating the last $k_i$ columns. The delayed correlation matrix $R_x(k_i) = X_r X_l^T$ is then an NM × NM matrix. Each of the two delayed correlation matrices of the pencil can be related to a corresponding matrix in the source signal domain:

$$R_x(k_i) = A R_s(k_i) A^T = A S_r S_l^T A^T \qquad (11)$$
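As a rough illustration, the following NumPy sketch builds $R_x(k)$ exactly as described, assuming X stores one signal per row and one time sample per column, so that dropping columns shifts the time axis. The sources S and the mixing matrix A are random placeholders, the size 6 stands in for NM, the helper `delayed_correlation` is a hypothetical name, and no normalization by the number of samples is applied. Because X = AS implies $X_r = A S_r$ and $X_l = A S_l$, the check reproduces Eq. (11).

```python
import numpy as np

def delayed_correlation(X, k):
    """Delayed correlation matrix R_x(k) = X_r X_l^T (hypothetical helper, no normalization).

    X_r drops the first k columns of X and X_l drops the last k columns,
    so both keep L - k samples that are offset by k time steps.
    """
    L = X.shape[1]
    X_r = X[:, k:]         # samples k, ..., L-1
    X_l = X[:, : L - k]    # samples 0, ..., L-k-1 (X[:, :-k] would be empty for k = 0)
    return X_r @ X_l.T

rng = np.random.default_rng(0)
n_rows, L = 6, 500                           # n_rows stands in for NM
S = rng.standard_normal((n_rows, L))         # placeholder source matrix
A = rng.standard_normal((n_rows, n_rows))    # placeholder mixing matrix
X = A @ S                                    # sensor signals

k = 2
R_x = delayed_correlation(X, k)
R_s = delayed_correlation(S, k)
print(R_x.shape)                             # (6, 6)
print(np.allclose(R_x, A @ R_s @ A.T))       # True
```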

Then the two pairs of matrices $(R_x(k_1), R_x(k_2))$ and $(R_s(k_1), R_s(k_2))$ represent congruent pencils [32] with the following properties:

• Their eigenvalues are the same, i.e., the eigenvalue matrices of both pencils are identical: $D_x = D_s$.

• If the eigenvalues are non-degenerate (distinct values on the diagonal of the matrix $D_x = D_s$), the corresponding eigenvectors are related by the transformation $E_s = A^T E_x$.
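Both properties can be checked numerically. The sketch below is illustrative only: it assumes temporally correlated AR(1) sources (the coefficients are arbitrary), a well-conditioned random mixing matrix A, and arbitrary lags $k_1 = 1$, $k_2 = 2$, and it uses a hypothetical `delayed_correlation` helper implementing $X_r X_l^T$. `scipy.linalg.eig(a, b)` solves $a v = \lambda b v$. The eigenvector relation follows from Eq. (11): if $R_x(k_1) e = \lambda R_x(k_2) e$, then $A R_s(k_1) A^T e = \lambda A R_s(k_2) A^T e$, and cancelling the invertible A gives $R_s(k_1)(A^T e) = \lambda R_s(k_2)(A^T e)$.

```python
import numpy as np
from scipy.linalg import eig
from scipy.signal import lfilter

def delayed_correlation(X, k):
    """Hypothetical helper: R(k) = X_r X_l^T, X_r without the first k columns, X_l without the last k."""
    L = X.shape[1]
    return X[:, k:] @ X[:, : L - k].T

rng = np.random.default_rng(1)
L = 5000
coeffs = [0.9, 0.6, -0.5, 0.3]   # illustrative AR(1) coefficients, one per source
# Row i of S follows s[t] = a_i * s[t-1] + e[t]
S = np.vstack([lfilter([1.0], [1.0, -a], rng.standard_normal(L)) for a in coeffs])
n = S.shape[0]
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
A = Q @ np.diag([1.0, 2.0, 0.5, 1.5])   # illustrative mixing matrix, condition number 4
X = A @ S

k1, k2 = 1, 2
R_s1, R_s2 = delayed_correlation(S, k1), delayed_correlation(S, k2)
# scipy.linalg.eig(a, b) solves a @ v = w * (b @ v)
d_x, E_x = eig(delayed_correlation(X, k1), delayed_correlation(X, k2))
d_s, _ = eig(R_s1, R_s2)

# Property 1: identical eigenvalues (compared as unordered sets)
gap = np.abs(d_x[:, None] - d_s[None, :]).min(axis=1)
print(np.all(gap <= 1e-6 * np.abs(d_x)))

# Property 2: E_s = A^T E_x solves the source-domain pencil with the same eigenvalues
E_s = A.T @ E_x
residual = R_s1 @ E_s - (R_s2 @ E_s) * d_x        # column j scaled by d_x[j]
scale = (np.linalg.norm(R_s1) + np.abs(d_x) * np.linalg.norm(R_s2)) * np.linalg.norm(E_s, axis=0)
print(np.all(np.linalg.norm(residual, axis=0) <= 1e-8 * scale))
```

The residual is compared against a scale that grows with $|\lambda|$, because generalized eigensolvers guarantee a small residual relative to the size of both pencil matrices, not an absolute one.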

Assuming that all sources are uncorrelated, the matrices $R_s(k_i)$ are block diagonal, having block matrices $R_{mm}(k_i) = S_{ri} S_{li}^T$ along the diagonal. The eigenvector matrix of the GEVD of the pencil $(R_s(k_1), R_s(k_2))$ again forms a block diagonal matrix, with block matrices $E_{mm}$ forming the M × M eigenvector matrices of the GEVD of the pencils $(R_{mm}(k_1), R_{mm}(k_2))$. The uncorrelated components can then be estimated from linearly transformed sensor signals via

$$Y = E_x^T X = E_x^T A S = E_s^T S \qquad (12)$$

and hence turn out to be filtered versions of the underlying source signals. As the eigenvector matrix $E_s$ is block diagonal, Y contains M signals per source, each a linear combination of one source signal and its delayed versions. The columns of the matrix $E_{mm}$ can therefore be read as impulse responses of Finite Impulse Response (FIR) filters. Since all the columns of $E_{mm}$ are different, their frequency responses generally differ as well, so the M filtered versions of a source may emphasize different parts of its spectrum. Hence the NM output signals y encompass M filtered versions of each of the N estimated source signals.
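The block structure and its FIR reading can be illustrated on synthetic matrices. This is a sketch under simplifying assumptions, not the authors' implementation: the M × M blocks are random and are made symmetric, with the second one positive definite, so that `scipy.linalg.eigh(a, b)` applies (actual delayed correlation blocks need not be symmetric). The sizes N = 2, M = 3 and the helper `random_pencil_block` are hypothetical. The code checks that every generalized eigenvector of a block-diagonal pencil is supported on a single block, then takes the eigenvectors $E_{11}$ of the first block pencil, reads one column as an FIR impulse response, and verifies that filtering a source with it equals a linear combination of the source and its delayed copies (assuming row i of a source block holds the source delayed by i samples). The magnitude responses of all M columns are evaluated with a zero-padded FFT.

```python
import numpy as np
from scipy.linalg import block_diag, eigh

rng = np.random.default_rng(2)
N, M = 2, 3   # N sources, M delayed copies per source (illustrative sizes)

def random_pencil_block(M):
    """Hypothetical block pair (R_mm(k1), R_mm(k2)); the second matrix is positive definite."""
    B = rng.standard_normal((M, M))
    C = rng.standard_normal((M, M))
    return (B + B.T) / 2, C @ C.T + M * np.eye(M)

blocks = [random_pencil_block(M) for _ in range(N)]
R_s1 = block_diag(*[a for a, _ in blocks])
R_s2 = block_diag(*[b for _, b in blocks])

# Generalized eigenvectors of the block-diagonal pencil: R_s1 e = lambda R_s2 e
_, E_s = eigh(R_s1, R_s2)

# Norm of each eigenvector restricted to each of the N blocks (rows = eigenvectors)
block_norm = np.array([[np.linalg.norm(E_s[m * M:(m + 1) * M, j]) for m in range(N)]
                       for j in range(N * M)])
leak = np.sort(block_norm, axis=1)[:, 0].max()   # largest norm outside the dominant block
print(leak < 1e-8)

# E_11: eigenvectors of the first block pencil; each column is an FIR impulse response
_, E_11 = eigh(*blocks[0])
h = E_11[:, 0]
s = rng.standard_normal(200)                       # placeholder source signal
y = np.convolve(s, h)[: len(s)]                    # y[t] = sum_i h[i] * s[t - i]

# Same output written as a linear combination of s and its delayed copies
delayed = np.vstack([np.concatenate([np.zeros(i), s[: len(s) - i]]) for i in range(M)])
print(np.allclose(y, h @ delayed))

# Magnitude responses of all M filters on a 256-point grid (columns zero-padded)
H = np.abs(np.fft.rfft(E_11, n=256, axis=0))
print(H.shape)   # (129, 3)
```

With measured data the matrices $R_s(k_i)$ are only approximately block diagonal, because finite-sample cross-correlations between uncorrelated sources are small but not exactly zero.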

3.2.3 Implementation of the GEVD

There are several ways to compute the generalized eigenvalue decomposition. We summarize a procedure that is valid if one of the matrices of the pencil is symmetric positive definite. Since $R_x(0) = X X^T$ is symmetric positive semidefinite by construction (and positive definite when X has full row rank), we consider the pencil $(R_x(0), R_x(k_2))$ and perform the following steps:

Step 1: Compute a standard eigenvalue decomposition of $R_x(0) = V \Lambda V^T$, i.e.,
