Mathematics in Independent Component Analysis

Mathematics in Independent Component Analysis

Mathematics in Independent Component Analysis

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

1.4. Sparseness 31<br />

Sparse projection<br />

Algorithmically, we followed Hoyer’s approach and solved the<br />

sparse NMF problem by alternatively updat<strong>in</strong>g A and S us<strong>in</strong>g<br />

gradient descent on the residual error �X − AS� 2 . After each<br />

update, the columns of A and the rows of S are projected onto<br />

M := {s|�s�1 = σ} ∩ {s|�s�2 = 1} ∩ {s ≥ 0} (1.14)<br />

<strong>in</strong> order to satisfy the sparseness conditions of (1.13). For this,<br />

po<strong>in</strong>ts x ∈ R n have to be projected onto adjacent po<strong>in</strong>ts <strong>in</strong> M,<br />

which was def<strong>in</strong>ed as �x − p�2 ≤ �x − q�2 for all q ∈ M and<br />

denoted as p ⊳ x.<br />

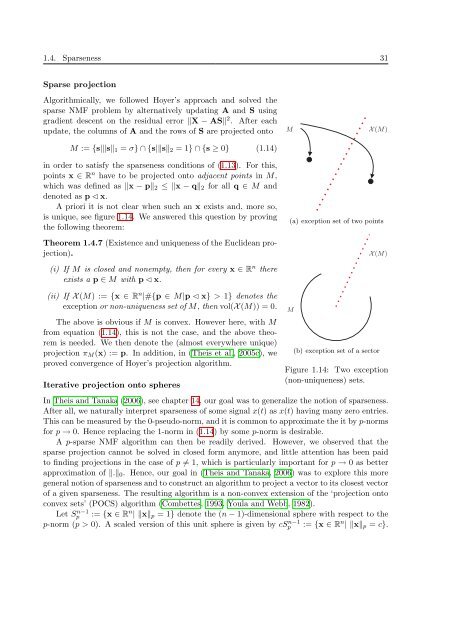

A priori it is not clear when such an x exists and, more so,<br />

is unique, see figure 1.14. We answered this question by prov<strong>in</strong>g<br />

the follow<strong>in</strong>g theorem:<br />

Theorem 1.4.7 (Existence and uniqueness of the Euclidean projection).<br />

M X (M)<br />

(i) If M is closed and nonempty, then for every x ∈ R n there<br />

exists a p ∈ M with p ⊳ x.<br />

(ii) If X (M) := {x ∈ R n |#{p ∈ M|p ⊳ x} > 1} denotes the<br />

exception or non-uniqueness set of M, then vol(X (M)) = 0.<br />

M X (M)<br />

(a) exception set of two po<strong>in</strong>ts<br />

(a) exception set of two po<strong>in</strong>ts<br />

M<br />

(b) excepti<br />

Figure 1: Two examples of exception s<br />

In other words, the exception set conta<strong>in</strong>s the set of<br />

can’t uniquely project. Our goal is to show that this set<br />

very small. Figure 1 shows the exception set of two diffe<br />

Note that if x ∈ M then x ⊳ x, and x is the only poi<br />

So M ∩ X (M) = ∅. Obviously the exception set of an a<br />

is empty. Indeed, we can prove more generally:<br />

Lemma 2.4. Let M ⊂ Rn X (M)<br />

M<br />

be convex. Then X (M) = ∅.<br />

For the proof we need the follow<strong>in</strong>g simple lemma, wh<br />

2-norm as it uses the scalar product.<br />

Lemma 2.5. Let a, b ∈ Rn such that �a + b�2 = �a�2 +<br />

are coll<strong>in</strong>ear.<br />

Proof. By tak<strong>in</strong>g squares we get �a + b�2 = �a�2 + 2�a�<br />

�a� 2 + 2〈a, b〉 + �b� 2 = �a� 2 + 2�a��b� +<br />

if 〈., .〉 denotes the (symmetric) scalar product. Hence 〈<br />

and b are coll<strong>in</strong>ear accord<strong>in</strong>g to the Schwarz <strong>in</strong>equality.<br />

Proof of lemma 2.4. Assume X (M) �= ∅. Then let x ∈<br />

M such that pi ⊳ x. By assumption q := 1<br />

2 (p1 + p2) ∈ M<br />

�x − p1� ≤ �x − q� ≤ 1<br />

2 �x − p1� + 1<br />

�x − p2�<br />

2<br />

because both pi are adjacent to x. Therefore �x−q� = � 1<br />

(a) exception set of two po<strong>in</strong>ts<br />

(b) exception set of a sector<br />

Figure 1: Two examples of exception sets.<br />

In other words, the exception set conta<strong>in</strong>s the set of po<strong>in</strong>ts from which we<br />

can’t uniquely project. Our goal is to show that this set vanishes or is at least<br />

very small. Figure 1 shows the exception set of two different sets.<br />

Note that if x ∈ M then x ⊳ x, and x is the only po<strong>in</strong>t with that property.<br />

So M ∩ X (M) = ∅. Obviously the exception set of an aff<strong>in</strong>e l<strong>in</strong>ear hyperspace<br />

is empty. Indeed, we can prove more generally:<br />

Lemma 2.4. Let M ⊂ R<br />

2<br />

and application of lemma 2.5 shows that x − p1 = α(x<br />

3<br />

n be convex. Then X (M) = ∅.<br />

For the proof we need the follow<strong>in</strong>g simple lemma, which only works for the<br />

2-norm as it uses the scalar product.<br />

Lemma 2.5. Let a, b ∈ Rn such that �a + b�2 = �a�2 + �b�2. Then a and b<br />

are coll<strong>in</strong>ear.<br />

Proof. By tak<strong>in</strong>g squares we get �a + b�2 = �a�2 + 2�a��b� + �b�2 , so<br />

�a� 2 + 2〈a, b〉 + �b� 2 = �a� 2 + 2�a��b� + �b� 2<br />

The above is obvious if M is convex. However here, with M<br />

from equation (1.14), this is not the case, and the above theorem<br />

is needed. We then denote the (almost everywhere unique)<br />

projection πM(x) := p. In addition, <strong>in</strong> (Theis et al., 2005c), we (b) exception set of a sector<br />

proved convergence of Hoyer’s projection algorithm.<br />

Figure 1.14: Two exception<br />

(non-uniqueness) sets.<br />

Iterative projection onto spheres<br />

In Theis and Tanaka (2006), see chapter 14, our goal was to generalize the notion of sparseness.<br />

After all, we naturally <strong>in</strong>terpret sparseness of some signal x(t) as x(t) hav<strong>in</strong>g many zero entries.<br />

This can be measured by the 0-pseudo-norm, and it is common to approximate the it by p-norms<br />

for p → 0. Hence replac<strong>in</strong>g the 1-norm <strong>in</strong> (1.14) by some p-norm is desirable.<br />

A p-sparse NMF algorithm can then be readily derived. However, we observed that the<br />

sparse projection cannot be solved <strong>in</strong> closed form anymore, and little attention has been paid<br />

to f<strong>in</strong>d<strong>in</strong>g projections <strong>in</strong> the case of p �= 1, which is particularly important for p → 0 as better<br />

approximation of �.�0. Hence, our goal <strong>in</strong> (Theis and Tanaka, 2006) was to explore this more<br />

general notion of sparseness and to construct an algorithm to project a vector to its closest vector<br />

of a given sparseness. The result<strong>in</strong>g algorithm is a non-convex extension of the ‘projection onto<br />

convex sets’ (POCS) algorithm (Combettes, 1993, Youla and Webb, 1982).<br />

Let S<br />

if 〈., .〉 denotes the (symmetric) scalar product. Hence 〈a, b〉 = �a��b� and a<br />

and b are coll<strong>in</strong>ear accord<strong>in</strong>g to the Schwarz <strong>in</strong>equality.<br />

n−1<br />

p := {x ∈ Rn | �x�p = 1} denote the (n − 1)-dimensional sphere with respect to the<br />

p-norm (p > 0). A scaled version of this unit sphere is given by cSn−1 p := {x ∈ Rn | �x�p = c}.<br />

Proof of lemma 2.4. Assume X (M) �= ∅. Then let x ∈ X (M) and p1 �= p2 ∈<br />

M such that pi ⊳ x. By assumption q := 1<br />

2 (p1 + p2) ∈ M. But<br />

�x − p1� ≤ �x − q� ≤ 1<br />

2 �x − p1� + 1<br />

2 �x − p2� = �x − p1�<br />

(x−p1)�+� 1<br />

2 (x−p2)�<br />

because both pi are adjacent to x. Therefore �x−q� = � 1<br />

2<br />

and application of lemma 2.5 shows that x − p1 = α(x − p2). Tak<strong>in</strong>g norms<br />

3