Mathematics in Independent Component Analysis

Mathematics in Independent Component Analysis

Mathematics in Independent Component Analysis

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

294 Chapter 21. Proc. BIOMED 2005, pages 209-212<br />

1.5<br />

1<br />

0.5<br />

0<br />

−0.5<br />

−1<br />

−1.5<br />

−2<br />

4<br />

2<br />

0<br />

−2<br />

−4<br />

−3<br />

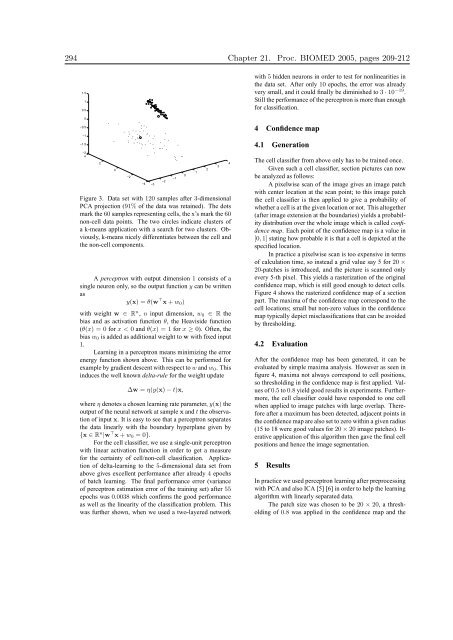

Figure 3. Data set with 120 samples after 3-dimensional<br />

PCA projection (91% of the data was reta<strong>in</strong>ed). The dots<br />

mark the 60 samples represent<strong>in</strong>g cells, the x’s mark the 60<br />

non-cell data po<strong>in</strong>ts. The two circles <strong>in</strong>dicate clusters of<br />

a k-means application with a search for two clusters. Obviously,<br />

k-means nicely differentiates between the cell and<br />

the non-cell components.<br />

A perceptron with output dimension 1 consists of a<br />

s<strong>in</strong>gle neuron only, so the output function y can be written<br />

as<br />

y(x) = θ(w ⊤ x + w0)<br />

with weight w ∈ R n , n <strong>in</strong>put dimension, w0 ∈ R the<br />

bias and as activation function θ, the Heaviside function<br />

(θ(x) = 0 for x < 0 and θ(x) = 1 for x ≥ 0). Often, the<br />

bias w0 is added as additional weight to w with fixed <strong>in</strong>put<br />

1.<br />

Learn<strong>in</strong>g <strong>in</strong> a perceptron means m<strong>in</strong>imiz<strong>in</strong>g the error<br />

energy function shown above. This can be performed for<br />

example by gradient descent with respect to w and w0. This<br />

<strong>in</strong>duces the well known delta-rule for the weight update<br />

−2<br />

−1<br />

∆w = η(y(x) − t)x,<br />

where η denotes a chosen learn<strong>in</strong>g rate parameter, y(x) the<br />

output of the neural network at sample x and t the observation<br />

of <strong>in</strong>put x. It is easy to see that a perceptron separates<br />

the data l<strong>in</strong>early with the boundary hyperplane given by<br />

{x ∈ R n |w ⊤ x + w0 = 0}.<br />

For the cell classifier, we use a s<strong>in</strong>gle-unit perceptron<br />

with l<strong>in</strong>ear activation function <strong>in</strong> order to get a measure<br />

for the certa<strong>in</strong>ty of cell/non-cell classification. Application<br />

of delta-learn<strong>in</strong>g to the 5-dimensional data set from<br />

above gives excellent performance after already 4 epochs<br />

of batch learn<strong>in</strong>g. The f<strong>in</strong>al performance error (variance<br />

of perceptron estimation error of the tra<strong>in</strong><strong>in</strong>g set) after 55<br />

epochs was 0.0038 which confirms the good performance<br />

as well as the l<strong>in</strong>earity of the classification problem. This<br />

was further shown, when we used a two-layered network<br />

0<br />

1<br />

2<br />

3<br />

4<br />

with 5 hidden neurons <strong>in</strong> order to test for nonl<strong>in</strong>earities <strong>in</strong><br />

the data set. After only 10 epochs, the error was already<br />

very small, and it could f<strong>in</strong>ally be dim<strong>in</strong>ished to 3 · 10 −19 .<br />

Still the performance of the perceptron is more than enough<br />

for classification.<br />

4 Confidence map<br />

4.1 Generation<br />

The cell classifier from above only has to be tra<strong>in</strong>ed once.<br />

Given such a cell classifier, section pictures can now<br />

be analyzed as follows:<br />

A pixelwise scan of the image gives an image patch<br />

with center location at the scan po<strong>in</strong>t; to this image patch<br />

the cell classifier is then applied to give a probability of<br />

whether a cell is at the given location or not. This altogether<br />

(after image extension at the boundaries) yields a probability<br />

distribution over the whole image which is called confidence<br />

map. Each po<strong>in</strong>t of the confidence map is a value <strong>in</strong><br />

[0, 1] stat<strong>in</strong>g how probable it is that a cell is depicted at the<br />

specified location.<br />

In practice a pixelwise scan is too expensive <strong>in</strong> terms<br />

of calculation time, so <strong>in</strong>stead a grid value say 5 for 20 ×<br />

20-patches is <strong>in</strong>troduced, and the picture is scanned only<br />

every 5-th pixel. This yields a rasterization of the orig<strong>in</strong>al<br />

confidence map, which is still good enough to detect cells.<br />

Figure 4 shows the rasterized confidence map of a section<br />

part. The maxima of the confidence map correspond to the<br />

cell locations; small but non-zero values <strong>in</strong> the confidence<br />

map typically depict misclassifications that can be avoided<br />

by threshold<strong>in</strong>g.<br />

4.2 Evaluation<br />

After the confidence map has been generated, it can be<br />

evaluated by simple maxima analysis. However as seen <strong>in</strong><br />

figure 4, maxima not always correspond to cell positions,<br />

so threshold<strong>in</strong>g <strong>in</strong> the confidence map is first applied. Values<br />

of 0.5 to 0.8 yield good results <strong>in</strong> experiments. Furthermore,<br />

the cell classifier could have responded to one cell<br />

when applied to image patches with large overlap. Therefore<br />

after a maximum has been detected, adjacent po<strong>in</strong>ts <strong>in</strong><br />

the confidence map are also set to zero with<strong>in</strong> a given radius<br />

(15 to 18 were good values for 20 × 20 image patches). Iterative<br />

application of this algorithm then gave the f<strong>in</strong>al cell<br />

positions and hence the image segmentation.<br />

5 Results<br />

In practice we used perceptron learn<strong>in</strong>g after preprocess<strong>in</strong>g<br />

with PCA and also ICA [5] [6] <strong>in</strong> order to help the learn<strong>in</strong>g<br />

algorithm with l<strong>in</strong>early separated data.<br />

The patch size was chosen to be 20 × 20, a threshold<strong>in</strong>g<br />

of 0.8 was applied <strong>in</strong> the confidence map and the