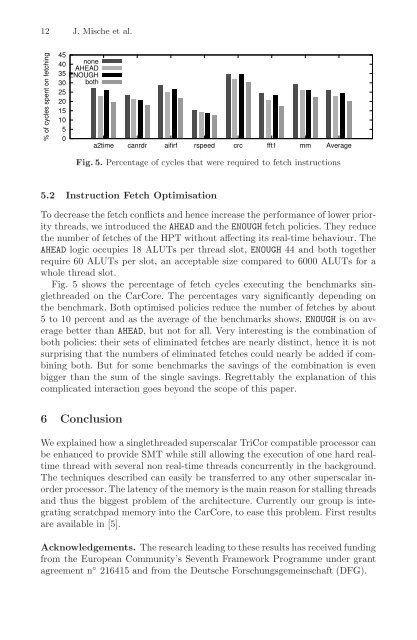

12 J. Mische et al.

[Fig. 5. Percentage of cycles that were required to fetch instructions. Y-axis: % of cycles spent on fetching; series: none, AHEAD, ENOUGH, both; benchmarks: a2time, canrdr, aifirf, rspeed, crc, fft1, mm, Average.]

5.2 Instruction Fetch Optimisation

To decrease fetch conflicts and hence increase the performance of lower-priority threads, we introduced the AHEAD and the ENOUGH fetch policies. They reduce the number of fetches of the HPT without affecting its real-time behaviour. The AHEAD logic occupies 18 ALUTs per thread slot, ENOUGH 44, and both together require 60 ALUTs per slot, an acceptable size compared to the 6000 ALUTs of a whole thread slot.

Fig. 5 shows the percentage of fetch cycles when executing the benchmarks single-threaded on the CarCore. The percentages vary significantly depending on the benchmark. Both optimised policies reduce the number of fetches by about 5 to 10 percent and, as the average over the benchmarks shows, ENOUGH is on average better than AHEAD, though not for every benchmark. Very interesting is the combination of both policies: their sets of eliminated fetches are nearly disjoint, hence it is not surprising that the numbers of eliminated fetches can nearly be added when combining both. For some benchmarks, however, the savings of the combination are even bigger than the sum of the single savings. Regrettably, the explanation of this complicated interaction goes beyond the scope of this paper.

6 Conclusion

We explained how a single-threaded superscalar TriCore-compatible processor can be enhanced to provide SMT while still allowing the execution of one hard real-time thread concurrently with several non-real-time threads in the background. The techniques described can easily be transferred to any other superscalar in-order processor.

The latency of the memory is the main reason for stalling threads and thus the biggest problem of the architecture. Currently our group is integrating scratchpad memory into the CarCore to ease this problem. First results are available in [5].

Acknowledgements. The research leading to these results has received funding from the European Community’s Seventh Framework Programme under grant agreement n° 216415 and from the Deutsche Forschungsgemeinschaft (DFG).

How to Enhance a Superscalar Processor 13

References

1. Tullsen, D.M., Eggers, S.J., Levy, H.M.: Simultaneous multithreading: maximizing on-chip parallelism. In: ISCA 1995, pp. 392–403 (1995)
2. Gerosa, G., Curtis, S., D’Addeo, M., Jiang, B., Kuttanna, B., Merchant, F., Patel, B., Taufique, M., Samarchi, H.: A Sub-1W to 2W Low-Power IA Processor for Mobile Internet Devices and Ultra-Mobile PCs in 45nm Hi-K Metal Gate CMOS. In: IEEE International Solid-State Circuits Conference (ISSCC 2008), pp. 256–611 (2008)
3. Mische, J., Uhrig, S., Kluge, F., Ungerer, T.: Exploiting Spare Resources of In-order SMT Processors Executing Hard Real-time Threads. In: ICCD 2008, pp. 371–376 (2008)
4. Mische, J., Uhrig, S., Kluge, F., Ungerer, T.: IPC Control for Multiple Real-Time Threads on an In-order SMT Processor. In: Seznec, A., Emer, J., O’Boyle, M., Martonosi, M., Ungerer, T. (eds.) HiPEAC 2009. LNCS, vol. 5409, pp. 125–139. Springer, Heidelberg (2009)
5. Metzlaff, S., Uhrig, S., Mische, J., Ungerer, T.: Predictable dynamic instruction scratchpad for simultaneous multithreaded processors. In: Proceedings of the 9th Workshop on Memory Performance (MEDEA 2008), pp. 38–45 (2008)
6. Jain, R., Hughes, C.J., Adve, S.V.: Soft Real-Time Scheduling on Simultaneous Multithreaded Processors. In: RTSS 2002, pp. 134–145 (2002)
7. Dorai, G.K., Yeung, D., Choi, S.: Optimizing SMT Processors for High Single-Thread Performance. Journal of Instruction-Level Parallelism 5 (April 2003)
8. Cazorla, F.J., Knijnenburg, P.M., Sakellariou, R., Fernández, E., Ramirez, A., Valero, M.: Predictable Performance in SMT Processors. In: Proceedings of the 1st Conference on Computing Frontiers, pp. 433–443 (2004)
9. Yamasaki, N., Magaki, I., Itou, T.: Prioritized SMT Architecture with IPC Control Method for Real-Time Processing. In: RTAS 2007, pp. 12–21 (2007)
10. Hily, S., Seznec, A.: Out-Of-Order Execution May Not Be Cost-Effective on Processors Featuring Simultaneous Multithreading. In: HPCA-5, pp. 64–67 (1999)
11. Zang, C., Imai, S., Frank, S., Kimura, S.: Issue Mechanism for Embedded Simultaneous Multithreading Processor. IEICE Transactions on Fundamentals of Electronics, Communications and Computer Sciences E91-A(4), 1092–1100 (2008)
12. Moon, B.I., Yoon, H., Yun, I., Kang, S.: An In-Order SMT Architecture with Static Resource Partitioning for Consumer Applications. In: Liew, K.-M., Shen, H., See, S., Cai, W. (eds.) PDCAT 2004. LNCS, vol. 3320, pp. 539–544. Springer, Heidelberg (2004)
13. El-Moursy, A., Garg, R., Albonesi, D.H., Dwarkadas, S.: Partitioning Multi-Threaded Processors with a Large Number of Threads. In: IEEE International Symposium on Performance Analysis of Systems and Software, March 2005, pp. 112–123 (2005)
14. Raasch, S.E., Reinhardt, S.K.: The Impact of Resource Partitioning on SMT Processors. In: PACT 2003, pp. 15–25 (2003)
15. El-Haj-Mahmoud, A., AL-Zawawi, A.S., Anantaraman, A., Rotenberg, E.: Virtual Multiprocessor: An Analyzable, High-Performance Architecture for Real-Time Computing. In: CASES 2005, pp. 213–224 (2005)
