Architecture of Computing Systems (Lecture Notes in Computer ...

Architecture of Computing Systems (Lecture Notes in Computer ...

Architecture of Computing Systems (Lecture Notes in Computer ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

22 G. Aşılıoğlu, E.M. Kaya, and O. Erg<strong>in</strong><br />

The head po<strong>in</strong>ters <strong>of</strong> each FIFO queues are also separate registers that are updated<br />

from the commit stage <strong>of</strong> the pipel<strong>in</strong>e. When an <strong>in</strong>struction is committed and removed<br />

from the reorder buffer it simply <strong>in</strong>crements the head po<strong>in</strong>ter <strong>of</strong> its dest<strong>in</strong>ation architectural<br />

register. Note that <strong>in</strong>structions do not access the payload area from the commit<br />

stage but they only access the head po<strong>in</strong>ter register.<br />

Processor is stalled at the rename stage if the contents <strong>of</strong> the head po<strong>in</strong>ter register<br />

is just one over the contents <strong>of</strong> the tail po<strong>in</strong>ter register for an architectural register that<br />

needs to be renamed. S<strong>in</strong>ce these registers are just <strong>in</strong>cremented unless there is an<br />

exception (such as a branch misprediction), they are better be implemented as a<br />

counter with parallel data load<strong>in</strong>g capability.<br />

The checkpo<strong>in</strong>t table that is needed to recover the contents <strong>of</strong> the po<strong>in</strong>ter register <strong>in</strong><br />

case <strong>of</strong> an exception or misprediction is a pla<strong>in</strong> payload structure that only holds the<br />

values <strong>of</strong> the registers just at the time a branch reaches the rename stage. That structure<br />

is implemented by us<strong>in</strong>g SRAM bitcells and it is smaller than a register file<br />

which makes it possible to access this structure <strong>in</strong> less than a cycle.<br />

Complexity is moved to the po<strong>in</strong>ter registers <strong>in</strong> the proposed scheme s<strong>in</strong>ce these<br />

registers are actually counters but they also need a logic for immediate data load<strong>in</strong>g<br />

and comparison <strong>of</strong> head and tail po<strong>in</strong>ters. There is also a control logic that allows the<br />

read<strong>in</strong>g and writ<strong>in</strong>g <strong>of</strong> values by us<strong>in</strong>g only the contents <strong>of</strong> the tail po<strong>in</strong>ter.<br />

7 Results and Discussions<br />

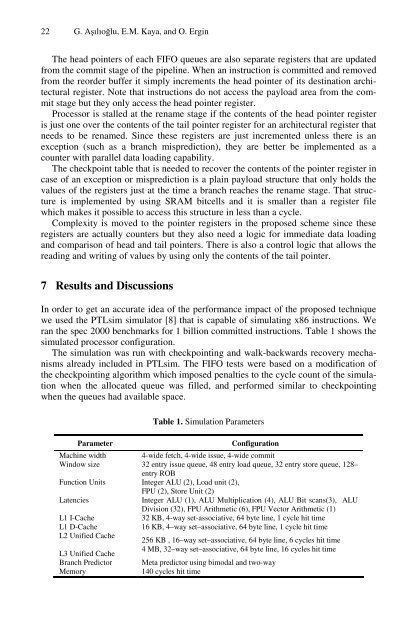

In order to get an accurate idea <strong>of</strong> the performance impact <strong>of</strong> the proposed technique<br />

we used the PTLsim simulator [8] that is capable <strong>of</strong> simulat<strong>in</strong>g x86 <strong>in</strong>structions. We<br />

ran the spec 2000 benchmarks for 1 billion committed <strong>in</strong>structions. Table 1 shows the<br />

simulated processor configuration.<br />

The simulation was run with checkpo<strong>in</strong>t<strong>in</strong>g and walk-backwards recovery mechanisms<br />

already <strong>in</strong>cluded <strong>in</strong> PTLsim. The FIFO tests were based on a modification <strong>of</strong><br />

the checkpo<strong>in</strong>t<strong>in</strong>g algorithm which imposed penalties to the cycle count <strong>of</strong> the simulation<br />

when the allocated queue was filled, and performed similar to checkpo<strong>in</strong>t<strong>in</strong>g<br />

when the queues had available space.<br />

Table 1. Simulation Parameters<br />

Parameter Configuration<br />

Mach<strong>in</strong>e width 4-wide fetch, 4-wide issue, 4-wide commit<br />

W<strong>in</strong>dow size 32 entry issue queue, 48 entry load queue, 32 entry store queue, 128–<br />

entry ROB<br />

Function Units Integer ALU (2), Load unit (2),<br />

FPU (2), Store Unit (2)<br />

Latencies<br />

Integer ALU (1), ALU Multiplication (4), ALU Bit scans(3), ALU<br />

Division (32), FPU Arithmetic (6), FPU Vector Arithmetic (1)<br />

L1 I-Cache 32 KB, 4-way set-associative, 64 byte l<strong>in</strong>e, 1 cycle hit time<br />

L1 D-Cache 16 KB, 4–way set–associative, 64 byte l<strong>in</strong>e, 1 cycle hit time<br />

L2 Unified Cache<br />

256 KB , 16–way set–associative, 64 byte l<strong>in</strong>e, 6 cycles hit time<br />

L3 Unified Cache<br />

4 MB, 32–way set–associative, 64 byte l<strong>in</strong>e, 16 cycles hit time<br />

Branch Predictor Meta predictor us<strong>in</strong>g bimodal and two-way<br />

Memory 140 cycles hit time