PPKE ITK PhD and MPhil Thesis Classes

PPKE ITK PhD and MPhil Thesis Classes

PPKE ITK PhD and MPhil Thesis Classes

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

42<br />

2. MAPPING THE NUMERICAL SIMULATIONS OF PARTIAL<br />

DIFFERENTIAL EQUATIONS<br />

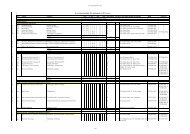

operations (channel stall cycle). The utilization of these SPEs is less than 15%,<br />

while the other SPEs are almost as efficient as a single SPE. Investigating the<br />

required memory b<strong>and</strong>width shows that one SPE should load 2 32bit floating<br />

point values <strong>and</strong> the result should be stored in the main memory. If 600 million<br />

cells are updated every second each SPE requires 7.2Gb/s memory I/O b<strong>and</strong>width<br />

<strong>and</strong> the available 25.6Gb/s b<strong>and</strong>width is not enough to support all the 8 SPEs.<br />

To reduce this high b<strong>and</strong>width requirement pipelining technique can be used<br />

as shown in Figure 2.6. In this case the SPEs are chained one after the other, <strong>and</strong><br />

each SPE computes a different iteration step, using the results of the previous<br />

SPE <strong>and</strong> the Cell processor is working similarly to a systolic array. Only the<br />

first <strong>and</strong> last SPE in the pipeline should access main memory, the other SPEs<br />

are loading the results of the previous iteration directly from the local memory<br />

of the neighboring SPE. Due to the ring structure of the Element Interconnect<br />

Bus (EIB), communication between the neighboring SPEs is very efficient. The<br />

instruction histogram of the optimized CNN simulator kernel is summarized in<br />

Figure 2.7.<br />

Though the memory b<strong>and</strong>width bottleneck is eliminated by using the SPEs in<br />

a pipeline, overall performance of the simulation kernel can not be improved. The<br />

instruction histogram in Figure 2.7 still show huge amount of channel stall cycles<br />

in the 4 <strong>and</strong> 8 SPE case. To find the source of these stall cycles detailed profiling<br />

of the simulation kernel is required. First, profiling instructions are inserted into<br />

the source code to determine the length of the computation part of the program<br />

<strong>and</strong> the overhead. The results are summarized on Figure 2.8.<br />

Profiling results show that in the 4 <strong>and</strong> 8 SPE cases some SPEs spend huge<br />

amount of cycles in the setup portion of the program. This behavior is caused by<br />

the SPE thread creation <strong>and</strong> initialization process. First, the required number<br />

of SPE threads is created, then the addresses required to set up communication<br />

between the SPEs <strong>and</strong> form the pipeline are determined. Meanwhile the started<br />

threads are blocked. In the 4 SPE case threads on SPE 0 <strong>and</strong> SPE 1 are created<br />

first, hence these threads should wait not only for the address determination<br />

phase but also until the other two threads are created. Unfortunately this thread<br />

creation overhead cannot be eliminated. For a long analogic algorithm which uses<br />

several templates, the SPEs <strong>and</strong> the PPE should be used in a client/server model.

![optika tervezés [Kompatibilitási mód] - Ez itt...](https://img.yumpu.com/45881475/1/190x146/optika-tervezacs-kompatibilitasi-mad-ez-itt.jpg?quality=85)