PPKE ITK PhD and MPhil Thesis Classes

in the L2 cache of the processor comfortably. Although the architecture implemented on the FPGA was not optimized for speed and runs at only 100 MHz, its performance is comparable to, or in some cases slightly higher than, that of a 3 GHz Intel Core 2 Duo microprocessor.

3.5 Results

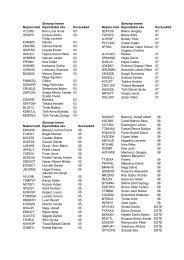

The L∞ norm of the error for different grid resolutions and for different fixed-point and floating-point precisions is compared in Figures 3.6 to 3.8. As the resolution is increased, the accuracy of the solution can be improved. A finer grid resolution requires a smaller time step, according to the CFL condition, which results in more operations. After a certain number of steps the round-off error of the computation becomes comparable to or larger than the truncation error of the numerical method. Therefore the step size cannot be decreased to zero at a given precision. The precision of the arithmetic units should be increased when the grid is refined.
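The interplay between the CFL condition and round-off can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the scheme used in this work: the function names, the CFL number of 0.5, and the "half a least significant bit per step" round-off bound are all hypothetical choices made for the example.

```python
import math


def cfl_time_step(dx, max_speed, cfl_number=0.5):
    """Largest time step allowed by dt <= C * dx / |u|_max (C is assumed)."""
    return cfl_number * dx / max_speed


def steps_to_reach(t_end, dx, max_speed, cfl_number=0.5):
    """Number of time steps needed to integrate up to t_end."""
    dt = cfl_time_step(dx, max_speed, cfl_number)
    return math.ceil(t_end / dt)


def quantize_fixed(x, frac_bits):
    """Round x to the nearest multiple of 2**-frac_bits (fixed-point grid)."""
    scale = 1 << frac_bits
    return round(x * scale) / scale


def worst_case_roundoff(n_steps, frac_bits):
    """Crude bound: at most half a least significant bit of error per step."""
    return n_steps * 2.0 ** -(frac_bits + 1)


# Halving the grid spacing doubles the number of time steps.
print(steps_to_reach(1.0, 0.25, 1.0))   # 8
print(steps_to_reach(1.0, 0.125, 1.0))  # 16

# More steps at the same word length mean a larger round-off bound.
print(worst_case_roundoff(8, 8), worst_case_roundoff(16, 8))
```

In this simplified model the round-off bound grows with the number of steps while the truncation error shrinks with the grid spacing, which is why a refined grid calls for more fractional bits.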

The arithmetic unit in the 2nd order case (see Figure 3.7) is twice as large as the 1st order arithmetic unit. In addition, the step size should be halved, so four times more computation is required. The slope of the error is higher, as shown in Figure 3.9, therefore computation on a coarser grid results in higher accuracy.
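The "slope of the error" and the factor of four in computation can be made concrete with a short Python sketch. The error values below are hypothetical and chosen only so that the arithmetic is exact; they are not measurements from this work, and the helper names are assumptions made for the example.

```python
import math


def observed_order(err_coarse, err_fine, refinement=2.0):
    """Slope of the error on a log-log plot between two grid resolutions."""
    return math.log2(err_coarse / err_fine) / math.log2(refinement)


def relative_work(unit_size_factor, step_count_factor):
    """Work grows with both the size of the unit and the number of steps."""
    return unit_size_factor * step_count_factor


# Hypothetical errors when the grid spacing is halved.
print(observed_order(0.5, 0.25))   # 1.0, first-order behaviour
print(observed_order(0.5, 0.125))  # 2.0, second-order behaviour

# A unit twice as large combined with twice as many steps.
print(relative_work(2, 2))         # 4
```

A steeper slope means the error drops faster per refinement, so a higher-order scheme can reach a target accuracy on a coarser grid despite its higher cost per step.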

The L∞ norms of the fixed-point and floating-point solutions with the same mantissa width are compared in Figure 3.9. Because we use 11 bits for the exponent in floating-point numbers, the 40-bit floating-point number is compared to the 29-bit fixed-point number. As can be seen, the floating-point computation results in a more accurate solution than the fixed-point one. However, by adding just four bits to the 29-bit fixed-point number and using a 33-bit fixed-point number, the L∞ norm of the error becomes comparable to the error of the 40-bit floating-point computations. In addition, 7 bits are saved, which results in lower memory bandwidth and a reduced area requirement. Although the fixed-point arithmetic unit requires 22 multipliers while the floating-point arithmetic unit requires only 16, the number of required slices is far lower in the fixed-point case. While the 34 bit fixed-point
