Superconducting Technology Assessment - nitrd

Superconducting Technology Assessment - nitrd

Superconducting Technology Assessment - nitrd

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

SYSTEM ARCHITECTURES<br />

System-level Hardware Architecture<br />

The primary challenges to achieving extremes in high performance computing are 1) integrating sufficient<br />

hardware to provide the necessary peak capabilities in operation performance, memory and storage capacity, and<br />

communications and I/O bandwidth, and 2) devising an architecture with support mechanisms to deliver efficient<br />

operation across a wide range of applications. For peta-scale systems, size, complexity, and power consumption<br />

become dominant constraints to delivering peak capabilities. Such systems also demand means of overcoming the<br />

sources of performance degradation and efficiency reduction including latency, overhead, contention, and starvation.<br />

Today, some of the largest parallel systems routinely experience floating point efficiency of below 10% for many<br />

applications and single digit efficiencies have been observed, even after efforts towards optimization.<br />

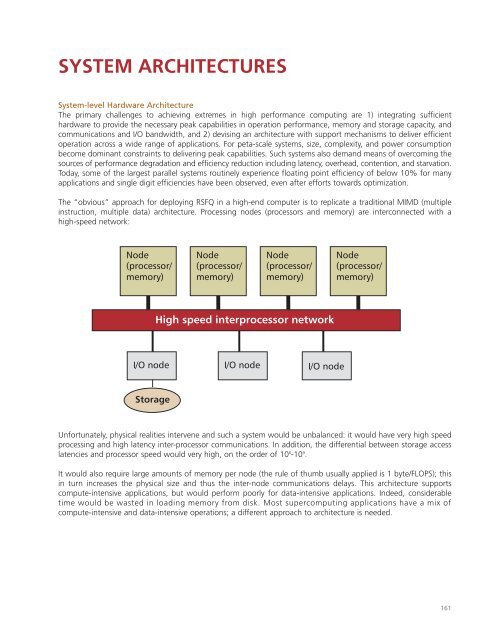

The “obvious” approach for deploying RSFQ in a high-end computer is to replicate a traditional MIMD (multiple<br />

instruction, multiple data) architecture. Processing nodes (processors and memory) are interconnected with a<br />

high-speed network:<br />

Node<br />

(processor/<br />

memory)<br />

Node<br />

(processor/<br />

memory)<br />

Node<br />

(processor/<br />

memory)<br />

I/O node I/O node I/O node<br />

Storage<br />

High speed interprocessor network<br />

Node<br />

(processor/<br />

memory)<br />

Unfortunately, physical realities intervene and such a system would be unbalanced: it would have very high speed<br />

processing and high latency inter-processor communications. In addition, the differential between storage access<br />

latencies and processor speed would very high, on the order of 10 6 -10 9 .<br />

It would also require large amounts of memory per node (the rule of thumb usually applied is 1 byte/FLOPS); this<br />

in turn increases the physical size and thus the inter-node communications delays. This architecture supports<br />

compute-intensive applications, but would perform poorly for data-intensive applications. Indeed, considerable<br />

time would be wasted in loading memory from disk. Most supercomputing applications have a mix of<br />

compute-intensive and data-intensive operations; a different approach to architecture is needed.<br />

161