Download - Academy Publisher


Notation: input node $x_j$; hidden node $y_i$; output node $\sigma_j$; network weight between the input node and the hidden node $w_{ij}$; network weight between the hidden node and the output node $T_{li}$; expected output of output node $l$, $t_l$. Figure 1 shows the BP neural network structure.

The basic learning algorithm of the BP neural network is as follows:

1) Determine the learning parameters on the basis of the known network structure, including the number of neurons in the input layer, hidden layer and output layer, the learning rate and the error parameters.

2) Initialize the network weights and thresholds.

3) Provide the learning samples: input vectors and target vectors.

4) Start learning, and do the following for each sample:

① Forward calculation for unit $j$ in layer $l$:

$$v_j^{(l)}(n) = \sum_{i=0}^{T} w_{ji}^{(l)}(n)\, y_i^{(l-1)}(n) \tag{1}$$

In Equation (1), $y_i^{(l-1)}(n)$ is the signal transmitted from unit $i$ of layer $l-1$. For $i = 0$, set $y_0^{(l-1)}(n) = -1$; then $w_{j0}^{(l)}(n) = \theta_j^{(l)}(n)$, so the threshold of unit $j$ is handled as an ordinary weight.

If the activation function of unit $j$ is the sigmoid function, then

$$y_j^{(l)}(n) = \frac{1}{1 + \exp\left(-v_j^{(l)}(n)\right)} \tag{2}$$

and

$$f'\left(v_j^{(l)}(n)\right) = \frac{\partial y_j^{(l)}(n)}{\partial v_j^{(l)}(n)} = y_j^{(l)}(n)\left[1 - y_j^{(l)}(n)\right] \tag{3}$$

If unit $j$ belongs to the first hidden layer ($l = 1$), then

$$y_j^{(0)}(n) = x_j(n) \tag{4}$$

If unit $j$ belongs to the output layer ($l = L$), then

$$y_j^{(L)}(n) = O_j(n) \tag{5}$$

$$e_j(n) = d_j(n) - O_j(n) \tag{6}$$

② Backward calculation of $\delta$:

For the output units,

$$\delta_j^{(L)}(n) = e_j(n)\, O_j(n)\left[1 - O_j(n)\right] \tag{7}$$

and for the hidden layer units,

$$\delta_j^{(l)}(n) = y_j^{(l)}(n)\left[1 - y_j^{(l)}(n)\right] \sum_k \delta_k^{(l+1)}(n)\, w_{kj}^{(l+1)}(n) \tag{8}$$

③ Update the weights according to:

$$w_{ji}^{(l)}(n+1) = w_{ji}^{(l)}(n) + \eta\, \delta_j^{(l)}(n)\, y_i^{(l-1)}(n) \tag{9}$$

5) Input a new sample and repeat until the error requirement is met. The order in which the training samples are presented should be re-randomized in each cycle.
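The three sub-steps of step 4 can be sketched for a single sample as follows. This is a minimal illustration in plain Python rather than the Matlab environment used in the paper, and it is not the authors' code. The function names `forward` and `backprop_step` and the nested-list weight layout are assumptions made for the example. The threshold convention follows the text: index 0 of each weight row holds $\theta_j$ and is paired with the fixed input $-1$.

```python
import math
import random


def sigmoid(v):
    """Logistic activation, as in Equation (2)."""
    return 1.0 / (1.0 + math.exp(-v))


def forward(weights, x):
    """Forward calculation, layer by layer (Equation (1) followed by Equation (2)).

    weights[l][j][i] is the weight from unit i of the previous layer to unit j.
    Index i = 0 is the threshold theta_j, paired with the fixed input y_0 = -1.
    Returns the list of layer outputs, starting with the input itself.
    """
    ys = [list(x)]
    for layer in weights:
        prev = [-1.0] + ys[-1]
        ys.append([sigmoid(sum(w * y for w, y in zip(row, prev))) for row in layer])
    return ys


def backprop_step(weights, x, d, eta):
    """Run sub-steps 1 to 3 once for one sample and update weights in place.

    Returns the output errors e_j = d_j - O_j measured before the update.
    """
    ys = forward(weights, x)
    out = ys[-1]
    errors = [dj - oj for dj, oj in zip(d, out)]
    # Output units: delta_j = e_j * O_j * (1 - O_j), as in Equation (7).
    all_deltas = [[e * o * (1.0 - o) for e, o in zip(errors, out)]]
    # Hidden units: propagate the deltas backwards, as in Equation (8).
    for l in range(len(weights) - 1, 0, -1):
        y_hidden = ys[l]
        upper = weights[l]
        deltas = [
            y_hidden[j] * (1.0 - y_hidden[j])
            * sum(all_deltas[0][k] * upper[k][j + 1] for k in range(len(upper)))
            for j in range(len(y_hidden))
        ]
        all_deltas.insert(0, deltas)
    # Weight update: w_ji += eta * delta_j * y_i, as in Equation (9).
    for l, layer in enumerate(weights):
        prev = [-1.0] + ys[l]
        for j, row in enumerate(layer):
            for i in range(len(row)):
                row[i] += eta * all_deltas[l][j] * prev[i]
    return errors


# Hypothetical 2-2-1 network with small random initial weights (illustration only).
rng = random.Random(0)
demo_weights = [
    [[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(2)],
    [[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(1)],
]
demo_errors = backprop_step(demo_weights, [0.0, 1.0], [1.0], eta=0.5)
```

All deltas are computed before any weight is changed, so the hidden-layer deltas use the same weights that produced the forward pass.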

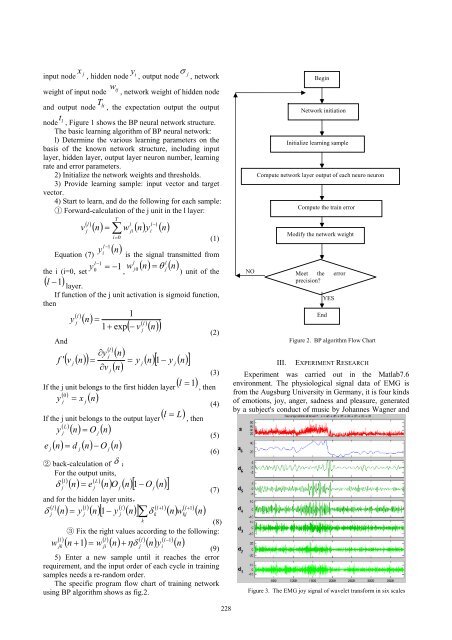

The program flow chart for training the network with the BP algorithm is shown in Figure 2.


[Flow chart: Begin → Network initialization → Initialize learning samples → Compute the output of each neuron in each network layer → Compute the training error → Modify the network weights → Meets the error precision? (NO: loop back; YES: End)]

Figure 2. BP algorithm flow chart
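The loop in Figure 2 can be sketched as a small training routine. The example below is an illustration under stated assumptions, not the program used in the paper. It is written in plain Python instead of Matlab and uses a single hidden layer. The function name `train`, the hyperparameter values, the summed squared error as the stopping measure, and the toy logical-OR data set are all hypothetical choices made for the example.

```python
import math
import random


def sigmoid(v):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-v))


def train(samples, n_hidden=3, eta=0.5, target_error=0.01, max_epochs=5000, seed=0):
    """Train a one-hidden-layer sigmoid network following the loop of Figure 2.

    Returns (epochs_used, final_error). The error is the summed squared error
    accumulated during one pass over the samples, while weights are being updated.
    """
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # Network initialization: small random weights; index 0 holds the threshold.
    w_hid = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
    order = list(samples)
    error = float("inf")
    epoch = 0
    for epoch in range(1, max_epochs + 1):
        rng.shuffle(order)  # re-randomize the sample order in each cycle
        error = 0.0
        for x, d in order:
            # Compute the output of each neuron (fixed input -1 pairs with the threshold).
            xin = [-1.0] + list(x)
            y = [sigmoid(sum(w * v for w, v in zip(row, xin))) for row in w_hid]
            yin = [-1.0] + y
            o = sigmoid(sum(w * v for w, v in zip(w_out, yin)))
            # Compute the training error.
            e = d - o
            error += 0.5 * e * e
            # Modify the network weights: compute all deltas first, then update.
            delta_o = e * o * (1.0 - o)
            delta_h = [y[j] * (1.0 - y[j]) * delta_o * w_out[j + 1] for j in range(n_hidden)]
            for i in range(n_hidden + 1):
                w_out[i] += eta * delta_o * yin[i]
            for j in range(n_hidden):
                for i in range(n_in + 1):
                    w_hid[j][i] += eta * delta_h[j] * xin[i]
        if error <= target_error:  # error precision met: stop training
            break
    return epoch, error


# Toy data (assumption): logical OR of two binary inputs.
or_samples = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
epochs_used, final_error = train(or_samples)
```

The `max_epochs` cap is an addition for safety: the flow chart itself only ends when the error precision is met, which may never happen for a poorly chosen learning rate or network size.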

III. EXPERIMENT RESEARCH

The experiment was carried out in the Matlab 7.6 environment. The EMG physiological signal data come from Augsburg University in Germany. They cover four kinds of emotion (joy, anger, sadness and pleasure) generated in one subject by means of music, by Johannes Wagner and

Figure 3. Wavelet transform of the EMG joy signal at six scales
