NAVY SCHOOL MANAGEMENT MANUAL

NAVY SCHOOL MANAGEMENT MANUAL - AIM

students near the middle of a scale. If, for example, a scale has seven points and you get a large number of "4s" from the raters, they may be making this error. One way to counter this is to use scales with an even number of points (so there is no middle point). Behavioral "anchors" also help here, as they do with the other rating errors.
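The central-tendency check described above can be approximated by counting. The Python sketch below measures how often each rater uses the exact middle point of an odd-point scale and flags raters above a cutoff. The function names and the 50% cutoff are hypothetical choices for illustration, not a standard from this manual.

```python
def midpoint_share(ratings, scale_points):
    """Return the fraction of ratings that sit on the middle point of an odd-point scale."""
    if scale_points % 2 == 0:
        raise ValueError("an even-point scale has no single middle point")
    middle = (scale_points + 1) // 2  # e.g. 4 on a 1-to-7 scale
    if not ratings:
        return 0.0
    return sum(1 for r in ratings if r == middle) / len(ratings)


def flag_central_tendency(ratings_by_rater, scale_points, threshold=0.5):
    """Return raters whose share of middle ratings exceeds the (assumed) threshold."""
    return sorted(
        rater
        for rater, ratings in ratings_by_rater.items()
        if midpoint_share(ratings, scale_points) > threshold
    )


# Made-up ratings on a 7-point scale, for illustration only.
example = {
    "R1": [4, 4, 4, 5, 4, 3, 4],
    "R2": [2, 6, 4, 7, 3, 5, 1],
}
print(flag_central_tendency(example, scale_points=7))  # ['R1']
```

A flagged rater is not necessarily wrong (the students may really be average), so the count is only a prompt to look more closely at how that rater is using the scale.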

Determining Reliability of Rating Scales and Checklists

<br />

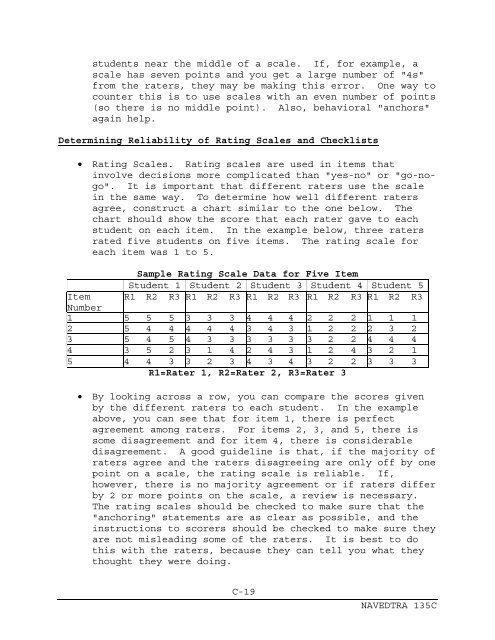

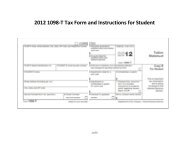

Rating Scales. Rating scales are used in items that involve decisions more complicated than "yes/no" or "go/no-go." It is important that different raters use the scale in the same way. To determine how well different raters agree, construct a chart similar to the one below. The chart should show the score that each rater gave to each student on each item. In the example below, three raters rated five students on five items. The rating scale for each item was 1 to 5.

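Sample Rating Scale Data for Five Items

| Item | S1 R1 | S1 R2 | S1 R3 | S2 R1 | S2 R2 | S2 R3 | S3 R1 | S3 R2 | S3 R3 | S4 R1 | S4 R2 | S4 R3 | S5 R1 | S5 R2 | S5 R3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 5 | 5 | 5 | 3 | 3 | 3 | 4 | 4 | 4 | 2 | 2 | 2 | 1 | 1 | 1 |
| 2 | 5 | 4 | 4 | 4 | 4 | 4 | 3 | 4 | 3 | 1 | 2 | 2 | 2 | 3 | 2 |
| 3 | 5 | 4 | 5 | 4 | 3 | 3 | 3 | 3 | 3 | 3 | 2 | 2 | 4 | 4 | 4 |
| 4 | 3 | 5 | 2 | 3 | 1 | 4 | 2 | 4 | 3 | 1 | 2 | 4 | 3 | 2 | 1 |
| 5 | 4 | 4 | 3 | 3 | 2 | 3 | 4 | 3 | 4 | 3 | 2 | 2 | 3 | 3 | 3 |

S1 through S5 = Students 1 through 5; R1 = Rater 1, R2 = Rater 2, R3 = Rater 3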

By looking across a row, you can compare the scores that the different raters gave to each student. In the example above, you can see that for item 1 there is perfect agreement among raters. For items 2, 3, and 5 there is some disagreement, and for item 4 there is considerable disagreement. A good guideline is that, if the majority of raters agree and the raters who disagree are off by only one point on the scale, the rating scale is reliable. If, however, there is no majority agreement, or if raters differ by 2 or more points on the scale, a review is necessary. The rating scales should be checked to make sure that the "anchoring" statements are as clear as possible, and the instructions to raters should be checked to make sure they are not misleading some of the raters. It is best to do this with the raters, because they can tell you what they thought they were doing.
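The majority-and-one-point guideline can be applied mechanically to the sample chart. The Python sketch below reads "majority" as more than half of the raters and flags an item for review if any one student's ratings on it fail the test; both readings, and the names SCORES, ratings_agree, and items_needing_review, are assumptions for illustration. Run on the sample data, it flags only item 4, which matches the discussion of the chart.

```python
from collections import Counter

# Scores from the sample chart: SCORES[item] lists, for each student in order,
# the ratings given by raters R1, R2, and R3.
SCORES = {
    1: [(5, 5, 5), (3, 3, 3), (4, 4, 4), (2, 2, 2), (1, 1, 1)],
    2: [(5, 4, 4), (4, 4, 4), (3, 4, 3), (1, 2, 2), (2, 3, 2)],
    3: [(5, 4, 5), (4, 3, 3), (3, 3, 3), (3, 2, 2), (4, 4, 4)],
    4: [(3, 5, 2), (3, 1, 4), (2, 4, 3), (1, 2, 4), (3, 2, 1)],
    5: [(4, 4, 3), (3, 2, 3), (4, 3, 4), (3, 2, 2), (3, 3, 3)],
}


def ratings_agree(ratings):
    """Apply the guideline to one student's ratings on one item.

    True when more than half of the raters gave the same score and every
    other rater is within one point of that majority score.
    """
    score, count = Counter(ratings).most_common(1)[0]
    if count * 2 <= len(ratings):
        return False  # no majority agreement
    return all(abs(r - score) <= 1 for r in ratings)


def items_needing_review(scores):
    """Return the items on which at least one student's ratings fail the guideline."""
    return [
        item
        for item, per_student in scores.items()
        if not all(ratings_agree(r) for r in per_student)
    ]


print(items_needing_review(SCORES))  # [4]
```

With an even number of raters, a tie (for example, two raters at 3 and two at 4) counts as "no majority" under this reading, so such an item would also be flagged for review.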


NAVEDTRA 135C