Numerical Methods Contents - SAM


Recall that, by the min-max theorem Thm. 5.3.5,

$$ u_n = \operatorname*{argmax}_{x\in\mathbb{R}^n} \rho_A(x), \qquad u_{n-1} = \operatorname*{argmax}_{x\in\mathbb{R}^n,\ x\perp u_n} \rho_A(x). \tag{5.3.20} $$

Idea: maximize the Rayleigh quotient over Span{v,w}, where v, w are output by Code 5.3.19. This leads to the optimization problem

$$ (\alpha^*,\beta^*) := \operatorname*{argmax}_{\alpha,\beta\in\mathbb{R},\ \alpha^2+\beta^2=1} \rho_A(\alpha v+\beta w) = \operatorname*{argmax}_{\alpha,\beta\in\mathbb{R},\ \alpha^2+\beta^2=1} \rho_{(v,w)^T A (v,w)}\left(\binom{\alpha}{\beta}\right). \tag{5.3.21} $$

Then a better approximation for the eigenvector belonging to the largest eigenvalue is

$$ v^* := \alpha^* v + \beta^* w. $$

Note that $\|v^*\|_2 = 1$ if v and w are orthonormal, which is guaranteed in Code 5.3.19.

Then, orthogonalizing w with respect to $v^*$ will produce a new iterate $w^*$.
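The second equality in (5.3.21) can be checked in one line. The following is a short worked step, assuming v and w are orthonormal and writing $V := (v,w) \in \mathbb{R}^{n,2}$ (notation chosen here for brevity):

```latex
\rho_A(\alpha v + \beta w)
  = \frac{\binom{\alpha}{\beta}^T V^T A V \binom{\alpha}{\beta}}
         {\binom{\alpha}{\beta}^T V^T V \binom{\alpha}{\beta}}
  = \frac{\binom{\alpha}{\beta}^T \left(V^T A V\right) \binom{\alpha}{\beta}}
         {\alpha^2 + \beta^2}
  = \rho_{V^T A V}\!\left(\binom{\alpha}{\beta}\right),
\qquad \text{since } V^T V = I_2 .
```

So the maximization over the two-dimensional subspace reduces to a Rayleigh quotient maximization for the 2×2 matrix $V^T A V$.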

More generally: Ritz projection of Ax = λx onto Im(V) (the subspace spanned by the columns of V):

$$ V^H A V w = \lambda V^H V w. \tag{5.3.23} $$

If V has orthonormal columns ($V^H V = I$), this generalized eigenvalue problem becomes a standard linear eigenvalue problem.

Note that the orthogonalization step in Code 5.3.21 would actually be redundant if exact arithmetic could be employed, because the Ritz projection could also be realized by solving the generalized eigenvalue problem (5.3.23). However, prior orthogonalization is essential for numerical stability (→ Def. 2.5.5), cf. the discussion in Sect. 2.8.
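The equivalence of the two routes can be illustrated numerically. The sketch below is only an illustration under stated assumptions: the test matrix `A`, the basis `V`, and all variable names are made up for this example, and the basis is chosen well conditioned so that both routes agree up to rounding.

```matlab
% Sketch: Ritz values from the generalized problem (5.3.23) versus
% Ritz values after prior orthonormalization. Test data are assumptions.
rng(0);                              % reproducible random numbers
n = 50;
B = randn(n); A = (B + B')/2;        % real symmetric test matrix
V = randn(n,2);                      % generic basis, not orthonormal

% (a) generalized eigenvalue problem V'*A*V*w = lambda*V'*V*w
M = V'*V;   M = (M + M')/2;          % Gram matrix, symmetrized against rounding
K = V'*A*V; K = (K + K')/2;
ritz_gen = sort(eig(K, M));

% (b) orthonormalize first, then solve a standard eigenvalue problem
[Q,~] = qr(V,0);                     % economy-size QR: Q has orthonormal columns
T = Q'*A*Q; T = (T + T')/2;
ritz_std = sort(eig(T));

disp(norm(ritz_gen - ritz_std));     % difference of the two sets of Ritz values
```

For nearly linearly dependent columns of `V` the Gram matrix `V'*V` becomes ill conditioned, which is where route (b) is expected to behave better.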

In MATLAB implementations the vectors v, w can be collected in a matrix $V \in \mathbb{R}^{n,2}$; see Code 5.3.23.

Again the min-max theorem Thm. 5.3.5 tells us that we can find $(\alpha^*,\beta^*)^T$ as an eigenvector belonging to the largest eigenvalue of

$$ (v,w)^T A (v,w) \binom{\alpha}{\beta} = \lambda \binom{\alpha}{\beta}. \tag{5.3.22} $$

Since eigenvectors of symmetric matrices belonging to different eigenvalues are mutually orthogonal, we find $w^* = \alpha_2 v + \beta_2 w$, where $(\alpha_2,\beta_2)^T$ is the eigenvector of (5.3.22) belonging to the smallest eigenvalue. This assumes orthonormal vectors v, w.
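These properties are easy to check numerically. The following is a minimal sketch with made-up test data (the matrix `A`, the starting pair, and the helper `rho` are assumptions for illustration only):

```matlab
% Sketch: maximize the Rayleigh quotient over Span{v,w} via the 2x2
% eigenvalue problem (5.3.22); test data are illustrative assumptions.
rng(1);
n = 20;
B = randn(n); A = B'*B;              % symmetric positive definite test matrix
[Q,~] = qr(randn(n,2),0);            % orthonormal pair v, w
v = Q(:,1); w = Q(:,2);
rho = @(x) (x'*A*x)/(x'*x);          % Rayleigh quotient

T = [v,w]'*A*[v,w]; T = (T + T')/2;  % projected 2x2 matrix, symmetrized
[U,D] = eig(T);
[~,idx] = sort(diag(D));             % ascending order of eigenvalues
vstar = [v,w]*U(:,idx(2));           % combination for the largest eigenvalue
wstar = [v,w]*U(:,idx(1));           % combination for the smallest eigenvalue

fprintf('rho(v) = %g, rho(w) = %g, rho(v*) = %g\n', rho(v), rho(w), rho(vstar));
fprintf('|v*| = %g, v*''w* = %g\n', norm(vstar), vstar'*wstar);
```

The new pair stays orthonormal without any extra orthogonalization, and the Rayleigh quotient of `vstar` is at least as large as that of either `v` or `w`.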

Summing up, the following MATLAB function performs these computations:

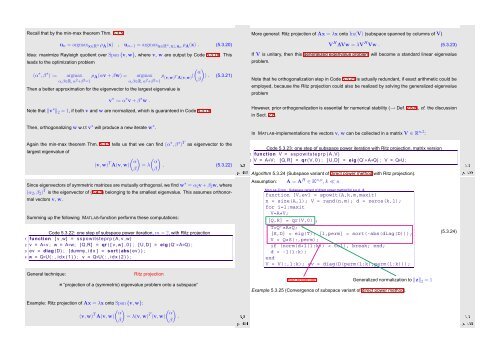

Code 5.3.22: one step of subspace power iteration, m = 2, with Ritz projection

function [v,w] = sspowitsteprp(A,v,w)
% power step, orthonormalization by QR, Ritz projection onto Span{v,w}
v = A*v; w = A*w; [Q,R] = qr([v,w],0); [U,D] = eig(Q'*A*Q);
% sort the two Ritz values by increasing modulus
ev = diag(D); [dummy,idx] = sort(abs(ev));
% w: Ritz vector for the smaller, v: for the larger Ritz value
w = Q*U(:,idx(1)); v = Q*U(:,idx(2));

Code 5.3.23: one step of subspace power iteration with Ritz projection, matrix version

function V = sspowitsteprp(A,V)
% power step, orthonormalization by QR, Ritz projection onto Im(Q)
V = A*V; [Q,R] = qr(V,0); [U,D] = eig(Q'*A*Q); V = Q*U;
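To see the step at work, it can be applied repeatedly. The sketch below inlines the body of the matrix version in a loop and compares the resulting Ritz values with a prescribed spectrum; the test matrix, its eigenvalues, and the iteration count are assumptions chosen for illustration.

```matlab
% Sketch: repeated subspace power steps with Ritz projection (m = 2)
% on a symmetric test matrix with known eigenvalues (assumed test data).
rng(2);
n = 30; m = 2;
[P,~] = qr(randn(n));                    % random orthogonal matrix
lambda = [10; 8; linspace(1,4,n-2)'];    % prescribed spectrum, largest: 10 and 8
A = P*diag(lambda)*P'; A = (A + A')/2;   % symmetric matrix with that spectrum

V = randn(n,m);                          % random initial basis
for it = 1:100
    V = A*V;                             % power step on all columns
    [Q,~] = qr(V,0);                     % orthonormalize
    T = Q'*A*Q; T = (T + T')/2;          % Ritz projection (symmetrized)
    [U,D] = eig(T);
    V = Q*U;                             % Ritz vectors as new basis
end
ritz = sort(diag(D),'descend');
disp([ritz, lambda(1:2)]);               % Ritz values next to exact eigenvalues
```

With this spectrum the gap between the second and third eigenvalue is large, so the two Ritz values settle quickly; a spectrum with clustered eigenvalues would need more iterations.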

Algorithm 5.3.24 (Subspace variant of direct power method with Ritz projection).<br />

Assumption: $A = A^H \in \mathbb{K}^{n,n}$, $k \ll n$

MATLAB-CODE : Subspace variant of direct power method for s.p.d. A

function [V,ev] = spowit(A,k,m,maxit,tol)
% k: number of wanted eigenpairs, m >= k: dimension of the iterated subspace
% tol: tolerance for the change of the k leading Ritz values
if nargin < 5, tol = 1e-8; end   % default value is an assumption
n = size(A,1); V = rand(n,m); d = zeros(k,1);
for i=1:maxit
  V = A*V;                       % power step
  [Q,R] = qr(V,0);               % orthonormalize the columns
  T = Q'*A*Q;                    % Ritz projection onto Im(Q)
  [S,D] = eig(T); [l,perm] = sort(-abs(diag(D)));
  V = Q*S(:,perm);               % Ritz vectors, largest modulus first
  if (norm(d+l(1:k)) < tol), break; end;
  d = -l(1:k);
end
V = V(:,1:k); ev = diag(D(perm(1:k),perm(1:k)));

(5.3.24)<br />


General technique: Ritz projection = "projection of a (symmetric) eigenvalue problem onto a subspace"

Example: Ritz projection of Ax = λx onto Span{v,w}:

$$ (v,w)^T A (v,w) \binom{\alpha}{\beta} = \lambda\, (v,w)^T (v,w) \binom{\alpha}{\beta}. $$
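One common way to arrive at this projected problem is a Galerkin-type argument, sketched here for a general basis matrix V with linearly independent columns (the symbols $x = Vw$ and the residual condition are the standard reading, stated as an assumption about how the projection is meant):

```latex
\text{Seek } x = V w \in \operatorname{Im}(V) \text{ and } \lambda \text{ such that }
A x - \lambda x \;\perp\; \operatorname{Im}(V)
\;\Longleftrightarrow\;
V^H \left( A V w - \lambda V w \right) = 0
\;\Longleftrightarrow\;
V^H A V\, w = \lambda\, V^H V\, w .
```

For $V = (v,w)$ and coefficient vector $(\alpha,\beta)^T$ this reproduces the example for Span{v,w}; if the columns of V are orthonormal, $V^H V = I$ and the problem is a standard eigenvalue problem.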

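Annotations to the code of Algorithm 5.3.24: the QR factorization acts as a generalized normalization to $\|z\|_2 = 1$; forming $T = Q^H A Q$ and solving its eigenvalue problem is the Ritz projection.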

Example 5.3.25 (Convergence of subspace variant of direct power method).<br />
