Numerical Methods Contents - SAM


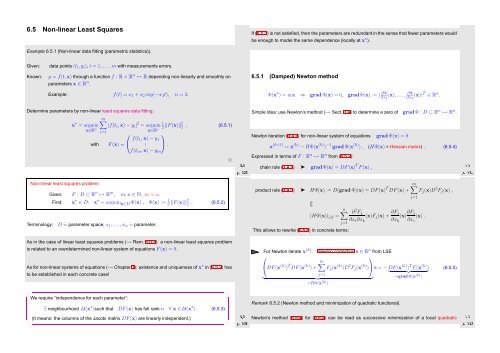

6.5 Non-linear Least Squares<br />

If (6.5.3) is not satisfied, then the parameters are redundant in the sense that fewer parameters would be enough to model the same dependence (locally at $x^*$).<br />

Example 6.5.1 (Non-linear data fitting (parametric statistics)).<br />

Given: data points $(t_i, y_i)$, $i = 1, \dots, m$, with measurement errors.<br />

Known: $y = f(t, x)$ through a function $f : \mathbb{R} \times \mathbb{R}^n \to \mathbb{R}$ depending non-linearly and smoothly on parameters $x \in \mathbb{R}^n$.<br />

Example: $f(t, x) = x_1 + x_2 \exp(-x_3 t)$, $n = 3$.<br />

6.5.1 (Damped) Newton method<br />

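$$\Phi(x^*) = \min \;\Rightarrow\; \operatorname{grad}\Phi(x) = 0 , \qquad \operatorname{grad}\Phi(x) := \left( \frac{\partial\Phi}{\partial x_1}(x), \dots, \frac{\partial\Phi}{\partial x_n}(x) \right)^T \in \mathbb{R}^n .$$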

Determine parameters by non-linear least squares data fitting:<br />

$$x^* = \operatorname*{argmin}_{x \in \mathbb{R}^n} \sum_{i=1}^{m} |f(t_i, x) - y_i|^2 = \operatorname*{argmin}_{x \in \mathbb{R}^n} \tfrac{1}{2} \|F(x)\|_2^2 , \qquad \text{(6.5.1)}$$

$$\text{with} \quad F(x) = \begin{pmatrix} f(t_1, x) - y_1 \\ \vdots \\ f(t_m, x) - y_m \end{pmatrix} .$$

✸<br />
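The data-fitting setting of Example 6.5.1 can be sketched in a few lines of plain Python. This is only an illustration: the function names `model`, `residual` and `phi`, the sample times `ts` and the parameter vector `x_true` are assumptions made for this sketch, and the data are generated without measurement errors so that the minimum value of Φ is known to be zero.<br />

```python
import math

def model(t, x):
    """Example model f(t, x) = x1 + x2 * exp(-x3 * t) with n = 3 parameters."""
    return x[0] + x[1] * math.exp(-x[2] * t)

def residual(x, ts, ys):
    """F(x): vector with components f(t_i, x) - y_i, i = 1, ..., m."""
    return [model(t, x) - y for t, y in zip(ts, ys)]

def phi(x, ts, ys):
    """Phi(x) = 1/2 * ||F(x)||_2^2, the functional to be minimized."""
    return 0.5 * sum(r * r for r in residual(x, ts, ys))

# Hypothetical, noise-free data generated from the parameters x = (1, 2, 0.5).
x_true = [1.0, 2.0, 0.5]
ts = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [model(t, x_true) for t in ts]

print(phi(x_true, ts, ys))           # misfit at the generating parameters
print(phi([1.0, 2.0, 0.6], ts, ys))  # misfit at perturbed parameters
```

With m = 5 data points and n = 3 parameters the system F(x) = 0 is overdetermined, which is the situation the least squares formulation is meant for.<br />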

Simple idea: use Newton’s method (→ Sect. 3.4) to determine a zero of $\operatorname{grad}\Phi : D \subset \mathbb{R}^n \to \mathbb{R}^n$.<br />

Newton iteration (3.4.1) for the non-linear system of equations $\operatorname{grad}\Phi(x) = 0$:<br />

$$x^{(k+1)} = x^{(k)} - H\Phi(x^{(k)})^{-1} \operatorname{grad}\Phi(x^{(k)}) , \quad (H\Phi(x) = \text{Hessian matrix}) . \qquad \text{(6.5.4)}$$

Expressed in terms of $F : \mathbb{R}^n \to \mathbb{R}^m$ from (6.5.2):<br />

chain rule (3.4.2) ➤ $\operatorname{grad}\Phi(x) = DF(x)^T F(x)$ ,<br />

Non-linear least squares problem<br />

Given: $F : D \subset \mathbb{R}^n \to \mathbb{R}^m$, $m, n \in \mathbb{N}$, $m > n$.<br />

Find: $x^* \in D$: $x^* = \operatorname*{argmin}_{x \in D} \Phi(x)$, $\Phi(x) := \tfrac{1}{2} \|F(x)\|_2^2$. (6.5.2)<br />

Terminology: $D$ ≙ parameter space, $x_1, \dots, x_n$ ≙ parameters.<br />

As in the case of linear least squares problems (→ Rem. 6.0.3): a non-linear least squares problem<br />

is related to an overdetermined non-linear system of equations F(x) = 0.<br />

As for non-linear systems of equations (→ Chapter 3): existence and uniqueness of $x^*$ in (6.5.2) have to be established in each concrete case!<br />

product rule (3.4.3) ➤ $H\Phi(x) := D(\operatorname{grad}\Phi)(x) = DF(x)^T DF(x) + \sum_{j=1}^{m} F_j(x)\, D^2 F_j(x)$ ,<br />

⇕<br />

$$(H\Phi(x))_{i,k} = \sum_{j=1}^{m} \left( \frac{\partial^2 F_j}{\partial x_i \partial x_k}(x)\, F_j(x) + \frac{\partial F_j}{\partial x_k}(x)\, \frac{\partial F_j}{\partial x_i}(x) \right) .$$

This allows us to rewrite (6.5.4) in concrete terms:<br />

For Newton iterate $x^{(k)}$: Newton correction $s \in \mathbb{R}^n$ from the linear system of equations (LSE)<br />

$$\underbrace{\left( DF(x^{(k)})^T DF(x^{(k)}) + \sum_{j=1}^{m} F_j(x^{(k)})\, D^2 F_j(x^{(k)}) \right)}_{= H\Phi(x^{(k)})} s = - \underbrace{DF(x^{(k)})^T F(x^{(k)})}_{= \operatorname{grad}\Phi(x^{(k)})} . \qquad \text{(6.5.5)}$$
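A minimal sketch of the Newton correction (6.5.5) for the example model f(t, x) = x1 + x2 exp(−x3 t), written in plain Python. Several things here are assumptions made for illustration and are not taken from the notes: all function names, the noise-free sample data, the small dense solver `solve`, and the starting guess. The iteration is undamped and is therefore started close to the minimizer, since Newton's method converges only locally.<br />

```python
import math

def residual(x, ts, ys):
    """F(x) with components F_j(x) = x1 + x2*exp(-x3*t_j) - y_j."""
    return [x[0] + x[1] * math.exp(-x[2] * t) - y for t, y in zip(ts, ys)]

def jacobian(x, ts):
    """DF(x): row j holds the partial derivatives of F_j w.r.t. x1, x2, x3."""
    rows = []
    for t in ts:
        e = math.exp(-x[2] * t)
        rows.append([1.0, e, -x[1] * t * e])
    return rows

def second_derivatives(x, t):
    """D^2 F_j(x): 3x3 matrix of second partial derivatives for one data point."""
    e = math.exp(-x[2] * t)
    return [[0.0, 0.0, 0.0],
            [0.0, 0.0, -t * e],
            [0.0, -t * e, x[1] * t * t * e]]

def gradient(x, ts, ys):
    """grad Phi(x) = DF(x)^T F(x)."""
    F, J = residual(x, ts, ys), jacobian(x, ts)
    return [sum(J[j][i] * F[j] for j in range(len(ts))) for i in range(3)]

def hessian(x, ts, ys):
    """H Phi(x) = DF(x)^T DF(x) + sum_j F_j(x) D^2 F_j(x)."""
    F, J = residual(x, ts, ys), jacobian(x, ts)
    H = [[sum(J[j][i] * J[j][k] for j in range(len(ts))) for k in range(3)]
         for i in range(3)]
    for j, t in enumerate(ts):
        D2 = second_derivatives(x, t)
        for i in range(3):
            for k in range(3):
                H[i][k] += F[j] * D2[i][k]
    return H

def solve(A, b):
    """Solve A s = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    s = [0.0] * n
    for i in reversed(range(n)):
        s[i] = (M[i][n] - sum(M[i][k] * s[k] for k in range(i + 1, n))) / M[i][i]
    return s

def newton_step(x, ts, ys):
    """One undamped Newton step: solve H Phi(x) s = -grad Phi(x), return x + s."""
    g = gradient(x, ts, ys)
    s = solve(hessian(x, ts, ys), [-gi for gi in g])
    return [xi + si for xi, si in zip(x, s)]

# Hypothetical, noise-free data generated from the parameters x = (1, 2, 0.5).
x_true = [1.0, 2.0, 0.5]
ts = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [x_true[0] + x_true[1] * math.exp(-x_true[2] * t) for t in ts]

x = [1.001, 1.999, 0.5005]  # assumed starting guess close to the minimizer
for _ in range(8):
    x = newton_step(x, ts, ys)
print(x)
```

Note that each step needs the second derivatives of every component F_j, which is the main cost of using the full Hessian in (6.5.5).<br />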

We require “independence for each parameter”:<br />

∃ neighbourhood $U(x^*)$ such that $DF(x)$ has full rank $n$ ∀ $x \in U(x^*)$ . (6.5.3)<br />

(It means: the columns of the Jacobi matrix $DF(x)$ are linearly independent.)<br />
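The rank condition (6.5.3) can be probed numerically for the example model f(t, x) = x1 + x2 exp(−x3 t). The sketch below is an illustration only: the helper names `jacobian` and `rank`, the tolerance, and the sample times are assumptions. It shows one way the condition can fail: for x2 = 0 the third column of DF(x) vanishes, so the parameter x3 is redundant there.<br />

```python
import math

def jacobian(x, ts):
    """DF(x) for F_j(x) = x1 + x2*exp(-x3*t_j) - y_j (independent of the data y_j)."""
    rows = []
    for t in ts:
        e = math.exp(-x[2] * t)
        rows.append([1.0, e, -x[1] * t * e])
    return rows

def rank(A, tol=1e-12):
    """Numerical rank via Gaussian elimination with partial pivoting.

    Pivots with absolute value <= tol are treated as zero (assumed tolerance).
    """
    M = [row[:] for row in A]
    m, n = len(M), len(M[0])
    r = 0
    for c in range(n):
        if r == m:
            break
        p = max(range(r, m), key=lambda i: abs(M[i][c]))
        if abs(M[p][c]) <= tol:
            continue  # no usable pivot in this column
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, m):
            f = M[i][c] / M[r][c]
            for k in range(c, n):
                M[i][k] -= f * M[r][k]
        r += 1
    return r

ts = [0.0, 1.0, 2.0, 3.0, 4.0]  # hypothetical sample times
print(rank(jacobian([1.0, 2.0, 0.5], ts)))  # 3: full rank, (6.5.3) holds at this point
print(rank(jacobian([1.0, 0.0, 0.5], ts)))  # 2: x3 has no influence when x2 = 0
```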


Remark 6.5.2 (Newton method and minimization of quadratic functional).<br />

Newton’s method (6.5.4) for (6.5.2) can be read as successive minimization of a local quadratic<br />
