Numerical Methods Contents - SAM


(diagonal) entries of $D$ are strictly positive and we can define

$$D = \operatorname{diag}(\lambda_1,\dots,\lambda_n),\quad \lambda_i > 0 \quad\Rightarrow\quad D^{1/2} := \operatorname{diag}(\sqrt{\lambda_1},\dots,\sqrt{\lambda_n}) .$$

This is generalized to

$$B^{1/2} := Q^T D^{1/2} Q ,$$

and one easily verifies, using $Q^T = Q^{-1}$, that $(B^{1/2})^2 = B$ and that $B^{1/2}$ is s.p.d. In fact, these two requirements already determine $B^{1/2}$ uniquely.
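The construction of $B^{1/2}$ can be checked numerically. The following minimal sketch assumes NumPy. `spd_sqrt` is a hypothetical helper name. It obtains the orthogonal factor from `numpy.linalg.eigh`, which returns eigenvalues and an orthogonal $V$ with $B = V\operatorname{diag}(\lambda)V^T$, so $Q = V^T$ in the notation above. It only illustrates the definition. PCG itself never forms $B^{1/2}$.

```python
import numpy as np


def spd_sqrt(B):
    """Return B^{1/2} = Q^T D^{1/2} Q for a symmetric positive definite matrix B."""
    # eigh yields eigenvalues lam and an orthogonal V with B = V diag(lam) V^T,
    # i.e. Q = V^T and D = diag(lam) in the notation B = Q^T D Q.
    lam, V = np.linalg.eigh(B)
    if np.any(lam <= 0):
        raise ValueError("B must be positive definite")
    Q = V.T
    return Q.T @ np.diag(np.sqrt(lam)) @ Q


B = np.array([[4.0, 1.0], [1.0, 3.0]])
S = spd_sqrt(B)
print(np.allclose(S @ S, B))                 # (B^{1/2})^2 = B
print(np.allclose(S, S.T))                   # B^{1/2} is symmetric ...
print(np.all(np.linalg.eigvalsh(S) > 0))     # ... and positive definite
```

Computing a full eigendecomposition costs $O(n^3)$ operations, which is one reason why square roots of $B$ should be avoided in practice.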

However, if one formally applies Algorithm 4.2.1 to the transformed system from (4.3.1), it becomes apparent that, after suitable transformation of the iteration variables $p_j$ and $r_j$, $B^{1/2}$ and $B^{-1/2}$ invariably occur in the products $B^{-1/2}B^{-1/2} = B^{-1}$ and $B^{1/2}B^{-1/2} = I$. Thus, thanks to this intrinsic transformation, square roots of $B$ are not required for the implementation!
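The cancellation of the square roots can be traced explicitly. The derivation below is a sketch. It assumes that (4.3.1) is the symmetrically transformed system $\widetilde{A}\widetilde{x} = \widetilde{b}$ with the definitions in the first line. It also assumes that Algorithm 4.2.1 uses the standard CG updates $\alpha = \widetilde{r}^T\widetilde{r}/\widetilde{p}^T\widetilde{A}\widetilde{p}$ and $\widetilde{p}_{\mathrm{new}} = \widetilde{r}_{\mathrm{new}} + \beta\widetilde{p}$. With the substitutions $\widetilde{r} = B^{-1/2}r$ and $\widetilde{p} = B^{1/2}p$, every quantity involves only $A$ and $B^{-1}$:

$$
\begin{aligned}
&\widetilde{A} := B^{-1/2} A B^{-1/2}, \qquad \widetilde{b} := B^{-1/2} b, \qquad \widetilde{x} := B^{1/2} x,\\
&\widetilde{r} := \widetilde{b} - \widetilde{A}\widetilde{x} = B^{-1/2}(b - Ax) = B^{-1/2} r, \qquad \widetilde{p} := B^{1/2} p,\\
&\widetilde{r}^T\widetilde{r} = r^T B^{-1/2} B^{-1/2} r = r^T B^{-1} r, \qquad
\widetilde{p}^T\widetilde{A}\widetilde{p} = p^T B^{1/2} B^{-1/2} A B^{-1/2} B^{1/2} p = p^T A p,\\
&\widetilde{x} + \alpha\widetilde{p} = B^{1/2}(x + \alpha p), \qquad
\widetilde{r} - \alpha\widetilde{A}\widetilde{p} = B^{-1/2}(r - \alpha A p),\\
&\widetilde{p}_{\mathrm{new}} = \widetilde{r}_{\mathrm{new}} + \beta\widetilde{p}
\quad\Longleftrightarrow\quad p_{\mathrm{new}} = B^{-1} r_{\mathrm{new}} + \beta p .
\end{aligned}
$$

Hence, with $q := B^{-1}r$, the CG step sizes become $\alpha = r^T q / p^T A p$ and $\beta = r_{\mathrm{new}}^T q_{\mathrm{new}} / r^T q$, and only products with $A$ and $B^{-1}$ remain.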

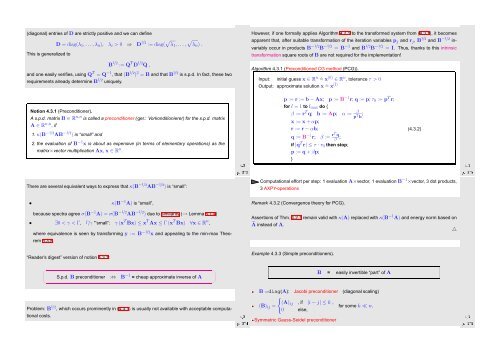

Notion 4.3.1 (Preconditioner).

An s.p.d. matrix $B \in \mathbb{R}^{n,n}$ is called a preconditioner (ger.: Vorkonditionierer) for the s.p.d. matrix $A \in \mathbb{R}^{n,n}$, if

1. $\kappa(B^{-1/2}AB^{-1/2})$ is “small” and

2. the evaluation of $B^{-1}x$ is about as expensive (in terms of elementary operations) as the matrix×vector multiplication $Ax$, $x \in \mathbb{R}^n$.

Algorithm 4.3.1 (Preconditioned CG method (PCG)).

Input: initial guess $x \in \mathbb{R}^n \;\widehat{=}\; x^{(0)} \in \mathbb{R}^n$, tolerance $\tau > 0$

Output: approximate solution $x \;\widehat{=}\; x^{(l)}$


$r := b - Ax;\ p := B^{-1}r;\ q := p;\ \tau_0 := p^T r;$
for $l = 1$ to $l_{\max}$ do {
  $\beta := r^T q;\ h := Ap;\ \alpha := \dfrac{\beta}{p^T h};$
  $x := x + \alpha p;$
  $r := r - \alpha h;$
  $q := B^{-1}r;\ \beta := \dfrac{r^T q}{\beta};$
  if $|q^T r| \le \tau \cdot \tau_0$ then stop;
  $p := q + \beta p;$
}

(4.3.2)
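Algorithm 4.3.1 translates almost line by line into Python. The sketch below assumes NumPy and dense matrices. The preconditioner is passed as a hypothetical callable `apply_Binv` that returns $B^{-1}r$, so $B$ itself never has to be formed. Variable names mirror the algorithm ($p$, $q$, $h$, $\tau_0$). The stopping rule is the one stated there, $|q^T r| \le \tau\cdot\tau_0$.

```python
import numpy as np


def pcg(A, b, apply_Binv, x0, tol=1e-10, maxit=1000):
    """Preconditioned CG following Algorithm 4.3.1.

    apply_Binv(r) must return B^{-1} r for an s.p.d. preconditioner B.
    Returns the approximate solution and the number of steps taken.
    """
    x = np.array(x0, dtype=float)
    r = b - A @ x                  # initial residual
    p = apply_Binv(r)              # initial search direction p = B^{-1} r
    q = p.copy()
    tau0 = p @ r                   # r_0^T B^{-1} r_0, reference value for the stopping test
    if tau0 == 0.0:                # x0 already solves the system
        return x, 0
    for l in range(1, maxit + 1):
        beta = r @ q               # r^T B^{-1} r for the current residual
        h = A @ p                  # the single A x vector product per step
        alpha = beta / (p @ h)
        x = x + alpha * p
        r = r - alpha * h
        q = apply_Binv(r)          # the single B^{-1} x vector evaluation per step
        rq = r @ q
        beta = rq / beta           # ratio of new to old r^T B^{-1} r
        if abs(rq) <= tol * tau0:  # stopping rule |q^T r| <= tau * tau_0
            return x, l
        p = q + beta * p
    return x, maxit


# Usage: hypothetical s.p.d. test matrix, Jacobi preconditioner B = diag(A).
n = 50
A = np.diag(np.arange(1.0, n + 1)) + 0.1 * (np.eye(n, k=1) + np.eye(n, k=-1))
b = np.ones(n)
d = np.diag(A).copy()
x, steps = pcg(A, b, lambda r: r / d, np.zeros(n))
print(steps, np.linalg.norm(b - A @ x))
```

Passing `lambda r: r` ($B = I$) recovers the unpreconditioned CG method. The test matrix in the usage lines is an arbitrary illustrative choice.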


Computational effort per step of Algorithm 4.3.1: 1 evaluation $A\times$vector, 1 evaluation $B^{-1}\times$vector, 3 dot products, 3 AXPY operations.

There are several equivalent ways to express that $\kappa(B^{-1/2}AB^{-1/2})$ is “small”:

• $\kappa(B^{-1}A)$ is “small”, because the spectra agree, $\sigma(B^{-1}A) = \sigma(B^{-1/2}AB^{-1/2})$, due to the similarity $B^{-1}A = B^{-1/2}\,(B^{-1/2}AB^{-1/2})\,B^{1/2}$ (→ Lemma 5.1.4);

• $\exists\, 0 < \gamma < \Gamma$, $\Gamma/\gamma$ “small”: $\gamma\,(x^T B x) \le x^T A x \le \Gamma\,(x^T B x)\ \ \forall x \in \mathbb{R}^n$, where equivalence is seen by transforming $y := B^{1/2}x$ and appealing to the min-max Theorem 5.3.5.
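Both characterizations can be checked numerically on a small example. The sketch below assumes NumPy. The test matrix $A = SMS$ is a hypothetical, illustrative choice: a well-conditioned tridiagonal $M$ badly scaled by $S = \operatorname{diag}(1, 10, \dots, 10^5)$. The preconditioner is $B = \operatorname{diag}(A)$. The code compares the spectra of $B^{-1}A$ and $B^{-1/2}AB^{-1/2}$. It checks that Rayleigh quotients $x^TAx/x^TBx$ of random vectors stay within $[\gamma, \Gamma]$, taken as the extreme eigenvalues. It also contrasts $\kappa(A)$ with $\Gamma/\gamma$.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 6
# Hypothetical test matrix: well-conditioned tridiagonal M, badly scaled by S.
M = 2.0 * np.eye(n) - 0.5 * (np.eye(n, k=1) + np.eye(n, k=-1))
S = np.diag(10.0 ** np.arange(n))              # scaling factors 1, 10, ..., 1e5
A = S @ M @ S                                  # s.p.d., but kappa(A) is huge
B = np.diag(np.diag(A))                        # Jacobi preconditioner B = diag(A)

B_isqrt = np.diag(1.0 / np.sqrt(np.diag(A)))   # B^{-1/2}, easy because B is diagonal
A_sym = B_isqrt @ A @ B_isqrt                  # B^{-1/2} A B^{-1/2}

# Spectra of B^{-1} A and B^{-1/2} A B^{-1/2} agree (similar matrices).
ev_sym = np.linalg.eigvalsh(A_sym)             # ascending order
ev_gen = np.sort(np.linalg.eigvals(np.linalg.solve(B, A)).real)
print("spectra agree:", np.allclose(ev_sym, ev_gen))

# gamma = lambda_min, Gamma = lambda_max bound x^T A x / x^T B x for every x != 0.
gamma, Gamma = ev_sym[0], ev_sym[-1]
X = rng.standard_normal((n, 1000))             # 1000 random test vectors (columns)
rayleigh = np.einsum("ij,ij->j", X, A @ X) / np.einsum("ij,ij->j", X, B @ X)
inside = (rayleigh >= gamma * (1 - 1e-12)) & (rayleigh <= Gamma * (1 + 1e-12))
print("Rayleigh quotients in [gamma, Gamma]:", bool(np.all(inside)))

print(f"kappa(A) = {np.linalg.cond(A):.2e},  Gamma/gamma = {Gamma / gamma:.2f}")
```

For this particular scaling, $B^{-1/2}AB^{-1/2} = M/2$ exactly, so diagonal scaling removes the bad conditioning completely. For general matrices the improvement is usually less dramatic.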

“Reader’s digest” version of Notion 4.3.1:

S.p.d. $B$ preconditioner $:\Leftrightarrow$ $B^{-1}$ = cheap approximate inverse of $A$

Remark 4.3.2 (Convergence theory for PCG).

The assertions of Thm. 4.2.7 remain valid with $\kappa(A)$ replaced with $\kappa(B^{-1}A)$ and with the energy norm based on $\widetilde{A}$ instead of $A$.

△

Example 4.3.3 (Simple preconditioners).

$B$ = easily invertible “part” of $A$

Problem: $B^{1/2}$, which occurs prominently in (4.3.1), is usually not available at acceptable computational cost.


• $B = \operatorname{diag}(A)$: Jacobi preconditioner (diagonal scaling)

• $(B)_{ij} = \begin{cases} (A)_{ij}, & \text{if } |i-j| \le k, \\ 0 & \text{else,} \end{cases}$ for some $k \ll n$.

• Symmetric Gauss-Seidel preconditioner
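The three simple preconditioners can be sketched as callables returning $B^{-1}r$. Several assumptions apply.

- The formula for the symmetric Gauss-Seidel preconditioner is not spelled out above. The code therefore uses the common form $B = (D+L)D^{-1}(D+L)^T$ with $A = L + D + L^T$ ($L$ strictly lower triangular).
- All solves are dense `numpy.linalg.solve` calls for brevity. An implementation meeting requirement 2 of Notion 4.3.1 would use banded or triangular solvers instead.
- The banded part of an s.p.d. matrix is not s.p.d. in general. It is s.p.d. here because the hypothetical test matrix is symmetric and strictly diagonally dominant with positive diagonal.

A compact copy of the PCG recursion is included so that the block runs on its own.

```python
import numpy as np


def pcg_steps(A, b, apply_Binv, tol=1e-10, maxit=1000):
    """Compact PCG with x0 = 0 (same recursion as Algorithm 4.3.1); returns (x, steps)."""
    x = np.zeros_like(b)
    r = b.copy()
    q = apply_Binv(r)
    p = q.copy()
    rq = r @ q                          # r^T B^{-1} r
    tau0 = rq
    for l in range(1, maxit + 1):
        h = A @ p
        alpha = rq / (p @ h)
        x += alpha * p
        r -= alpha * h
        q = apply_Binv(r)
        rq_new = r @ q
        if abs(rq_new) <= tol * tau0:   # same stopping rule as Algorithm 4.3.1
            return x, l
        p = q + (rq_new / rq) * p
        rq = rq_new
    return x, maxit


def jacobi(A):
    """B = diag(A): B^{-1} r is a componentwise division."""
    d = np.diag(A).copy()
    return lambda r: r / d


def band(A, k):
    """B = entries of A with |i - j| <= k, all others set to zero."""
    i, j = np.indices(A.shape)
    Bk = np.where(np.abs(i - j) <= k, A, 0.0)
    return lambda r: np.linalg.solve(Bk, r)        # dense solve for brevity


def sym_gauss_seidel(A):
    """Assumed form B = (D+L) D^{-1} (D+L)^T, so B^{-1} r = (D+L)^{-T} D (D+L)^{-1} r."""
    DL = np.tril(A)                                 # D + L (lower triangle incl. diagonal)
    d = np.diag(A).copy()
    return lambda r: np.linalg.solve(DL.T, d * np.linalg.solve(DL, r))


# Hypothetical test matrix: symmetric, strictly diagonally dominant, positive diagonal.
n = 200
A = (np.diag(3.0 + np.arange(n))
     - (np.eye(n, k=1) + np.eye(n, k=-1))
     - 0.5 * (np.eye(n, k=2) + np.eye(n, k=-2)))
b = np.ones(n)

results = {}
for name, apply_Binv in [("none (B = I)", lambda r: r),
                         ("Jacobi", jacobi(A)),
                         ("band, k = 1", band(A, 1)),
                         ("symmetric Gauss-Seidel", sym_gauss_seidel(A))]:
    x, steps = pcg_steps(A, b, apply_Binv)
    results[name] = (x, steps)
    print(f"{name:24s} steps = {steps:3d}   residual = {np.linalg.norm(b - A @ x):.1e}")
```

The printed step counts depend on the test matrix and should not be read as a general ranking of these preconditioners.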
