Numerical Methods Contents - SAM


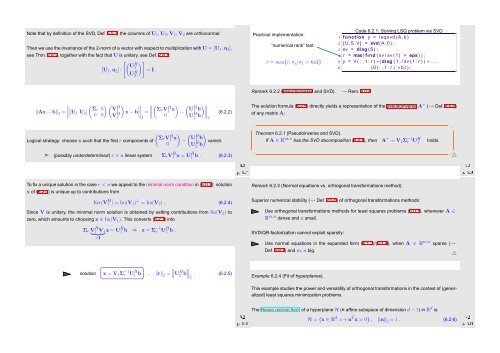

Note that by definition of the SVD, Def. 5.5.2, the columns of $U_1$, $U_2$, $V_1$, $V_2$ are orthonormal.

Then we use the invariance of the 2-norm of a vector with respect to multiplication with $U = [U_1, U_2]$, see Thm. 2.8.2, together with the fact that $U$ is unitary, see Def. 2.8.1:

$$[U_1, U_2] \cdot \begin{pmatrix} U_1^H \\ U_2^H \end{pmatrix} = I .$$

$$\|Ax - b\|_2 = \left\| [U_1 \; U_2] \begin{pmatrix} \Sigma_r & 0 \\ 0 & 0 \end{pmatrix} \begin{pmatrix} V_1^H \\ V_2^H \end{pmatrix} x - b \right\|_2 = \left\| \begin{pmatrix} \Sigma_r V_1^H x \\ 0 \end{pmatrix} - \begin{pmatrix} U_1^H b \\ U_2^H b \end{pmatrix} \right\|_2 \tag{6.2.2}$$

Logical strategy: choose $x$ such that the first $r$ components of

$$\begin{pmatrix} \Sigma_r V_1^H x \\ 0 \end{pmatrix} - \begin{pmatrix} U_1^H b \\ U_2^H b \end{pmatrix}$$

vanish:

➣ (possibly underdetermined) $r \times n$ linear system

$$\Sigma_r V_1^H x = U_1^H b . \tag{6.2.3}$$

To fix a unique solution in the case $r < n$ we appeal to the minimal norm condition in (6.0.3): the solution $x$ of (6.2.3) is unique up to contributions from

$$\operatorname{Ker}(V_1^H) = \operatorname{Im}(V_1)^{\perp} = \operatorname{Im}(V_2) . \tag{6.2.4}$$

Since $V$ is unitary, the minimal norm solution is obtained by setting contributions from $\operatorname{Im}(V_2)$ to zero, which amounts to choosing $x \in \operatorname{Im}(V_1)$. This converts (6.2.3) into

$$\Sigma_r \underbrace{V_1^H V_1}_{=I} z = U_1^H b \quad\Rightarrow\quad z = \Sigma_r^{-1} U_1^H b .$$

solution

$$x = V_1 \Sigma_r^{-1} U_1^H b , \qquad \|r\|_2 = \left\| U_2^H b \right\|_2 . \tag{6.2.5}$$

Practical implementation:

“numerical rank” test:

$$r = \max\{ i : \sigma_i / \sigma_1 > \mathrm{tol} \}$$

Code 6.2.1: Solving LSQ problem via SVD

    function y = lsqsvd(A, b)
    [U, S, V] = svd(A, 0);
    sv = diag(S);
    r = max(find(sv / sv(1) > eps));
    y = V(:, 1:r) * (diag(1 ./ sv(1:r)) * ...
        (U(:, 1:r)' * b));

Remark 6.2.2 (Pseudoinverse and SVD). → Rem. 6.0.4

The solution formula (6.2.5) directly yields a representation of the pseudoinverse $A^+$ (→ Def. 6.0.2) of any matrix $A$:

Theorem 6.2.1 (Pseudoinverse and SVD).

If $A \in \mathbb{K}^{m,n}$ has the SVD decomposition (6.2.1), then

$$A^+ = V_1 \Sigma_r^{-1} U_1^H$$

holds.

△

Remark 6.2.3 (Normal equations vs. orthogonal transformations method).

Superior numerical stability (→ Def. 2.5.5) of orthogonal transformations methods:

Use orthogonal transformations methods for least squares problems (6.0.3), whenever $A \in \mathbb{R}^{m,n}$ dense and $n$ small.

SVD/QR-factorization cannot exploit sparsity:

Use normal equations in the expanded form (6.1.3)/(6.1.4), when $A \in \mathbb{R}^{m,n}$ sparse (→ Def. 2.6.1) and $m$, $n$ big.

△
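As a hand-checkable illustration of the solution formula (6.2.5) and of the pseudoinverse representation in Theorem 6.2.1, the following sketch works through a small rank-deficient problem. The matrix $A$ and the right-hand side $b$ are chosen here purely for illustration and do not come from the text; the singular vectors shown are one valid choice (they are determined only up to sign).

```latex
% Illustrative data (an assumption, not taken from the lecture notes): rank r = 1
A = \begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix}, \quad
b = \begin{pmatrix} 1 \\ 3 \end{pmatrix}, \quad
\sigma_1 = 2, \quad
U_1 = V_1 = \tfrac{1}{\sqrt{2}} \begin{pmatrix} 1 \\ 1 \end{pmatrix}, \quad
U_2 = V_2 = \tfrac{1}{\sqrt{2}} \begin{pmatrix} 1 \\ -1 \end{pmatrix}

% Minimal norm least squares solution according to (6.2.5)
U_1^H b = \tfrac{1}{\sqrt{2}} (1 + 3) = 2\sqrt{2}, \qquad
x = V_1 \Sigma_r^{-1} U_1^H b
  = \tfrac{1}{\sqrt{2}} \begin{pmatrix} 1 \\ 1 \end{pmatrix} \cdot \tfrac{1}{2} \cdot 2\sqrt{2}
  = \begin{pmatrix} 1 \\ 1 \end{pmatrix}

% Residual norm, computed directly and via U_2
Ax - b = \begin{pmatrix} 2 \\ 2 \end{pmatrix} - \begin{pmatrix} 1 \\ 3 \end{pmatrix}
       = \begin{pmatrix} 1 \\ -1 \end{pmatrix}, \qquad
\|Ax - b\|_2 = \sqrt{2} = \left| \tfrac{1}{\sqrt{2}} (1 - 3) \right| = \|U_2^H b\|_2

% Pseudoinverse according to Theorem 6.2.1
A^+ = V_1 \Sigma_r^{-1} U_1^H
    = \tfrac{1}{4} \begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix}, \qquad
A^+ b = \begin{pmatrix} 1 \\ 1 \end{pmatrix} = x
```

In this example every least squares solution satisfies $x_1 + x_2 = 2$, and $(1, 1)^T$ is the one of smallest Euclidean norm among them, which is consistent with choosing $x \in \operatorname{Im}(V_1)$.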

Example 6.2.4 (Fit of hyperplanes).

This example studies the power and versatility of orthogonal transformations in the context of (generalized) least squares minimization problems.

The Hesse normal form of a hyperplane $H$ (= affine subspace of dimension $d - 1$) in $\mathbb{R}^d$ is:

$$H = \{ x \in \mathbb{R}^d : c + n^T x = 0 \} , \qquad \|n\|_2 = 1 . \tag{6.2.6}$$