Numerical Methods Contents - SAM


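$$
\hat{b} \in \operatorname{Im}(\hat{A}) \;\Rightarrow\; \operatorname{rank}(\hat{C}) = n ,
\qquad
\text{(6.3.1)} \;\Rightarrow\; \min_{\operatorname{rank}(\hat{C}) = n} \bigl\| C - \hat{C} \bigr\|_F .
$$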

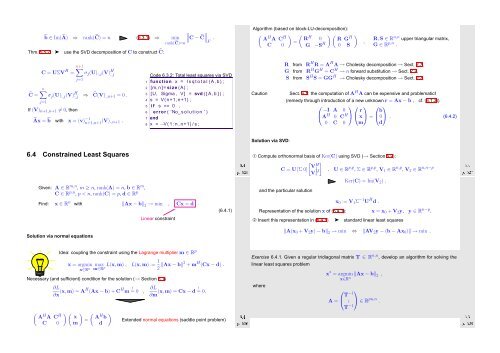

Algorithm (based on block-LU decomposition):

$$
\begin{pmatrix} A^H A & C^H \\ C & 0 \end{pmatrix}
=
\begin{pmatrix} R^H & 0 \\ G & -S^H \end{pmatrix}
\begin{pmatrix} R & G^H \\ 0 & S \end{pmatrix} ,
$$

with upper triangular matrices $R \in \mathbb{R}^{n,n}$, $S \in \mathbb{R}^{p,p}$, and $G \in \mathbb{R}^{p,n}$.

Thm. 5.5.7 ➤ use the SVD of $C$ to construct $\hat{C}$:

$$
C = U \Sigma V^H = \sum_{j=1}^{n+1} \sigma_j \,(U)_{:,j} (V)_{:,j}^H ,
\qquad
\hat{C} = \sum_{j=1}^{n} \sigma_j \,(U)_{:,j} (V)_{:,j}^H
\;\Rightarrow\; \hat{C}\,(V)_{:,n+1} = 0 .
$$

If $(V)_{n+1,n+1} \neq 0$, then $\hat{A} x = \hat{b}$ with

$$
x = -(V)_{n+1,n+1}^{-1} \,(V)_{1:n,n+1} .
$$
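The formula for $x$ can be checked by splitting the last right singular vector into its first $n$ entries and its last entry. The short derivation below assumes the partitioning $C = [A, b]$, $\hat{C} = [\hat{A}, \hat{b}]$, which is the one used in the call `svd([A,b])` of Code 6.3.2:

```latex
\[
0 = \hat{C}\,(V)_{:,n+1}
  = \hat{A}\,(V)_{1:n,n+1} + \hat{b}\,(V)_{n+1,n+1}
\quad\Longrightarrow\quad
\hat{A}\Bigl( -\frac{(V)_{1:n,n+1}}{(V)_{n+1,n+1}} \Bigr) = \hat{b} .
\]
```

The division is only possible if $(V)_{n+1,n+1} \neq 0$, which is exactly the condition stated above.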

Code 6.3.2: Total least squares via SVD

    function x = lsqtotal(A,b)
    % Total least squares solution of A*x ~ b via the SVD of [A,b]
    [m,n] = size(A);
    [U,Sigma,V] = svd([A,b]);   % V is (n+1)-by-(n+1)
    s = V(n+1,n+1);
    if s == 0
      error('No solution');     % last right singular vector has no b-component
    end
    x = -V(1:n,n+1)/s;
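As a small sanity check of the SVD approach, the following sketch applies the same steps to a consistent system, where the total least squares solution should coincide with the ordinary least squares solution. The test data `A`, `x_true` and the variable names `x_tls`, `x_ls` are made up for illustration only.

```matlab
% Overdetermined system with an exactly consistent right-hand side
A = [1 0; 1 1; 1 2; 1 3];
x_true = [2; -1];
b = A*x_true;
n = size(A,2);

% Total least squares: use the last right singular vector of [A,b]
[~,~,V] = svd([A,b]);
s = V(n+1,n+1);                 % must be nonzero for a solution to exist
x_tls = -V(1:n,n+1)/s;

% Ordinary least squares for comparison
x_ls = A\b;

% Both errors should be of the order of machine precision
disp(norm(x_tls - x_true));
disp(norm(x_ls - x_true));
```

For perturbed data the two solutions differ in general, because total least squares also allows corrections of the matrix `A`.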

Factors of the block-LU decomposition:

$R$ from $R^H R = A^H A$ → Cholesky decomposition → Sect. 2.7,

$G$ from $R^H G^H = C^H$ → $p$ forward substitutions → Sect. 2.2,

$S$ from $S^H S = G G^H$ → Cholesky decomposition → Sect. 2.7.

Caution (Sect. 6.1): the computation of $A^H A$ can be expensive and problematic! Remedy: introduce a new unknown $r = Ax - b$, cf. (6.1.3):

$$
\begin{pmatrix} -I & A & 0 \\ A^H & 0 & C^H \\ 0 & C & 0 \end{pmatrix}
\begin{pmatrix} r \\ x \\ m \end{pmatrix}
=
\begin{pmatrix} b \\ 0 \\ d \end{pmatrix} .
\tag{6.4.2}
$$
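The block-LU algorithm and the augmented system (6.4.2) can be compared on a small example. The sketch below is only an illustration under stated assumptions: the data `A`, `b`, `C`, `d` and the helper names `z1`, `z2`, `mu`, `x_lu`, `x_aug` are invented, and MATLAB's `chol` is used for both Cholesky factorizations (it returns an upper triangular factor `R` with `R'*R` equal to its argument).

```matlab
% Example data: rank(A) = n = 3, rank(C) = p = 2 < n
A = [1 0 0; 0 1 0; 0 0 1; 1 1 1; 1 -1 2];
b = [1; 2; 3; 4; 5];
C = [1 1 1; 1 -1 0];
d = [1; 0];
[m,n] = size(A);
p = size(C,1);

% Block-LU factors of [A'*A, C'; C, 0]
R = chol(A'*A);          % R'*R = A'*A
G = (R'\C')';            % R'*G' = C', p forward substitutions
S = chol(G*G');          % S'*S = G*G'

% Forward elimination with [R' 0; G -S']
z1 = R'\(A'*b);
z2 = S'\(G*z1 - d);
% Back substitution with [R G'; 0 S]
mu = S\z2;               % Lagrange multiplier
x_lu = R\(z1 - G'*mu);

% Augmented system (6.4.2), avoids forming A'*A
K = [-eye(m), A, zeros(m,p); A', zeros(n), C'; zeros(p,m), C, zeros(p)];
sol = K\[b; zeros(n,1); d];
x_aug = sol(m+1:m+n);

disp(norm(x_lu - x_aug));   % both approaches should agree
disp(norm(C*x_lu - d));     % constraint residual
```

The second variant works with a larger but better conditioned matrix, which is the point of the remedy mentioned in the caution.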

6.4 Constrained Least Squares

Given: $A \in \mathbb{R}^{m,n}$, $m \ge n$, $\operatorname{rank}(A) = n$, $b \in \mathbb{R}^{m}$,

$C \in \mathbb{R}^{p,n}$, $p < n$, $\operatorname{rank}(C) = p$, $d \in \mathbb{R}^{p}$.

Find: $x \in \mathbb{R}^{n}$ with $\|Ax - b\|_2 \to \min$ subject to the linear constraint $Cx = d$. (6.4.1)

Solution via SVD:

➀ Compute an orthonormal basis of $\operatorname{Ker}(C)$ using the SVD (→ Section 6.2):

$$
C = U \,[\Sigma \;\; 0] \begin{pmatrix} V_1^H \\ V_2^H \end{pmatrix} ,
\quad U \in \mathbb{R}^{p,p} ,\; \Sigma \in \mathbb{R}^{p,p} ,\; V_1 \in \mathbb{R}^{n,p} ,\; V_2 \in \mathbb{R}^{n,n-p}
\;\Rightarrow\; \operatorname{Ker}(C) = \operatorname{Im}(V_2) ,
$$

and the particular solution of $Cx = d$

$$
x_0 := V_1 \Sigma^{-1} U^H d .
$$

Representation of the solution $x$ of (6.4.1): $x = x_0 + V_2 y$, $y \in \mathbb{R}^{n-p}$.
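A minimal sketch of the complete SVD-based approach: the null space basis and particular solution from step ➀, followed by the unconstrained least squares problem for $y$ that results from inserting $x = x_0 + V_2 y$ into (6.4.1). The example data and the variable names `Sigma`, `V1`, `V2`, `x0`, `y` are chosen for illustration only.

```matlab
% Example data: rank(A) = n = 3, rank(C) = p = 2 < n
A = [1 0 0; 0 1 0; 0 0 1; 1 1 1; 1 -1 2];
b = [1; 2; 3; 4; 5];
C = [1 1 1; 1 -1 0];
d = [1; 0];
[p,n] = size(C);

% Step 1: full SVD of C, split V into range and null space parts
[U,S,V] = svd(C);          % S is p-by-n, V is n-by-n
Sigma = S(1:p,1:p);
V1 = V(:,1:p);
V2 = V(:,p+1:n);           % orthonormal basis of Ker(C)
x0 = V1*(Sigma\(U'*d));    % particular solution of C*x = d

% Step 2: unconstrained least squares problem for y
y = (A*V2)\(b - A*x0);
x = x0 + V2*y;

disp(norm(C*x - d));       % constraint residual, should be tiny
```

Since `V2` has orthonormal columns, the reduced matrix `A*V2` has full column rank whenever `A` does, so the backslash solve is a standard full-rank least squares problem.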

➁ Insert this representation into (6.4.1) ➤ standard linear least squares problem:

$$
\|A(x_0 + V_2 y) - b\|_2 \to \min
\quad\Leftrightarrow\quad
\|A V_2 y - (b - A x_0)\|_2 \to \min .
$$

Solution via normal equations

Idea: couple the constraint using the Lagrange multiplier $m \in \mathbb{R}^{p}$:

$$
x = \operatorname*{argmin}_{x \in \mathbb{R}^{n}} \; \max_{m \in \mathbb{R}^{p}} L(x,m) ,
\qquad
L(x,m) := \tfrac{1}{2} \|Ax - b\|_2^2 + m^H (Cx - d) .
$$

Necessary (and sufficient) condition for the solution (→ Section 6.1):

$$
\frac{\partial L}{\partial x}(x,m) = A^H (Ax - b) + C^H m \overset{!}{=} 0 ,
\qquad
\frac{\partial L}{\partial m}(x,m) = Cx - d \overset{!}{=} 0 .
$$

Extended normal equations (saddle point problem):

$$
\begin{pmatrix} A^H A & C^H \\ C & 0 \end{pmatrix}
\begin{pmatrix} x \\ m \end{pmatrix}
=
\begin{pmatrix} A^H b \\ d \end{pmatrix} .
$$
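For small dense problems the extended normal equations can simply be assembled and solved directly. The following sketch does this and then checks the two stationarity conditions of the Lagrangian. The example data and the names `K`, `mult`, `g` are assumptions made for illustration, and forming `A'*A` explicitly is subject to the caution from Sect. 6.1.

```matlab
% Example data: rank(A) = n = 3, rank(C) = p = 2 < n
A = [1 0 0; 0 1 0; 0 0 1; 1 1 1; 1 -1 2];
b = [1; 2; 3; 4; 5];
C = [1 1 1; 1 -1 0];
d = [1; 0];
n = size(A,2);
p = size(C,1);

% Assemble and solve the saddle point system
K = [A'*A, C'; C, zeros(p)];
sol = K\[A'*b; d];
x = sol(1:n);
mult = sol(n+1:end);            % Lagrange multiplier

% Stationarity conditions of L(x,m)
g = A'*(A*x - b) + C'*mult;     % derivative with respect to x
disp(norm(g));
disp(norm(C*x - d));            % derivative with respect to m
```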

Exercise 6.4.1. Given a regular tridiagonal matrix $T \in \mathbb{R}^{n,n}$, develop an algorithm for solving the linear least squares problem

$$
x^{*} = \operatorname*{argmin}_{x \in \mathbb{R}^{n}} \|Ax - b\|_2 ,
$$

where

$$
A = \begin{pmatrix} T^{-1} \\ \vdots \\ T^{-1} \end{pmatrix} \in \mathbb{R}^{pn,n} .
$$