Numerical Methods Contents - SAM


4.1 Descent Methods

Focus: Linear system of equations $Ax = b$, $A \in \mathbb{R}^{n,n}$, $b \in \mathbb{R}^n$, $n \in \mathbb{N}$ given, with symmetric positive definite (s.p.d., → Def. 2.7.1) system matrix $A$.

➨ A-inner product $(x, y) \mapsto x^T A y$ ⇒ “A-geometry”

Definition 4.1.1 (Energy norm).
A s.p.d. matrix $A \in \mathbb{R}^{n,n}$ induces an energy norm
$$\|x\|_A := (x^T A x)^{1/2}, \qquad x \in \mathbb{R}^n.$$
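The A-inner product and the energy norm translate directly into code. The following is a minimal NumPy sketch; the helper names `a_inner` and `energy_norm` are hypothetical and not taken from these notes, and the test matrix is the s.p.d. matrix of Example 4.1.2. It shows that the unit vectors $e_1, e_2$, which are orthogonal in the Euclidean sense, are not A-orthogonal, and that their energy norm differs from their Euclidean length.

```python
import numpy as np

def a_inner(x, y, A):
    """A-inner product (x, y) -> x^T A y; A is assumed s.p.d."""
    return x @ A @ y

def energy_norm(x, A):
    """Energy norm ||x||_A = (x^T A x)^(1/2) from Definition 4.1.1."""
    return np.sqrt(a_inner(x, x, A))

# s.p.d. matrix of Example 4.1.2
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
assert np.allclose(A, A.T)                  # symmetric
assert np.all(np.linalg.eigvalsh(A) > 0)    # positive definite (eigenvalues 1 and 3)

e1 = np.array([1.0, 0.0])
e2 = np.array([0.0, 1.0])
print(e1 @ e2)               # Euclidean inner product: 0.0
print(a_inner(e1, e2, A))    # A-inner product: 1.0, so e1, e2 are not A-orthogonal
print(energy_norm(e1, A))    # sqrt(2), whereas the Euclidean norm of e1 is 1
```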

[Fig. 41: plot of $J(x_1, x_2)$ from (4.1.1) for the data of Example 4.1.2, over $-2 \le x_1, x_2 \le 2$.]
[Fig. 42: level lines of the same functional in the $(x_1, x_2)$-plane, over $-2 \le x_1, x_2 \le 2$.]

Remark 4.1.1 (Krylov methods for complex s.p.d. system matrices).<br />

✗<br />

✖<br />

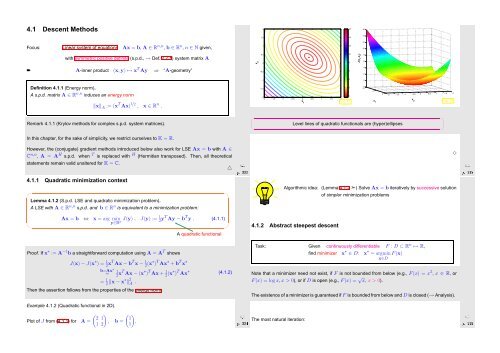

Level lines of quadratic functionals are (hyper)ellipses<br />

✔<br />

✕<br />

In this chapter, for the sake of simplicity, we restrict ourselves to K = R.<br />

However, the (conjugate) gradient methods introduced below also work for LSE Ax = b with A ∈<br />

C n,n , A = A H s.p.d. when T is replaced with H (Hermitian transposed). Then, all theoretical<br />

statements remain valid unaltered for K = C.<br />
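Why ${}^T$ must become ${}^H$ in the complex case can be seen numerically. In this minimal NumPy sketch the Hermitian positive definite test matrix and the helper name `energy_norm_c` are hypothetical choices for illustration. `np.vdot` conjugates its first argument, so it computes $x^H (Ax)$; the unconjugated form $x^T A x$ can vanish for $x \neq 0$ and therefore cannot define a norm.

```python
import numpy as np

def energy_norm_c(x, A):
    """Energy norm (x^H A x)^(1/2) for a Hermitian positive definite A."""
    q = np.vdot(x, A @ x)                    # vdot conjugates x: q = x^H (A x)
    # For Hermitian p.d. A, q is real and positive up to round-off
    assert abs(q.imag) <= 1e-12 * abs(q)
    return np.sqrt(q.real)

# Hypothetical Hermitian positive definite test matrix (eigenvalues 1 and 3)
A = np.array([[2.0, 1j],
              [-1j, 2.0]])
assert np.allclose(A, A.conj().T)            # A = A^H
assert np.all(np.linalg.eigvalsh(A) > 0)     # positive definite

x = np.array([1.0, 1j])
print(energy_norm_c(x, A))   # x^H A x = 2, so this prints sqrt(2)
print(x @ A @ x)             # x^T A x = 0 although x != 0: transpose alone fails
```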

4.1.1 Quadratic minimization context

Lemma 4.1.2 (S.p.d. LSE and quadratic minimization problem).
An LSE with $A \in \mathbb{R}^{n,n}$ s.p.d. and $b \in \mathbb{R}^n$ is equivalent to a minimization problem:

$$Ax = b \quad\Longleftrightarrow\quad x = \operatorname*{argmin}_{y \in \mathbb{R}^n} J(y), \qquad J(y) := \tfrac{1}{2}\, y^T A y - b^T y. \tag{4.1.1}$$

Proof. If $x^* := A^{-1} b$, a straightforward computation using $A = A^T$ shows

$$\begin{aligned}
J(x) - J(x^*) &= \tfrac{1}{2}\, x^T A x - b^T x - \tfrac{1}{2}\, (x^*)^T A x^* + b^T x^* \\
&\overset{b = Ax^*}{=} \tfrac{1}{2}\, x^T A x - (x^*)^T A x + \tfrac{1}{2}\, (x^*)^T A x^* \\
&= \tfrac{1}{2}\, \|x - x^*\|_A^2 .
\end{aligned} \tag{4.1.2}$$

Then the assertion follows from the properties of the energy norm: $\|x - x^*\|_A > 0$ for $x \neq x^*$, hence $J(x) > J(x^*)$, and $x^* = A^{-1} b$ is the unique minimizer of $J$.

Example 4.1.2 (Quadratic functional in 2D).
Plot of the quadratic functional $J$ from (4.1.1) (Figs. 41, 42) for
$$A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}, \qquad b = \begin{pmatrix} 1 \\ 1 \end{pmatrix}.$$

Algorithmic idea: (Lemma 4.1.2 ➣) Solve $Ax = b$ iteratively by successive solution of simpler minimization problems.

4.1.2 Abstract steepest descent

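Task: Given continuously differentiable $F : D \subset \mathbb{R}^n \to \mathbb{R}$, find a minimizer $x^* \in D$:
$$x^* = \operatorname*{argmin}_{x \in D} F(x).$$

Note that a minimizer need not exist if $F$ is not bounded from below (e.g., $F(x) = x^3$, $x \in \mathbb{R}$, or $F(x) = \log x$, $x > 0$), or if $D$ is open (e.g., $F(x) = \sqrt{x}$, $x > 0$).

The existence of a minimizer is guaranteed, for instance, if $D$ is closed and bounded (a continuous $F$ attains its minimum on a compact set), or if $D$ is closed and $F$ is coercive, i.e., $F(x) \to \infty$ as $\|x\| \to \infty$ (→ Analysis). Boundedness from below on a closed $D$ alone does not suffice: $F(x) = e^x$, $x \in \mathbb{R}$, has infimum $0$ but no minimizer.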
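For the quadratic functional (4.1.1) with s.p.d. $A$ the existence question is settled by Lemma 4.1.2: by (4.1.2), $J(x) - J(x^*) = \tfrac{1}{2}\|x - x^*\|_A^2 \ge 0$, so $x^* = A^{-1} b$ is always the minimizer. The following minimal NumPy sketch checks identity (4.1.2) at random points for the data of Example 4.1.2; the sampling box, sample size, and random seed are arbitrary choices, not taken from the notes.

```python
import numpy as np

# Data of Example 4.1.2
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
b = np.array([1.0, 1.0])

def J(y):
    """Quadratic functional J(y) = 1/2 y^T A y - b^T y from (4.1.1)."""
    return 0.5 * y @ A @ y - b @ y

x_star = np.linalg.solve(A, b)   # x* = A^{-1} b; mathematically (1/3, 1/3)

rng = np.random.default_rng(0)
for _ in range(1000):
    x = rng.uniform(-2.0, 2.0, size=2)
    e = x - x_star
    # Identity (4.1.2): J(x) - J(x*) = 1/2 ||x - x*||_A^2
    assert np.isclose(J(x) - J(x_star), 0.5 * e @ A @ e)
    # Hence x* is a minimizer (small tolerance for round-off)
    assert J(x) >= J(x_star) - 1e-12

print(x_star, J(x_star))         # minimal value is mathematically -1/3
```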

The most natural iteration:<br />
