Numerical Methods Contents - SAM

Numerical Methods Contents - SAM

Numerical Methods Contents - SAM

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

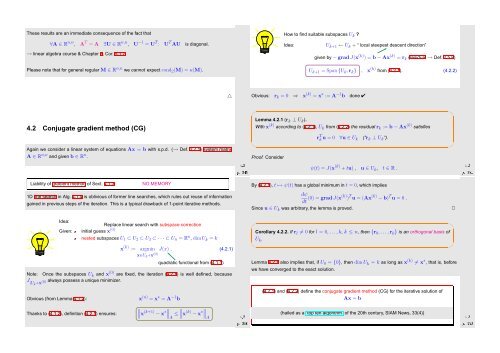

These results are an immediate consequence of the fact that

$$\forall A \in \mathbb{R}^{n,n},\ A^T = A:\quad \exists U \in \mathbb{R}^{n,n},\ U^{-1} = U^T:\quad U^T A U \text{ is diagonal}$$

(→ linear algebra course & Chapter 5, Cor. 5.1.7).

Please note that for a general regular $M \in \mathbb{R}^{n,n}$ we cannot expect $\operatorname{cond}_2(M) = \kappa(M)$.

How to find suitable subspaces $U_k$?

Idea: $U_{k+1} \leftarrow U_k$ + “local steepest descent direction”, given by

$$-\operatorname{grad} J(x^{(k)}) = b - Ax^{(k)} = r_k \qquad (\text{residual} \to \text{Def. 2.5.8}),$$

$$U_{k+1} = \operatorname{Span}\{U_k, r_k\}, \quad x^{(k)} \text{ from (4.2.1)}. \tag{4.2.2}$$

△

Obvious: $r_k = 0 \Rightarrow x^{(k)} = x^* := A^{-1}b$, done ✔
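Definitions (4.2.1) and (4.2.2) can be turned directly into a deliberately inefficient algorithm. Keep the residuals as spanning vectors of $U_k$, and minimize $J$ over $x^{(0)} + U_k$ by solving a small dense Galerkin system at each step. The sketch below assumes $U_0 = \{0\}$ and $J(x) = \tfrac12 x^T A x - b^T x$, which is consistent with $\operatorname{grad} J(x) = Ax - b$. The helper names (`dot`, `matvec`, `solve_dense`, `subspace_correction`) are hypothetical, and this is not a practical CG implementation.

```python
# Sketch of the iterates defined by (4.2.1)/(4.2.2), assuming U_0 = {0} and
# J(x) = 1/2 x^T A x - b^T x. Deliberately inefficient: every step re-solves a
# dense Galerkin system. All helper names are hypothetical.

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(A, x):
    return [dot(row, x) for row in A]


def solve_dense(M, rhs):
    """Solve M c = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [[float(m) for m in M[i]] + [float(rhs[i])] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(aug[i][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(col + 1, n):
            f = aug[i][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[i][j] -= f * aug[col][j]
    c = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * c[j] for j in range(i + 1, n))
        c[i] = (aug[i][n] - s) / aug[i][i]
    return c


def subspace_correction(A, b, x0, tol=1e-12):
    """Return the iterates x^(k) and residuals r_k defined by (4.2.1)/(4.2.2)."""
    n = len(b)
    x = [float(v) for v in x0]
    r0 = [bi - ai for bi, ai in zip(b, matvec(A, x))]   # r_0 = b - A x^(0)
    iterates, residuals, basis = [x], [r0], []
    while len(basis) < n and dot(residuals[-1], residuals[-1]) ** 0.5 > tol:
        basis.append(residuals[-1])                      # U_{k+1} = Span{U_k, r_k}
        AV = [matvec(A, v) for v in basis]
        G = [[dot(vi, avj) for avj in AV] for vi in basis]   # V^T A V
        c = solve_dense(G, [dot(v, r0) for v in basis])      # V^T A V c = V^T r_0
        x = [x0i + sum(cj * v[i] for cj, v in zip(c, basis))
             for i, x0i in enumerate(x0)]                # x^(k) = x^(0) + V c
        iterates.append(x)
        residuals.append([bi - ai for bi, ai in zip(b, matvec(A, x))])
    return iterates, residuals
```

Consider the s.p.d. matrix `A = [[4, 1, 0], [1, 3, 1], [0, 1, 2]]` with `b = [1, 2, 3]`. The call `subspace_correction(A, b, [0, 0, 0])` performs at most three corrections, and the returned residuals are mutually orthogonal up to round-off.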


4.2 Conjugate gradient method (CG)

Lemma 4.2.1 ($r_k \perp U_k$). With $x^{(k)}$ according to (4.2.1) and $U_k$ from (4.2.2), the residual $r_k := b - Ax^{(k)}$ satisfies

$$r_k^T u = 0 \quad \forall u \in U_k \qquad (\text{i.e. } r_k \perp U_k).$$

Again we consider a linear system of equations $Ax = b$ with s.p.d. (→ Def. 2.7.1) system matrix $A \in \mathbb{R}^{n,n}$ and given $b \in \mathbb{R}^n$.

Proof. Consider

$$\psi(t) = J(x^{(k)} + tu), \qquad u \in U_k,\ t \in \mathbb{R}.$$

Since $x^{(k)} + tu \in U_k + x^{(0)}$ for all $t$, (4.2.1) implies that $t \mapsto \psi(t)$ has a global minimum in $t = 0$. Therefore

$$\frac{d\psi}{dt}(0) = \operatorname{grad} J(x^{(k)})^T u = (Ax^{(k)} - b)^T u = 0.$$

Since $u \in U_k$ was arbitrary, the lemma is proved.

✷

Liability of the gradient method of Sect. 4.1.3: NO MEMORY

The 1D line search in Alg. 4.1.4 is oblivious of former line searches, which rules out reuse of information gained in previous steps of the iteration. This is a typical drawback of 1-point iterative methods.
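The sketch below illustrates the "no memory" property. It assumes that Alg. 4.1.4 is the standard gradient (steepest descent) method with exact line search for $J(x) = \tfrac12 x^T A x - b^T x$. The step length $t = r^T r / r^T A r$ is the exact minimizer of $t \mapsto J(x + tr)$. Each step uses only the current point and discards all earlier search directions. The function names are hypothetical.

```python
# Sketch of the gradient (steepest descent) method with exact 1D line search,
# assuming this is the form of Alg. 4.1.4 and J(x) = 1/2 x^T A x - b^T x.
# Helper names are hypothetical.

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(A, x):
    return [dot(row, x) for row in A]


def steepest_descent(A, b, x0, tol=1e-10, maxit=10_000):
    """Return the final iterate and the number of line searches performed."""
    x = [float(v) for v in x0]
    steps = 0
    while steps < maxit:
        r = [bi - ai for bi, ai in zip(b, matvec(A, x))]   # r = -grad J(x)
        rr = dot(r, r)
        if rr ** 0.5 <= tol:
            break
        # Exact minimizer of t -> J(x + t r); only the current point is used,
        # nothing from earlier steps ("no memory").
        t = rr / dot(r, matvec(A, r))
        x = [xi + t * ri for xi, ri in zip(x, r)]
        steps += 1
    return x, steps
```

On the 2×2 s.p.d. system `A = [[4, 1], [1, 3]]`, `b = [1, 2]` with a zero initial guess, the method zigzags towards $x^* = (1/11, 7/11)^T$. It needs more than two line searches, whereas minimizing over nested subspaces as in (4.2.1) reaches $x^*$ after at most $n = 2$ steps.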

Idea: Replace the line search with subspace correction.

Given: an initial guess $x^{(0)}$ and nested subspaces $U_1 \subset U_2 \subset U_3 \subset \cdots \subset U_n = \mathbb{R}^n$, $\dim U_k = k$, define

$$x^{(k)} := \operatorname*{argmin}_{x \in U_k + x^{(0)}} J(x), \tag{4.2.1}$$

with $J$ the quadratic functional from (4.1.1).

Note: Once the subspaces $U_k$ and $x^{(0)}$ are fixed, the iteration (4.2.1) is well defined, because $J|_{U_k + x^{(0)}}$ is a strictly convex quadratic functional ($A$ is s.p.d.). It therefore always possesses a unique minimizer.
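The following short derivation shows how the minimizer in (4.2.1) can be characterized. It assumes that (4.1.1) reads $J(x) = \tfrac12 x^T A x - b^T x$, which is consistent with $\operatorname{grad} J(x) = Ax - b$ as used in the proof of Lemma 4.2.1. It also assumes that the columns of a (hypothetical) matrix $V_k \in \mathbb{R}^{n,k}$ form a basis of $U_k$:

$$\begin{aligned}
J(x^{(0)} + V_k c) &= J(x^{(0)}) - c^T V_k^T r_0 + \tfrac12\, c^T V_k^T A V_k\, c, \qquad r_0 := b - Ax^{(0)},\\
\nabla_c J(x^{(0)} + V_k c) = 0 \;&\Longleftrightarrow\; V_k^T A V_k\, c = V_k^T r_0, \qquad x^{(k)} = x^{(0)} + V_k c.
\end{aligned}$$

Since $V_k$ has full column rank and $A$ is s.p.d., the $k \times k$ matrix $V_k^T A V_k$ is s.p.d. as well. Hence this Galerkin system has exactly one solution.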

Corollary 4.2.2. If $r_l \neq 0$ for $l = 0,\dots,k$, $k \le n$, then $\{r_0,\dots,r_k\}$ is an orthogonal basis of $U_{k+1}$.

Lemma 4.2.1 also implies that, if $U_0 = \{0\}$, then $\dim U_k = k$ as long as $x^{(k)} \neq x^*$, that is, before we have converged to the exact solution.
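The orthogonality behind Corollary 4.2.2 follows in one line from Lemma 4.2.1 and the nesting built into (4.2.2). The sketch below assumes $U_0 = \{0\}$:

$$\begin{aligned}
&j < k:\quad r_j \in \operatorname{Span}\{U_j, r_j\} = U_{j+1} \subseteq U_k \;\overset{\text{Lemma 4.2.1}}{\Longrightarrow}\; r_k^T r_j = 0,\\
&U_0 = \{0\} \;\Longrightarrow\; U_{k+1} = \operatorname{Span}\{r_0, \dots, r_k\}.
\end{aligned}$$

Nonzero, mutually orthogonal vectors are linearly independent. This also gives $\dim U_{k+1} = k+1$ as long as $r_k \neq 0$.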

Obvious (from Lemma 4.1.2): Thanks to (4.1.2), the nesting $x^{(0)} + U_k \subset x^{(0)} + U_{k+1}$ and $U_n = \mathbb{R}^n$, definition (4.2.1) ensures

$$x^{(n)} = x^* = A^{-1}b, \qquad \|x^{(k+1)} - x^*\|_A \le \|x^{(k)} - x^*\|_A.$$
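Both claims follow from completing the square with $b = Ax^*$. This assumes that (4.1.1) is $J(x) = \tfrac12 x^T A x - b^T x$; the exact statements of Lemma 4.1.2 and (4.1.2) are not part of this excerpt:

$$\begin{aligned}
J(x) &= \tfrac12 (x - x^*)^T A (x - x^*) - \tfrac12 b^T x^* = \tfrac12 \|x - x^*\|_A^2 - \tfrac12 b^T x^*,\\
x^{(k)} \in x^{(0)} + U_k \subset x^{(0)} + U_{k+1} \;&\Longrightarrow\; \|x^{(k+1)} - x^*\|_A^2 = 2J(x^{(k+1)}) + b^T x^* \le 2J(x^{(k)}) + b^T x^* = \|x^{(k)} - x^*\|_A^2.
\end{aligned}$$

Finally, $x^{(0)} + U_n = \mathbb{R}^n$ contains $x^*$, the unique minimizer of $J$, so $x^{(n)} = x^*$.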

(4.2.1) and (4.2.2) define the conjugate gradient method (CG) for the iterative solution of $Ax = b$ (hailed as a “top ten algorithm” of the 20th century, SIAM News, 33(4)).
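In practice CG is not implemented by re-solving a Galerkin system in each step. A widely used equivalent form is the Hestenes-Stiefel short recurrence, which in exact arithmetic produces the same iterates as (4.2.1)/(4.2.2) with $U_0 = \{0\}$. The following is a minimal pure-Python sketch of that recurrence. The function names, the default of `maxit = n` steps and the residual-norm stopping rule are illustrative assumptions.

```python
# Sketch of the standard short-recurrence (Hestenes-Stiefel) form of CG.
# In exact arithmetic it reproduces the iterates of (4.2.1)/(4.2.2) with
# U_0 = {0}; names and the stopping rule are assumptions for illustration.

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(A, x):
    return [dot(row, x) for row in A]


def cg(A, b, x0, tol=1e-10, maxit=None):
    """Approximate the solution of A x = b (A s.p.d.); return (x, step count)."""
    maxit = len(b) if maxit is None else maxit
    x = [float(v) for v in x0]
    r = [bi - ai for bi, ai in zip(b, matvec(A, x))]   # r_0 = b - A x^(0)
    p = r[:]                                           # first search direction
    rr = dot(r, r)
    steps = 0
    while steps < maxit and rr ** 0.5 > tol:
        Ap = matvec(A, p)
        alpha = rr / dot(p, Ap)                        # exact line search along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]   # updated residual
        rr_new = dot(r, r)
        beta = rr_new / rr                             # makes next p A-conjugate
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rr = rr_new
        steps += 1
    return x, steps
```

Only one matrix-vector product and a few vector updates are needed per step, instead of a growing dense system. Round-off can destroy the exact termination after $n$ steps for larger or ill-conditioned systems, so a larger `maxit` may be needed there.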