Numerical Methods Contents - SAM



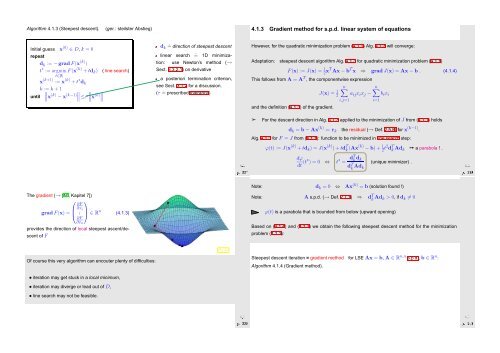

Algorithm 4.1.3 (Steepest descent).<br />

(ger.: steilster Abstieg)<br />

4.1.3 Gradient method for s.p.d. linear system of equations<br />

Initial guess $x^{(0)} \in D$, $k = 0$<br />

repeat<br />

$d_k := -\operatorname{grad} F(x^{(k)})$<br />

$t^* := \operatorname{argmin}_{t \in \mathbb{R}} F(x^{(k)} + t d_k)$ (line search)<br />

$x^{(k+1)} := x^{(k)} + t^* d_k$<br />

$k := k + 1$<br />

until $\|x^{(k)} - x^{(k-1)}\| \le \tau \|x^{(k)}\|$<br />

$d_k$ ≙ direction of steepest descent<br />

line search ≙ 1D minimization: use Newton's method (→ Sect. 3.3.2.1) on the derivative<br />

a posteriori termination criterion, see Sect. 3.1.2 for a discussion ($\tau$ ≙ prescribed tolerance)<br />
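The loop of Alg. 4.1.3 can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the caller supplies the gradient `grad` and the Hessian `hess` as functions (both names, the Euclidean norm, and the test function `grad_F`/`hess_F` are hypothetical choices, not part of the notes), and the line search runs Newton's method on $\varphi'(t)$ with $\varphi''(t) = d_k^T H d_k$.

```python
import math

def steepest_descent(grad, hess, x0, tol=1e-10, max_iter=10_000):
    """Sketch of Alg. 4.1.3. grad(x) -> list, hess(x) -> list of rows (both assumed)."""
    x = [float(v) for v in x0]
    for k in range(1, max_iter + 1):
        d = [-g for g in grad(x)]               # d_k := -grad F(x^(k))
        if not any(d):                          # gradient vanishes: stationary point
            return x, k - 1

        # Line search: Newton's method applied to phi'(t) = grad F(x + t d) . d
        t = 0.0
        for _ in range(50):
            y = [xi + t * di for xi, di in zip(x, d)]
            dphi = sum(gi * di for gi, di in zip(grad(y), d))
            d2phi = sum(di * sum(hij * dj for hij, dj in zip(row, d))
                        for di, row in zip(d, hess(y)))   # phi''(t) = d^T H d
            if d2phi <= 0.0:                    # no upward curvature along d: stop searching
                break
            step = dphi / d2phi
            t -= step
            if abs(step) <= 1e-12 * max(1.0, abs(t)):
                break

        x_new = [xi + t * di for xi, di in zip(x, d)]
        diff = math.sqrt(sum((a - c) ** 2 for a, c in zip(x_new, x)))
        norm = math.sqrt(sum(a * a for a in x_new))
        x = x_new
        if diff <= tol * norm:                  # ||x^(k) - x^(k-1)|| <= tau ||x^(k)||
            return x, k
    return x, max_iter


# Hypothetical test problem: F(x) = (x_0 - 1)^2 + 2 (x_1 + 3)^2, minimum at (1, -3)
grad_F = lambda x: [2.0 * (x[0] - 1.0), 4.0 * (x[1] + 3.0)]
hess_F = lambda x: [[2.0, 0.0], [0.0, 4.0]]
x_min, iters = steepest_descent(grad_F, hess_F, [0.0, 0.0])
```

For a general $F$ this sketch does nothing to keep the iterates inside $D$, and the Newton line search may fail; it gives up and keeps the current $t$ as soon as the curvature along $d_k$ is not positive.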

However, for the quadratic minimization problem (4.1.1) Alg. 4.1.3 will converge:<br />

Adaptation: steepest descent algorithm Alg. 4.1.3 for quadratic minimization problem (4.1.1)<br />

$F(x) := J(x) = \frac{1}{2} x^T A x - b^T x \;\Rightarrow\; \operatorname{grad} J(x) = Ax - b$ . (4.1.4)<br />

This follows from A = A T , the componentwise expression<br />

$J(x) = \frac{1}{2} \sum_{i,j=1}^{n} a_{ij} x_i x_j - \sum_{i=1}^{n} b_i x_i$<br />

and the definition (4.1.3) of the gradient.<br />
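The step from the componentwise expression to $\operatorname{grad} J(x) = Ax - b$ can be written out as below. The index $\ell$ is introduced here only for illustration, to avoid a clash with the iteration counter $k$.

```latex
\begin{align*}
\frac{\partial J}{\partial x_\ell}(x)
  &= \frac{1}{2} \sum_{j=1}^{n} a_{\ell j} x_j
   + \frac{1}{2} \sum_{i=1}^{n} a_{i \ell} x_i - b_\ell \\
  &= \sum_{j=1}^{n} a_{\ell j} x_j - b_\ell
     \qquad (\text{since } a_{i\ell} = a_{\ell i}) \\
  &= (Ax - b)_\ell , \qquad \ell = 1, \ldots, n .
\end{align*}
```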

➣ For the descent direction in Alg. 4.1.3 applied to the minimization of $J$ from (4.1.1), we have<br />

$d_k = b - Ax^{(k)} =: r_k$, the residual (→ Def. 2.5.8) for $x^{(k)}$.<br />

Alg. 4.1.3 for F = J from (4.1.1): function to be minimized in line search step:<br />

$\varphi(t) := J(x^{(k)} + t d_k) = J(x^{(k)}) + t\, d_k^T (Ax^{(k)} - b) + \frac{1}{2} t^2\, d_k^T A d_k$ ➙ a parabola!<br />

$\frac{d\varphi}{dt}(t^*) = 0 \;\Leftrightarrow\; t^* = \frac{d_k^T d_k}{d_k^T A d_k}$ (unique minimizer).<br />


Note: $d_k = 0 \;\Leftrightarrow\; Ax^{(k)} = b$ (solution found!)<br />
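For the quadratic functional $J$ the line search needs no inner iteration, because $t^*$ is given in closed form. A minimal sketch of the resulting iteration for $Ax = b$ follows, assuming $A$ is s.p.d. and stored as a list of rows. The helper names `matvec` and `dot`, the function name `gradient_method`, and the $2 \times 2$ example system are hypothetical and not taken from the notes.

```python
import math

def matvec(A, x):
    """Dense matrix-vector product; A is a list of rows."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in A]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def gradient_method(A, b, x0, tol=1e-10, max_iter=10_000):
    """Steepest descent for J(x) = 1/2 x^T A x - b^T x, A assumed s.p.d."""
    x = [float(v) for v in x0]
    for k in range(1, max_iter + 1):
        r = [bi - ai for bi, ai in zip(b, matvec(A, x))]   # d_k = r_k = b - A x^(k)
        rr = dot(r, r)
        if rr == 0.0:                                      # d_k = 0  <=>  A x^(k) = b
            return x, k - 1
        t = rr / dot(r, matvec(A, r))                      # t* = d^T d / (d^T A d)
        x_new = [xi + t * ri for xi, ri in zip(x, r)]
        diff = math.sqrt(sum((a - c) ** 2 for a, c in zip(x_new, x)))
        norm = math.sqrt(dot(x_new, x_new))
        x = x_new
        if diff <= tol * norm:                             # relative change below tolerance
            return x, k
    return x, max_iter


# Hypothetical s.p.d. example; the exact solution is (1/11, 7/11)
A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
x_sol, iters = gradient_method(A, b, [0.0, 0.0])
```

The sketch recomputes the residual from scratch in every step and therefore spends two matrix-vector products per iteration; that choice favors readability over efficiency.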

The gradient (→ [40, Chapter 7])<br />

$\operatorname{grad} F(x) = \left( \frac{\partial F}{\partial x_1}, \ldots, \frac{\partial F}{\partial x_n} \right)^T \in \mathbb{R}^n$ (4.1.3)<br />

provides the direction of local steepest ascent/descent of $F$.<br />

Note: $A$ s.p.d. (→ Def. 2.7.1) ⇒ $d_k^T A d_k > 0$ if $d_k \neq 0$, so<br />

$\varphi(t)$ is a parabola that is bounded from below (upward opening).<br />

Based on (4.1.4) and (4.1.3) we obtain the following steepest descent method for the minimization problem (4.1.1):<br />

Fig. 43<br />

Of course, this very algorithm can encounter plenty of difficulties:

• iteration may get stuck in a local minimum,

• iteration may diverge or lead out of D,

• line search may not be feasible.

Steepest descent iteration = gradient method for LSE $Ax = b$, $A \in \mathbb{R}^{n,n}$ s.p.d., $b \in \mathbb{R}^n$:

Algorithm 4.1.4 (Gradient method).
